Artificial intelligence has become the nervous system of modern business. It powers personalization, improves operations, detects fraud, and automates tasks at scale. But there’s an uncomfortable truth executives are starting to face: the very data that would make AI systems more effective often falls into the category companies cannot legally access.

On one side lies the promise of hyper-personalization and predictive intelligence. On the other stands the growing wall of global data protection laws: GDPR in Europe, CCPA in California, and dozens of emerging frameworks worldwide. The collision of these forces has created one of the defining business challenges of the decade: how can companies leverage AI without overstepping legal boundaries or losing customer trust?

This article unpacks why AI craves restricted data, the risks businesses face when attempting to use it, and the strategies forward-thinking organizations are adopting to remain both competitive and compliant. You will discover:

- Why modern AI systems crave highly personal and contextual data, and why it’s often off-limits.

- The legal, reputational, and operational risks businesses face when handling restricted data.

- The paradox of personalization: why customer expectations collide with compliance boundaries.

- Emerging technical solutions, such as synthetic data, federated learning, and differential privacy, and their limitations.

- Strategies leading companies use to build trust-centric AI that balances innovation with regulation.

- Practical steps executives can take to align AI ambitions with global data protection laws.

- How Mitrix helps organizations deploy privacy-first AI that is both powerful and compliant.

Why AI needs the “forbidden” data

AI is only as good as the data it trains on and the context it operates within. To deliver real value, machine learning systems thrive on information that is:

- Richly contextual. Purchase history, browsing behavior, and location data enable predictive models to anticipate customer needs.

- Highly personal. Sensitive attributes (health records, biometric data, financial histories) unlock personalization at a near-individual level.

- Cross-platform. Connecting identities across devices and channels creates a 360-degree customer view.

Here’s the paradox: much of this data is now classified as personally identifiable information (PII) or sensitive personal data. Accessing, storing, or processing it without a lawful basis, most often explicit consent, is generally prohibited. Yet without it, many AI models perform poorly, lacking the context to make accurate predictions or provide relevant recommendations.

For example:

- Retailers could substantially improve recommendation accuracy with granular behavioral tracking, but cookie restrictions and consent banners limit how much of that behavior they can observe.

- Banks could reduce fraud losses with biometric data, but regulatory frameworks impose strict guardrails on how that information can be collected and stored.

- Healthcare providers could accelerate diagnosis with aggregated patient histories, but HIPAA and GDPR sharply restrict sharing across institutions.

AI systems “want” more data than regulators allow. That tension drives today’s innovation, but also leaves room for missteps.

The legal tightrope: where AI meets compliance

Executives can’t afford to treat this as just a technical issue. Mishandling data creates not only regulatory risks but also reputational damage that directly impacts market value.

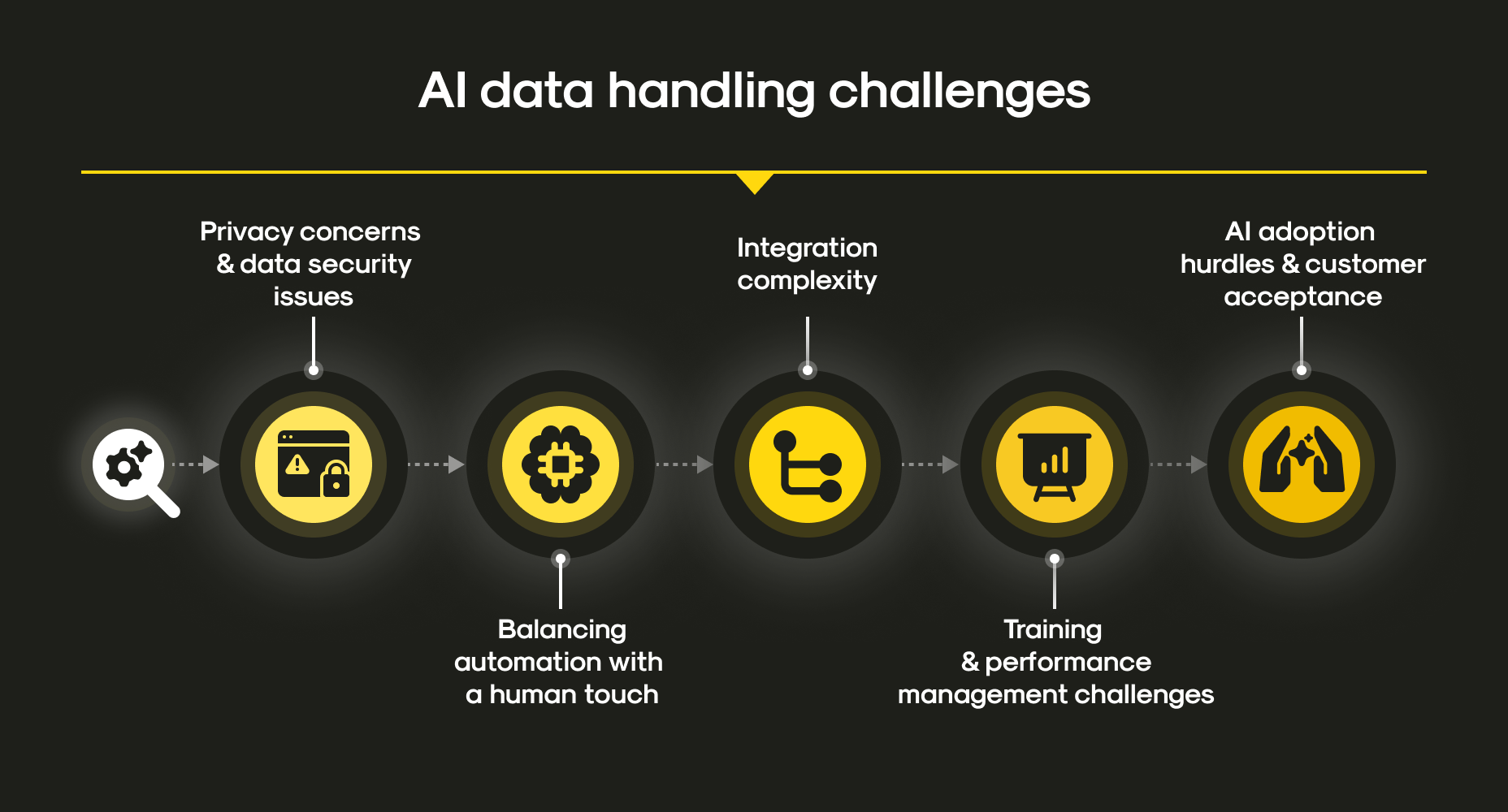

AI data handling challenges

1. Regulatory risks

- GDPR fines for the most serious infringements can reach €20 million or 4% of global annual turnover, whichever is higher. Several global brands have already faced penalties in the hundreds of millions.

- CCPA gives California residents a private right of action after certain data breaches, with statutory damages available even without demonstrating financial loss. Other violations are enforced by state regulators.

- Sector-specific rules (HIPAA, PCI DSS, PSD2) add extra layers of complexity.
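To make the scale of the GDPR exposure concrete, here is a minimal sketch of the upper bound for the most serious infringements, which is the higher of €20 million or 4% of global annual turnover. The function name and the sample turnover figures are illustrative assumptions, and actual fines are set case by case by supervisory authorities, usually well below the cap.

```python
def gdpr_max_fine_eur(global_annual_turnover_eur: float) -> float:
    """Upper bound for the most serious GDPR infringements.

    The cap is the higher of a fixed amount and a share of turnover.
    This is a ceiling, not a prediction of any actual penalty.
    """
    fixed_cap_eur = 20_000_000
    turnover_share = 0.04
    return max(fixed_cap_eur, turnover_share * global_annual_turnover_eur)


# Hypothetical companies: a mid-size firm and a large multinational.
mid_size_cap = gdpr_max_fine_eur(100_000_000)         # fixed cap applies
multinational_cap = gdpr_max_fine_eur(10_000_000_000)  # 4% of turnover applies
```

For a smaller company the fixed €20 million figure dominates, which is why the cap can exceed 4% of turnover.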

2. Trust erosion

Consumers are increasingly aware of their digital rights. A single headline about improper AI-driven data collection can undo years of brand building.

3. Operational risk

Improperly collected data can contaminate AI models. If regulators later determine that the data shouldn’t have been used, retraining may be required, leading to sunk costs and product delays.

Business pressures: why the temptation persists

If the risks are so high, why do businesses still push for restricted data?

- Competitive advantage. Personalization is proven to increase conversion and retention. Companies that hold back may fall behind rivals that stretch the limits.

- Investor pressure. Growth-hungry stakeholders want faster scaling through AI-powered customer intelligence.

- Customer expectations. Ironically, customers demand convenience (“show me what I need before I ask”) but resist sharing the data required to make it happen.

The result is a Catch-22: to compete, businesses need personalization, but to stay compliant, they must restrict the very data that enables it.

Technical workarounds (and their limitations)

Companies are experimenting with technical strategies to bridge this gap. While promising, each carries trade-offs.

1. Synthetic data

Firms generate artificial datasets that are statistically similar to real data but do not expose individuals. This enables AI training with far lower legal exposure, provided the generator does not memorize and reproduce real records. However, synthetic data can fail to capture rare but critical edge cases, reducing effectiveness in high-stakes scenarios like fraud detection.
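The idea can be sketched in a few lines of Python. This is a deliberately naive illustration, not a production generator: it assumes every column is numeric and roughly Gaussian, and it models each column independently. The function names and sample rows are hypothetical.

```python
import random
import statistics


def fit_gaussian_columns(rows):
    """Estimate the mean and standard deviation of each numeric column."""
    columns = list(zip(*rows))
    return [(statistics.mean(col), statistics.stdev(col)) for col in columns]


def sample_synthetic(params, n, seed=0):
    """Draw n synthetic rows, sampling each column independently."""
    rng = random.Random(seed)
    return [tuple(rng.gauss(mu, sigma) for mu, sigma in params) for _ in range(n)]


# Illustrative "real" data: (basket value, items per order).
real_rows = [(52.0, 3.0), (48.5, 2.0), (61.0, 4.0), (55.5, 3.0), (49.0, 1.0), (58.0, 5.0)]

params = fit_gaussian_columns(real_rows)
synthetic_rows = sample_synthetic(params, n=1000)
```

Because the columns are sampled independently, correlations between them are lost, and an unusual record such as a single fraudulent order would be smoothed away. That is the edge-case limitation in miniature, and it is why real generators model joint structure and are validated against the original data.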

2. Federated learning

Instead of pooling raw data in one place, models are trained locally on devices or servers, and only the model updates are shared with a central coordinator. Google and Apple already use this for predictive text and voice recognition. It minimizes exposure but requires advanced infrastructure and careful orchestration.
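A minimal sketch of the pattern, loosely following the federated averaging idea: each client trains on its own data and the server only ever sees weights. The one-parameter model `y = w * x`, the learning rate, and the client datasets are all assumptions chosen to keep the example small.

```python
def local_update(weight, data, lr=0.01, epochs=5):
    """Run a few gradient-descent steps on one client's private data."""
    w = weight
    for _ in range(epochs):
        # Gradient of mean squared error for the model y = w * x.
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_round(global_weight, client_datasets):
    """One round: clients train locally, the server averages their weights."""
    total = sum(len(d) for d in client_datasets)
    updates = [(local_update(global_weight, d), len(d)) for d in client_datasets]
    # Weight each client's result by how many samples it trained on.
    return sum(w * n for w, n in updates) / total


# Two hypothetical clients whose raw (x, y) pairs never leave this list.
clients = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(3.0, 9.0), (4.0, 12.0), (5.0, 15.0)],
]

w = 0.0
for _ in range(50):
    w = federated_round(w, clients)
```

Here the shared weight converges toward 3, the slope underlying both clients' data. Note that model updates can still leak information about the training data, which is why real deployments often combine this approach with secure aggregation or differential privacy.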

3. Differential privacy

By adding calibrated mathematical “noise” to query results or training updates, organizations can obscure any individual’s contribution while preserving aggregate insights. The challenge: adding too much noise degrades accuracy, while too little risks privacy leaks.
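The trade-off can be illustrated with the Laplace mechanism applied to a simple counting query. This is a sketch under stated assumptions: the data, the `epsilon` values, and the helper names are illustrative, and a real system would also track the cumulative privacy budget across queries.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw Laplace(0, scale) noise as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Return a noisy count of the records matching the predicate.

    Adding or removing one person changes a count by at most 1,
    so the sensitivity is 1 and the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1 / epsilon, rng)


def over_40(age):
    return age > 40


ages = [23, 35, 41, 29, 67, 52, 38, 71, 45, 33]  # illustrative data
rng = random.Random(42)

strong_privacy = private_count(ages, over_40, epsilon=0.1, rng=rng)  # more noise
weak_privacy = private_count(ages, over_40, epsilon=5.0, rng=rng)    # less noise
```

A smaller `epsilon` means stronger privacy and a noisier answer. With `epsilon=0.1` the typical error is around 10, larger than the true count of 5, while with `epsilon=5.0` it is around 0.2.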

4. Consent management platforms (CMPs)

Sophisticated CMPs allow users to manage consent dynamically. While this increases transparency, it introduces friction. Every “reject all cookies” click shrinks the dataset.
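In a data pipeline, honoring those choices usually means filtering records by purpose before they reach a training job. The sketch below assumes a hypothetical `ConsentRecord` structure and purpose names; real CMPs expose consent state through their own APIs and formats.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentRecord:
    user_id: str
    purposes: set = field(default_factory=set)  # purposes the user opted in to


def filter_by_consent(events, consents, purpose):
    """Keep only events from users who granted consent for the given purpose."""
    allowed = {c.user_id for c in consents if purpose in c.purposes}
    return [e for e in events if e["user_id"] in allowed]


consents = [
    ConsentRecord("u1", {"analytics", "personalization"}),
    ConsentRecord("u2", {"analytics"}),
    ConsentRecord("u3"),  # clicked "reject all"
]
events = [
    {"user_id": "u1", "item": "shoes"},
    {"user_id": "u2", "item": "jacket"},
    {"user_id": "u3", "item": "hat"},
    {"user_id": "u1", "item": "socks"},
]

training_events = filter_by_consent(events, consents, "personalization")
```

Only the two events from `u1` survive the filter, which shows how quickly opt-outs shrink the usable dataset. Users with no consent record at all are excluded by default, the safer choice when consent is the legal basis.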

Strategic shifts: building trust-centric AI

In 2025, forward-looking businesses are reframing the issue. Instead of asking, “How can we get around regulations?” they ask, “How can we design AI that customers actually want to share data with?”

1. Transparency as a competitive edge

Companies that clearly explain what data is collected, why, and how it benefits the customer can win trust. For instance, some retailers explicitly show how sharing purchase history leads to better discounts.

2. Value exchange models

Customers are more likely to share data if they receive tangible value in return: exclusive perks, personalized services, or faster experiences. Think loyalty programs designed for the age of data privacy.

3. Privacy-first architecture

Embedding privacy into AI workflows from day one (“privacy by design”) reduces long-term risk. This means minimizing data collection, anonymizing by default, and encrypting wherever possible.
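A minimal sketch of two of these habits at the point of ingestion: dropping every field the model does not need, and replacing the direct identifier with a keyed hash. The field allowlist, key, and sample record are assumptions. Note that a keyed hash is pseudonymization, not full anonymization: anyone holding the key can re-link records, and GDPR still treats pseudonymized data as personal data.

```python
import hashlib
import hmac

# Hypothetical allowlist: only the fields the model actually needs.
ALLOWED_FIELDS = {"user_id", "country", "basket_value"}


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 hash (HMAC)."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize(record: dict, secret_key: bytes) -> dict:
    """Drop fields outside the allowlist and pseudonymize the user identifier."""
    kept = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    if "user_id" in kept:
        kept["user_id"] = pseudonymize(str(kept["user_id"]), secret_key)
    return kept


key = b"demo-key-store-real-keys-in-a-secrets-manager"
raw = {
    "user_id": "alice@example.com",
    "country": "DE",
    "basket_value": 42.5,
    "date_of_birth": "1990-01-01",
    "ip_address": "203.0.113.7",
}

clean = minimize(raw, key)
```

The birth date and IP address never enter the pipeline. The same user still maps to the same token, so per-user features keep working. Encryption at rest and in transit would sit alongside this step and is left out here because it depends on the storage stack.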

4. Collaborative ecosystems

Industries like healthcare and finance are experimenting with secure data-sharing consortia. Shared governance models allow pooling sensitive data in ways regulators approve.

Executive playbook: navigating the data dilemma

For decision-makers, the task is less about finding a silver bullet and more about balancing opportunity with responsibility. Here’s a practical framework:

- Audit current data practices. Map what data is collected, where it flows, and how it feeds into AI systems. Many executives are surprised by hidden shadow data pipelines.

- Align AI goals with compliance. Define what business outcomes AI must achieve, then check whether they rely on restricted data.

- Invest in privacy-enhancing tech. Explore federated learning, synthetic data, and advanced encryption. These aren’t just defensive moves: they can become differentiators in the future.

- Elevate governance. Establish cross-functional data governance boards, combining legal, technical, and business voices.

- Communicate proactively. Don’t wait for regulators or journalists to raise questions. Be transparent in annual reports, user interfaces, and customer communications.

Looking ahead: regulations will tighten, not loosen

Executives hoping for a regulatory rollback are in for disappointment. The trend is clear: governments worldwide are strengthening data protection laws.

- The EU AI Act classifies some AI applications as “high-risk,” subjecting them to stricter oversight as its obligations phase in.

- U.S. federal regulators are drafting AI-specific frameworks.

- Emerging markets are introducing local data residency requirements, forcing companies to store and process data domestically.

The message is unmistakable: the era of “collect everything and apologize later” is over.

How Mitrix can help

At Mitrix, we build AI agents designed around your needs, whether you’re looking to boost customer support, unlock insights from data, or streamline operations. At the same time, we know the tension businesses face: AI thrives on data, but regulations limit what you can access, store, and use. Our role is to help companies innovate without crossing legal or ethical red lines. Here’s how we do it:

- Privacy-first architecture. Mitrix engineers design systems that prioritize data minimization, encryption, and compliance by default, so your AI models never overreach.

- Synthetic and federated data solutions. When customer data isn’t legally accessible, our team helps businesses use synthetic datasets, anonymized models, and federated learning to keep training pipelines alive without compromising privacy.

- Custom compliance frameworks. Every business operates under different regulations (GDPR, CCPA, HIPAA, etc.). We build AI systems that align with your sector’s exact legal requirements.

- Scalable integration. Instead of bolting on compliance as an afterthought, Mitrix integrates privacy-aware AI workflows directly into your existing tech stack, ensuring efficiency and security from day one.

- Ongoing governance. Laws change and markets shift. We provide continuous support so your AI evolves with compliance standards, not against them.

Bottom line: You don’t need to choose between innovation and regulation. You can deploy AI that is powerful, ethical, and legally sound, so your competitive edge doesn’t come with hidden risks.

Summing up

AI thrives on data, but not all data is fair game. The most powerful insights often lie in information businesses cannot legally or ethically touch. Companies that chase short-term gains by exploiting gray areas risk regulatory fines, reputational harm, and long-term instability.

The winning strategy isn’t to evade restrictions but to innovate within them through synthetic data, federated learning, differential privacy, and above all, trust-centric design. Businesses that treat data privacy as a feature, not a barrier, will outpace competitors. In the coming decade, the companies that succeed with AI will be those that master the paradox: creating intelligent, personalized experiences without ever crossing the legal and ethical line.