In the age of generative AI, one truth lurks behind every flashy demo: it took a lot of trial and error to get there. Prompt engineering has become both a critical skill and a moving target. Whether you’re refining prompts for a chatbot, wrangling LLMs into writing clean code, or generating marketing copy, one pattern holds: consistent results require constant tweaking.

And guess what: that tweaking isn’t just polish; it’s often the difference between brilliance and breakdown. One misplaced word can derail logic, change tone, or introduce hallucinations. Unlike traditional programming, where the same input reliably produces the same output, prompting is more like coaxing a performance from a very talented, slightly unpredictable actor. Mastery comes not from writing the perfect prompt once, but from understanding how and why the AI responds, then adjusting accordingly. In this article, you’ll explore:

- Why prompt engineering matters, and why flashy AI demos often hide hours of behind-the-scenes iteration.

- How prompt instability shows up in real use cases, from inconsistent tone to surprise hallucinations.

- The hidden complexity of working with LLMs, and why AI needs clear, structured coaching to behave.

- Common pitfalls that trip up even seasoned teams, such as brittle phrasing, overfitting, and prompt bloat.

- Where prompt engineering is headed, and why it’s evolving into full-fledged AI behavior design.

- How Mitrix turns prompt chaos into production-ready AI with modular architectures, scalable MLOps, and connectors that don’t break under pressure.

Why prompt engineering matters

Modern AI models, especially large language models (LLMs), are incredibly powerful, but also inherently stochastic. Ask them the same question twice, and you might get different answers. Change a word in your prompt, and you might unleash genius or gibberish. Prompt engineering is how we steer that unpredictability into something usable.

While traditional programming relies on strict logic, prompting is more like giving instructions to an alien intern with a photographic memory, impeccable grammar, no common sense, and a tendency to confidently make things up when unsure.

Examples of prompt instability

Even the most carefully crafted prompts can produce wildly inconsistent results. That’s because large language and image models don’t just “follow instructions”: they generate outputs probabilistically, shaped by their training data, the length of the context, and sampling settings such as temperature. Here are a few ways this unpredictability shows up in the wild:

- Same prompt, different tone. Ask an AI to “write a professional email” and get a stiff memo one time and a cheerful note the next.

- Code generation inconsistencies. Provide a seemingly clear prompt for a Python function and get five different versions, each with subtly different logic.

- Image generation surprises. Slight tweaks in prompt phrasing can lead to radically different visuals or outputs that completely miss the mark.
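To see why the same prompt can yield different outputs, here is a minimal sketch of temperature-scaled sampling over a toy next-word distribution. The candidate words and their scores (logits) are made-up numbers for illustration; real models sample over vocabularies of tens of thousands of tokens. The point is only that a low temperature concentrates probability on the top choice, while a higher temperature spreads it out and makes repeated runs diverge.

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    max_s = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - max_s) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_token(tokens, logits, temperature, rng):
    """Draw one token according to the temperature-scaled distribution."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]

# Hypothetical candidate next words after "Dear customer, we are ..."
tokens = ["pleased", "sorry", "thrilled", "writing"]
logits = [2.0, 1.5, 0.5, 1.0]

for t in (0.2, 1.0, 1.5):
    probs = softmax_with_temperature(logits, t)
    print(f"T={t}: " + ", ".join(f"{tok}={p:.2f}" for tok, p in zip(tokens, probs)))

rng = random.Random()  # unseeded: repeated runs can differ, just like repeated prompts
print([sample_token(tokens, logits, 1.0, rng) for _ in range(5)])
```

Run it a few times and the sampled list changes even though the “prompt” (the logits) never does.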

This inconsistency forces prompt engineers to constantly iterate, test, and document their prompts.

The hidden complexity: why AI needs coaching

Large language models aren’t thinking: they’re predicting what comes next based on patterns learned from enormous amounts of text. That means context, phrasing, and even punctuation can have outsized effects on output quality. Do you want consistent results? You’ll need to:

- Provide clear instructions (e.g., “Answer in bullet points”).

- Set tone and style (e.g., “Use formal business language”).

- Add framing context (e.g., “Imagine you’re an HR expert…”).

- Specify constraints (e.g., “No more than 100 words”).

- Define the format of the output (e.g., “Return as JSON”).
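The five ingredients above can be assembled programmatically so that every request carries them in the same order. The sketch below uses a hypothetical `PromptSpec` dataclass and `build_prompt` helper (illustrative names, not part of any library) that render framing, instruction, tone, constraints, and output format into one prompt string.

```python
from dataclasses import dataclass, field

@dataclass
class PromptSpec:
    """Hypothetical container for the building blocks of a prompt."""
    role: str                 # framing context, e.g. "an HR expert"
    task: str                 # the clear instruction itself
    tone: str = ""            # tone and style guidance
    constraints: list[str] = field(default_factory=list)  # hard limits
    output_format: str = ""   # expected shape of the answer

def build_prompt(spec: PromptSpec) -> str:
    """Render the spec into a prompt with a fixed, predictable layout."""
    lines = [f"You are {spec.role}.", f"Task: {spec.task}"]
    if spec.tone:
        lines.append(f"Tone: {spec.tone}")
    for rule in spec.constraints:
        lines.append(f"Constraint: {rule}")
    if spec.output_format:
        lines.append(f"Output format: {spec.output_format}")
    return "\n".join(lines)

spec = PromptSpec(
    role="an HR expert",
    task="Summarize the attached leave policy for new employees.",
    tone="Use formal business language.",
    constraints=["No more than 100 words."],
    output_format="Return as JSON with a 'bullets' list of strings.",
)
print(build_prompt(spec))
```

Keeping the layout fixed means that changing one ingredient shows up as a one-line difference, which makes later comparisons between prompt versions easier.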

Even then, results vary across models, temperature settings, and model versions. You’re not just telling the AI what to do: you’re nudging it toward coherence, one token at a time.

Life of a prompt engineer in a nutshell

Think of prompt engineering as UX design for AI: you’re designing the conversation. And just like user interfaces, prompts evolve over time. Let’s say you’re building a generative AI tool for customer support. Your first prompt might look like this: “Answer the customer’s question politely and clearly.”

But after dozens of inconsistent responses, you might revise it to: “You are a professional support agent for an e-commerce store. Always greet the user, summarize the issue, and provide a solution in three bullet points using clear, polite language.”

Now it works, at least until the model gets updated or starts hallucinating again. So you add rules for refund policies. Then a fallback phrase for when no solution is available. Then a check for sensitive topics. And on it goes. The prompt becomes a living document, constantly adjusted as use cases shift, outputs drift, or models update. Welcome to prompt drift, folks!
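One way to notice drift early is to check each response against the structure the prompt asks for. This minimal sketch assumes replies are plain text with “-” or “*” bullets and that a greeting starts with one of a few words we picked for illustration; `check_support_reply` is a hypothetical helper. It checks only two of the revised prompt’s rules (the greeting and the three bullet points), not whether the issue was summarized correctly.

```python
import re

# Assumed greeting openers; \b stops "hi" from matching words like "History".
GREETING = re.compile(r"^(hi|hello|dear|good (morning|afternoon))\b", re.IGNORECASE)
BULLET = re.compile(r"^[-*]\s+")

def check_support_reply(reply: str) -> list[str]:
    """Return a list of problems; an empty list means the reply matches the format."""
    lines = [ln.strip() for ln in reply.strip().splitlines() if ln.strip()]
    if not lines:
        return ["empty reply"]
    problems = []
    if not GREETING.match(lines[0]):
        problems.append("missing greeting")
    bullets = [ln for ln in lines if BULLET.match(ln)]
    if len(bullets) != 3:
        problems.append(f"expected 3 bullet points, found {len(bullets)}")
    return problems

good = """Hello Sam,
Your order arrived damaged.
- We have issued a replacement.
- It ships within 2 business days.
- Keep the damaged item; no return is needed."""
bad = "Your order arrived damaged. A replacement is on the way."

print(check_support_reply(good))
print(check_support_reply(bad))
```

A check like this will not judge tone or accuracy, but it turns “the answers feel off lately” into a count of replies that broke the format.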

Common pitfalls in prompt engineering

Prompt engineering may seem deceptively simple: just ask the model what you want, right? Well, not quite. Behind the scenes, even skilled teams hit stumbling blocks that undermine consistency and reliability, whether by trying to control every outcome or by assuming the model shares their intuition. Common traps include:

- Overfitting prompts. A prompt tuned too tightly to a handful of test inputs becomes fragile and narrow: it handles those cases well and stumbles on everything else.

- Brittle logic. Prompts that rely on rigid phrasing often break when the model is updated.

- Missing instructions. Assuming the AI will “understand” your intent without explicit cues.

- Prompt bloat. Adding too much context or too many rules can confuse the model.

So what is the solution? Treat prompt engineering like software development: test, version, monitor, and refactor.
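As a minimal sketch of the “test” part of that advice, the snippet below runs a prompt template over a fixed set of questions and checks each output against the prompt’s own constraints (valid JSON, a word limit). `fake_model` is a hypothetical stub standing in for a real LLM client, and `PROMPT_V2`, `check_output`, and `run_suite` are illustrative names, not a library API.

```python
import json
from typing import Callable

def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call; swap in your provider's client."""
    question = prompt.rsplit("Question:", 1)[-1].strip()
    return json.dumps({"answer": f"Short answer to: {question}"})

PROMPT_V2 = (
    "Answer in no more than 30 words. Return JSON with a single key 'answer'.\n"
    "Question: {question}"
)

def check_output(raw: str, max_words: int = 30) -> list[str]:
    """Validate one model output against the constraints stated in the prompt."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return ["not valid JSON"]
    if not isinstance(data, dict) or set(data) != {"answer"}:
        return ["unexpected JSON shape"]
    if len(str(data["answer"]).split()) > max_words:
        return [f"answer longer than {max_words} words"]
    return []

def run_suite(template: str, cases: list[str], model: Callable[[str], str]) -> dict:
    """Run every test question through the model and collect failures."""
    failures = {}
    for question in cases:
        problems = check_output(model(template.format(question=question)))
        if problems:
            failures[question] = problems
    return {"total": len(cases), "failed": len(failures), "failures": failures}

cases = ["What is your refund window?", "Do you ship to Canada?"]
print(run_suite(PROMPT_V2, cases, fake_model))
```

Re-running the same suite after every prompt revision or model update gives you a concrete list of failing cases instead of a vague sense that things got worse.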

Tools of the trade

Staying consistent with generative AI can feel like trying to pin down a cloud. That’s why prompt engineers increasingly rely on dedicated tools and workflows to tame the chaos and turn prompting into a repeatable craft. These tools don’t just save time; they also bring structure, versioning, and sanity to what can otherwise be a high-friction process:

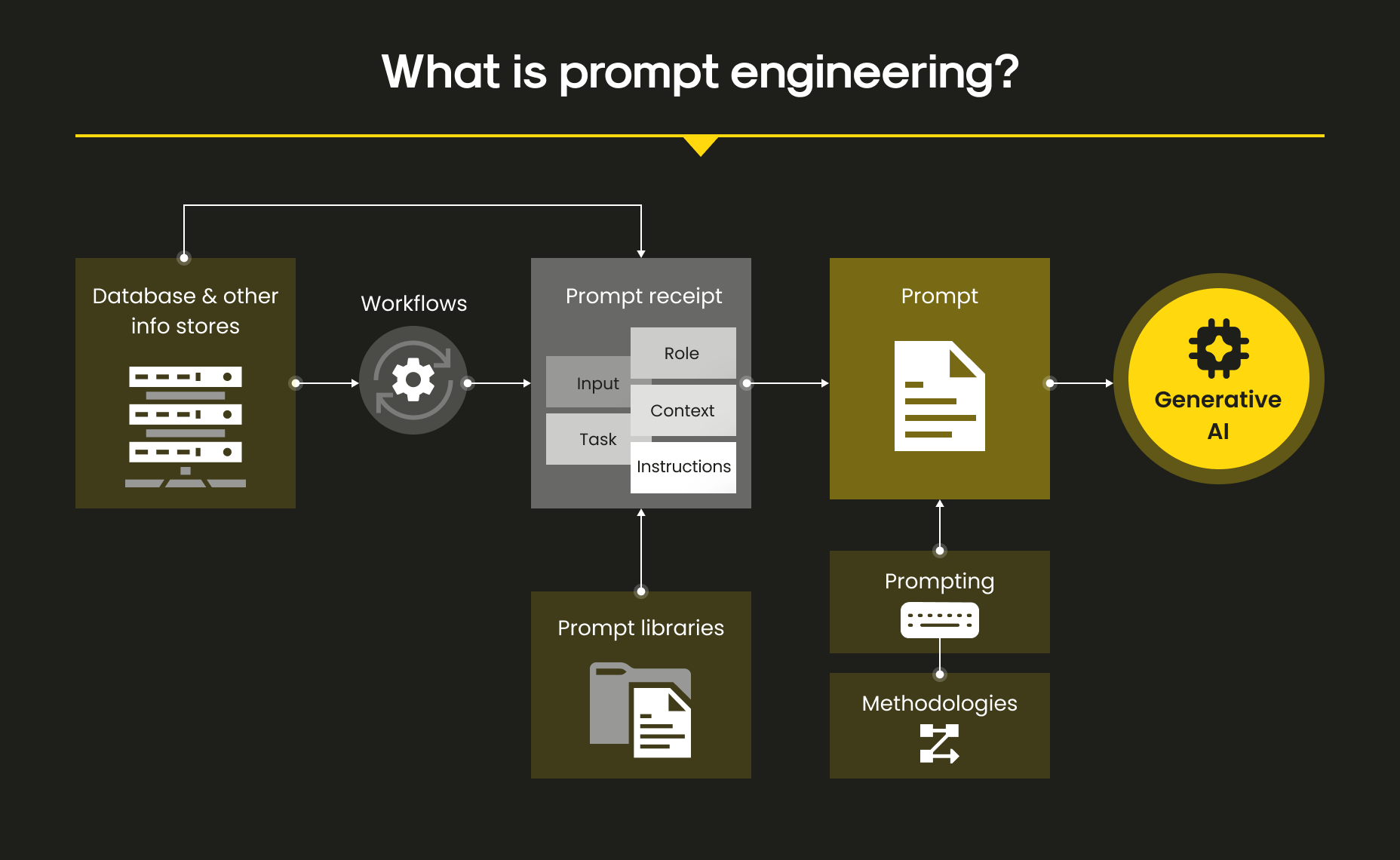

- Prompt libraries. Catalogs of tested prompts with use case tags and performance notes.

- Evaluation frameworks. Automated tools that score and benchmark outputs for quality, coherence, and accuracy.

- Playgrounds. Interfaces that allow engineers to experiment, tweak, and compare variations side by side.

- Version control. Yes, even for prompts. Tracking what worked, when, and why helps avoid repeating mistakes (and wasting hours).

Tools and frameworks like PromptLayer, LangChain’s prompt templates, and OpenAI’s Assistants API are emerging to help modularize and structure prompts like real code, because at this point, they practically are.
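A prompt library with annotations and version history can start very small. Here is a minimal in-memory sketch; `PromptLibrary`, `PromptEntry`, and `PromptVersion` are hypothetical names and do not reflect the API of PromptLayer, LangChain, or any other tool. Each save appends a new version with a note explaining why it changed, and tags make prompts discoverable by use case.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Iterable, Optional

@dataclass
class PromptVersion:
    text: str
    created: date
    note: str  # why this revision exists: what it fixed, known failure modes

@dataclass
class PromptEntry:
    name: str
    tags: set[str] = field(default_factory=set)
    versions: list[PromptVersion] = field(default_factory=list)

class PromptLibrary:
    """Hypothetical in-memory catalog: named prompts, tags, version history."""

    def __init__(self) -> None:
        self._entries: dict[str, PromptEntry] = {}

    def save(self, name: str, text: str, note: str,
             tags: Iterable[str] = (), when: Optional[date] = None) -> int:
        """Append a new version and return its 1-based version number."""
        entry = self._entries.setdefault(name, PromptEntry(name))
        entry.tags.update(tags)
        entry.versions.append(PromptVersion(text, when or date.today(), note))
        return len(entry.versions)

    def get(self, name: str, version: Optional[int] = None) -> PromptVersion:
        """Fetch a specific version, or the latest one if none is given."""
        versions = self._entries[name].versions
        if version is None:
            return versions[-1]
        if not 1 <= version <= len(versions):
            raise IndexError(f"{name} has no version {version}")
        return versions[version - 1]

    def find(self, tag: str) -> list[str]:
        """List the names of prompts carrying a given use-case tag."""
        return sorted(n for n, e in self._entries.items() if tag in e.tags)

lib = PromptLibrary()
lib.save("support-reply",
         "Answer the customer's question politely and clearly.",
         note="Baseline; tone and structure inconsistent across runs.",
         tags={"support", "e-commerce"})
lib.save("support-reply",
         "You are a professional support agent for an e-commerce store. "
         "Always greet the user, summarize the issue, and provide a solution "
         "in three bullet points using clear, polite language.",
         note="Added role, greeting, and three-bullet structure.")
print(lib.find("support"), lib.get("support-reply").note)
```

In a real setup the same records would usually live in a Git repository or a database, but the idea is the same: every change has a version number and a reason.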

Pro tip

Treat your best prompts as assets: annotate them, track their performance, and understand their failure modes. The more systematic your approach, the less time you’ll spend chasing ghosts in the output.

The ROI of great prompting

Do you think prompt engineering is a fad? Well, think again. As companies build products powered by LLMs, the difference between “good enough” and “production-ready” often comes down to prompt performance.

Effective prompting means:

- Less need for post-processing

- More reliable user experiences

- Lower compute costs (fewer retries and shorter inputs)

- Faster development cycles
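To make the retry point concrete, here is a back-of-the-envelope calculation under a simplifying assumption: each call fails independently with probability p and is retried until it succeeds. The number of calls per successful response then follows a geometric distribution, so with c tokens per call (1,000 below, an illustrative figure):

```latex
\begin{aligned}
\mathbb{E}[\text{calls}] &= \frac{1}{1-p}, \qquad \mathbb{E}[\text{tokens}] = \frac{c}{1-p} \\
p = 0.20:\quad \mathbb{E}[\text{tokens}] &= \frac{1000}{0.80} = 1250 \\
p = 0.05:\quad \mathbb{E}[\text{tokens}] &= \frac{1000}{0.95} \approx 1053
\end{aligned}
```

In this toy example, cutting the failure rate from 20% to 5% trims roughly 16% of tokens per successful response, before counting any savings from shorter prompts.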

In enterprise contexts (say, legal tech, healthcare, or financial automation) where correctness matters, prompt reliability is not just a nice-to-have. It’s a compliance issue, a trust signal, and a business differentiator.

Pro tip: Track prompt performance as you would any other key metric. Monitor failure rates, latency, and revision churn (how often a prompt has to be rewritten). Pair qualitative reviews (does the output feel right?) with quantitative data (token count, retries, latency). Over time, even small gains in prompt efficiency can add up to significant savings, especially at scale.
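A lightweight way to start is to log one record per model call and aggregate by prompt version. In this sketch the `CallRecord` fields and the sample numbers are invented for illustration; in practice the pass/fail flag would come from automated checks or human review.

```python
from dataclasses import dataclass
from statistics import mean

@dataclass
class CallRecord:
    prompt_version: str
    latency_ms: float
    tokens: int
    retries: int
    passed: bool  # did the output pass your checks (automated or human review)?

def summarize(records: list[CallRecord]) -> dict:
    """Aggregate per-version metrics: failure rate, latency, tokens, retries."""
    by_version: dict[str, list[CallRecord]] = {}
    for r in records:
        by_version.setdefault(r.prompt_version, []).append(r)
    report = {}
    for version, rs in sorted(by_version.items()):
        report[version] = {
            "calls": len(rs),
            "failure_rate": sum(not r.passed for r in rs) / len(rs),
            "avg_latency_ms": mean(r.latency_ms for r in rs),
            "avg_tokens": mean(r.tokens for r in rs),
            "retries_per_call": sum(r.retries for r in rs) / len(rs),
        }
    return report

# Made-up sample data for two prompt versions.
records = [
    CallRecord("v1", 820.0, 410, 1, False),
    CallRecord("v1", 760.0, 395, 0, True),
    CallRecord("v2", 640.0, 300, 0, True),
    CallRecord("v2", 700.0, 320, 0, True),
]
for version, stats in summarize(records).items():
    print(version, stats)
```

The number of versions in the report over a given period doubles as a rough measure of revision churn.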

Future of prompt engineering

Some say fine-tuning and embeddings will replace prompts. Others say LLMs will get smarter and better at following instructions on their own. Both may be true, but neither will kill the craft of prompting. Why?

The answer is simple: because human intent is fuzzy, and AI is literal. There will always be a gap to bridge, and that’s what prompt engineers do best.

Prompt engineering may evolve into something broader: AI behavior design. It won’t just be about tweaking inputs, but about shaping how AI systems interact with humans across contexts, goals, and values.

How Mitrix can help

At Mitrix, we offer AI/ML and generative AI development services to help businesses move faster, work smarter, and deliver more value. We go beyond proof-of-concept AI, building solutions that aren’t just viable but valuable, trusted, and adopted.

Our team specializes in helping organizations break free from the integration quicksand. Our engineers design MLOps architectures that emphasize scalability, maintainability, and future-proofing, not just short-term fixes.

- Connector strategy and audit. We start by analyzing your existing integrations and identifying where custom connectors are draining time and resources. Then, we recommend consolidation or replacement with standardized, low-maintenance alternatives.

- Modular, agent-based architecture. Instead of one-off solutions, we build modular workflows using reusable components and event-driven communication. This reduces interdependency chaos and improves observability.

- Custom connectors done right. When custom connectors are truly necessary, we build them with robust documentation, automated testing, and error monitoring to reduce downstream issues.

- End-to-end MLOps lifecycle support. From data pipelines to model serving and governance, we help you implement MLOps best practices that scale with your business and stay sane under load.

With Mitrix, you don’t just survive the integration mess; you evolve past it. Let’s build AI workflows that work with you, not against you. Contact us today!

Final thoughts

All in all, prompt engineering isn’t magic: it’s a messy, experimental process of communicating with a probabilistic machine. And to get consistent results, you need more than a good idea. You need iteration, precision, and patience.

The tweaking never really stops. But that’s not a flaw; it’s the nature of prompt engineering. Those who embrace it not just as a workaround but as a core design discipline will unlock the real power of generative AI.

Mastering prompt engineering means accepting the fuzziness of language (and the unpredictability of large language models) as part of the design space. It’s less about “solving” prompting and more about building systems that can adapt, learn, and improve alongside the models themselves. In a world where AI keeps evolving, so must the way we talk to it.