AI has graduated from buzzword to business backbone across industries. Companies use AI to power virtual assistants, smart search, and recommendation engines, and the technology is quickly becoming the brain behind modern software. But here’s the twist: building a truly useful AI experience isn’t just about plugging in ChatGPT and hoping for the best. It’s about orchestrating a conversation between language models, data sources, APIs, and logic. That’s where LangChain comes in.

LangChain turns language models into agents capable of reasoning, fetching data, calling tools, and delivering contextual responses that feel intelligent. Picture this: if a large language model (LLM) is the “brain,” LangChain is the nervous system. Let’s explore how it works and what problems it solves, shall we? In this article, you’ll learn:

- Why LLMs alone aren’t enough for real-world software applications

- What LangChain is and how it bridges the gap between AI models and business logic

- The five core building blocks that make LangChain powerful (Prompts, Chains, Tools, Memory, RAG)

- How LangChain turns chatbots into smart and context-aware agents

- The practical benefits of using LangChain in support, finance, coding, and healthcare

- The tradeoffs to consider before diving in

- Why LangChain is becoming the standard toolset for AI-enabled applications in 2025

- And how Mitrix can help you build custom AI agents that actually do the job

What is LangChain?

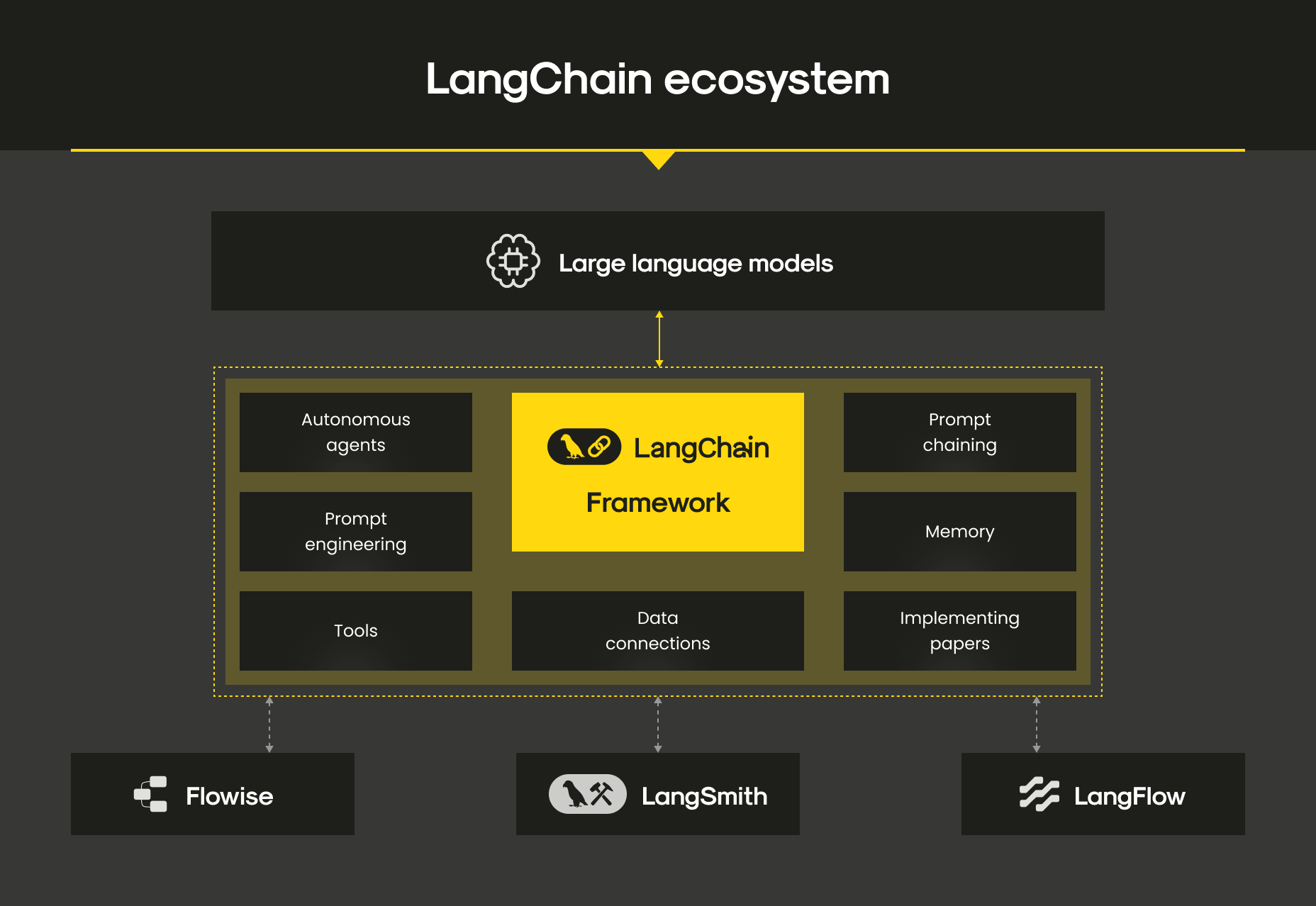

LangChain is an open-source framework designed to bridge the gap between LLMs and real-world applications. Think of it as middleware that connects your language model to memory, tools, APIs, and databases. It lets you build what’s called a “reasoning agent”: a model that doesn’t just respond but acts.

LangChain ecosystem

This framework gives developers the building blocks to create apps that use LLMs not just as answer machines, but as orchestrators that:

- Understand intent

- Decide what tools to use (e.g., a calculator, a SQL database, a web API)

- Execute those actions

- Use the results in a response

- Remember the conversation for next time

In short, LangChain lets you build the thinking layer of your software’s AI.

The problem: LLMs are smart, but not self-sufficient

As of today, large language models like GPT-4 are powerful at understanding and generating human language, but on their own they don’t come with persistent memory, access to real-time data, or the ability to interact with external systems. For instance, if you ask GPT-4 to check your database for recent orders, it can’t comply, because nothing connects the model to that database.

This is a critical gap in real-world software. Nowadays, businesses don’t need generic chatbots; they need AI that can:

- Remember previous user actions

- Pull in live data from APIs or documents

- Chain multiple reasoning steps together

- Execute custom logic like calling APIs or querying SQL

Without a framework to integrate all of that, you’re left with little more than a very capable autocomplete. So what can you do about it? Enter LangChain.

Core building blocks: how LangChain works

LangChain’s power lies in how it composes multiple tools into a cohesive system. Here are the key components:

1. Prompt templates

LangChain helps standardize and manage prompts for LLMs. Instead of hardcoding messy string templates, you can define modular, reusable prompts with variables. This improves clarity and makes versioning prompts much easier, which is essential for debugging or tuning.
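To make the idea of a reusable prompt concrete, here is a minimal sketch in plain Python. It uses the standard library’s `string.Template` rather than LangChain’s own prompt classes, and the prompt wording, the `render_prompt` helper, and the default company name are all made up for illustration.

```python
from string import Template

# Hypothetical reusable prompt: the wording and variable names are illustrative only.
SUPPORT_PROMPT = Template(
    "You are a support assistant for $company.\n"
    "Answer the customer's question in a $tone tone.\n"
    "Question: $question"
)


def render_prompt(question: str, company: str = "Acme Shop", tone: str = "friendly") -> str:
    """Fill every variable in the template and return the finished prompt."""
    return SUPPORT_PROMPT.substitute(company=company, tone=tone, question=question)


print(render_prompt("What is your return policy?"))
```

Because the template lives in one place, changing the tone or the instructions is a one-line edit that can be reviewed and versioned like any other code.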

2. Chains

This is where LangChain’s name comes from: chains are sequences of operations. For example, you might:

- Take a user’s question

- Parse it to extract the intent

- Run a search query in a database

- Format the results

- Feed everything back into the LLM for a final answer

LangChain lets you create these step-by-step flows declaratively, handling both data and logic transitions cleanly.
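The flow above can be sketched as a handful of small functions composed into one callable. This is plain Python, not LangChain’s API: `make_chain`, the step functions, and the in-memory “database” are hypothetical stand-ins, and the final step only builds the prompt that would be sent to the LLM.

```python
from functools import reduce
from typing import Any, Callable

Step = Callable[[Any], Any]


def make_chain(*steps: Step) -> Step:
    """Return a function that runs the given steps left to right."""
    def run(value: Any) -> Any:
        return reduce(lambda acc, step: step(acc), steps, value)
    return run


def extract_intent(question: str) -> dict:
    # Crude keyword check standing in for real intent parsing.
    intent = "order_status" if "order" in question.lower() else "general"
    return {"question": question, "intent": intent}


def search_database(state: dict) -> dict:
    # Made-up records standing in for a real database query.
    fake_db = {"order_status": ["Order 123: shipped"], "general": []}
    return {**state, "rows": fake_db[state["intent"]]}


def format_results(state: dict) -> dict:
    context = "\n".join(state["rows"]) or "(no matching records)"
    return {**state, "context": context}


def build_final_prompt(state: dict) -> str:
    # In a real application this string would be sent to the LLM.
    return f"Context:\n{state['context']}\n\nQuestion: {state['question']}"


answer_chain = make_chain(extract_intent, search_database, format_results, build_final_prompt)
print(answer_chain("Where is my order?"))
```

Each step takes the previous step’s output, so a step can be swapped or tested in isolation without touching the rest of the flow.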

3. Tools and agents

Tools are external functions the LLM can call, like a weather API, a Python function, or a database query. Agents are what make the decision to use them.

LangChain’s agent framework is where things get spicy: it lets the LLM figure out which tool to use, in what order, and how to process the results. If you want an analogy, it’s like giving your AI a Swiss Army knife.
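Here is a rough sketch of the tool-and-agent split in plain Python. The important assumption: `choose_tool` is a simple rule that stands in for the LLM’s decision, and the two tools return canned or locally computed results. None of these names come from LangChain itself.

```python
from typing import Callable, Dict, Tuple


def get_weather(city: str) -> str:
    # Canned answer standing in for a real weather API call.
    return f"Sunny in {city}"


def calculate(expression: str) -> str:
    # Tiny calculator for inputs shaped like "a op b", e.g. "2 * 21".
    left, op, right = expression.split()
    a, b = float(left), float(right)
    results = {"+": a + b, "-": a - b, "*": a * b}
    return str(results[op])


# The registry of tools the "agent" is allowed to call.
TOOLS: Dict[str, Callable[[str], str]] = {
    "weather": get_weather,
    "calculator": calculate,
}


def choose_tool(user_input: str) -> Tuple[str, str]:
    """Stand-in for the LLM's decision: returns (tool_name, tool_argument)."""
    prefix = "weather in "
    if user_input.lower().startswith(prefix):
        return "weather", user_input[len(prefix):]
    return "calculator", user_input


def run_agent(user_input: str) -> str:
    tool_name, argument = choose_tool(user_input)
    observation = TOOLS[tool_name](argument)
    return f"[{tool_name}] {observation}"


print(run_agent("Weather in Paris"))
print(run_agent("2 * 21"))
```

In a real agent, the decision in `choose_tool` would be made by the model from the tool descriptions, and the observation would be fed back to the model to produce the final reply.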

4. Memory

Single-turn responses are fine, but real applications often need persistence. LangChain provides memory modules to store and recall previous interactions, enabling ongoing context. This is essential for multi-turn conversations, long-form reasoning, or simply remembering that the user prefers metric over imperial units.
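As an illustration of what a memory module does, the sketch below keeps a sliding window of recent turns plus a few long-lived facts, and renders both as context for the next prompt. `ConversationMemory` is a hypothetical class written for this article, not one of LangChain’s memory implementations.

```python
from collections import deque
from typing import Deque, Dict, Tuple


class ConversationMemory:
    """Keeps the last few turns plus durable user facts (illustrative only)."""

    def __init__(self, max_turns: int = 3) -> None:
        # deque(maxlen=...) silently drops the oldest turn once the window is full.
        self.turns: Deque[Tuple[str, str]] = deque(maxlen=max_turns)
        self.facts: Dict[str, str] = {}

    def remember(self, key: str, value: str) -> None:
        self.facts[key] = value

    def add_turn(self, user: str, assistant: str) -> None:
        self.turns.append((user, assistant))

    def as_context(self) -> str:
        """Render facts and recent turns as text to prepend to the next prompt."""
        lines = [f"{key}: {value}" for key, value in self.facts.items()]
        for user, assistant in self.turns:
            lines.append(f"User: {user}")
            lines.append(f"Assistant: {assistant}")
        return "\n".join(lines)


memory = ConversationMemory(max_turns=2)
memory.remember("units", "metric")
memory.add_turn("How tall is Everest?", "About 8,849 metres.")
print(memory.as_context())
```

The window size is a tradeoff: a longer history gives the model more context but makes every prompt larger.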

5. Retrieval (RAG)

LangChain supports Retrieval-Augmented Generation (RAG), a technique where the model first fetches relevant data from an external source (e.g., a document store) before generating a response. This is how AI chatbots can give you answers based on your internal PDFs, knowledge bases, or support docs without training a new model.
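The retrieve-then-generate pattern can be sketched in a few lines. One loud assumption: this toy version ranks documents by shared words, whereas production RAG systems typically use embeddings and a vector database. The document names and contents are invented for the example.

```python
import re
from typing import List, Set

# Made-up knowledge base standing in for your internal documents.
DOCS = {
    "returns.md": "Items can be returned within 30 days of delivery for a full refund.",
    "shipping.md": "Standard shipping takes 3 to 5 business days.",
    "warranty.md": "Electronics carry a one year limited warranty.",
}


def tokenize(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, k: int = 1) -> List[str]:
    """Return the k documents sharing the most words with the question."""
    query_words = tokenize(question)
    ranked = sorted(
        DOCS.values(),
        key=lambda text: len(query_words & tokenize(text)),
        reverse=True,
    )
    return ranked[:k]


def build_rag_prompt(question: str) -> str:
    # The retrieved text is placed in the prompt; the model itself is not retrained.
    context = "\n".join(retrieve(question))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


print(build_rag_prompt("How long does shipping take?"))
```

The key point is the order of operations: fetch first, then generate, so the answer is grounded in text you control.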

Practical example: AI-powered customer support

Let’s say you want to build a smart support bot for an e-commerce site.

Without LangChain:

You connect your chatbot UI to GPT-4. It can answer general questions like “What is your return policy?” but struggles with tasks such as:

- “Where’s my order?”

- “Can you cancel item #123?”

- “Has my refund been processed?”

Unfortunately, the model can’t query your backend systems, and it forgets what the user said three messages ago.

With LangChain:

- The bot uses RAG to fetch return policy content from your docs

- It queries your order database using an SQL tool

- It remembers the user’s order ID from earlier in the conversation

- It decides whether to call the cancellation API

- It replies with a human-like explanation

In other words, now your support bot can handle real tasks effectively.

Overall, LangChain transforms a generic chatbot into a task-capable support assistant. By connecting the language model to data sources, tools, and memory, it enables your bot to understand, act, and respond with context, giving users fast, accurate, and helpful support, often without human intervention.
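To ground two of the behaviors from this example (querying the order database and remembering the order ID across messages), here is a small sketch using an in-memory SQLite database. The table layout, the sample orders, and the `SupportSession` class are assumptions made for illustration, and the replies are fixed strings rather than LLM output.

```python
import re
import sqlite3
from typing import Optional


def make_demo_db() -> sqlite3.Connection:
    # In-memory database with made-up orders, standing in for a real backend.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT)")
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?)",
        [(123, "shipped"), (456, "processing")],
    )
    return conn


def order_status_tool(conn: sqlite3.Connection, order_id: int) -> Optional[str]:
    """The 'SQL tool': a parameterized query the assistant is allowed to run."""
    row = conn.execute("SELECT status FROM orders WHERE id = ?", (order_id,)).fetchone()
    return row[0] if row else None


class SupportSession:
    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn
        self.order_id: Optional[int] = None  # remembered across messages

    def handle(self, message: str) -> str:
        match = re.search(r"\d+", message)
        if match:
            self.order_id = int(match.group())
        if self.order_id is None:
            return "Could you share your order number?"
        status = order_status_tool(self.conn, self.order_id)
        if status is None:
            return f"I could not find order {self.order_id}."
        return f"Order {self.order_id} is currently {status}."


session = SupportSession(make_demo_db())
print(session.handle("Where's my order?"))
print(session.handle("It's order #123"))
print(session.handle("Any update?"))
```

Note that the third message contains no order number, yet the session still answers about order 123 because the ID was stored earlier in the conversation.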

LangChain vs. alternatives: why not just code it all yourself?

Sure, you could wire up all these components manually: build prompt logic, write wrappers around APIs, manage session memory, and chain it all together. But doing so adds significant complexity and overhead.

LangChain simplifies this process, allowing you to focus on business logic instead of infrastructure. It’s not a black box; it’s flexible, transparent, and composable. Plus, it plays well with tools like:

- OpenAI, Anthropic, Mistral, or custom LLMs

- Pinecone, Weaviate, and other vector databases

- SQL, REST APIs, local functions, you name it

All things considered, the time saved on infrastructure alone can make the investment worthwhile.

Use cases where LangChain shines

LangChain is particularly useful in apps where context, memory, and tool use are essential. For example:

- Enterprise search. Build AI assistants that don’t just keyword-match. Instead, they understand the question, pull relevant information from internal documents, and deliver clear, accurate answers in plain English. This boosts employee productivity and reduces time spent digging through files.

- Finance automation. Let agents analyze financial statements, run calculations, and generate custom reports. With LangChain, you can create tools that reduce manual processing, minimize human error, and provide real-time insights for decision-making.

- Coding assistants. Go beyond autocomplete. LangChain enables LLMs to fetch documentation, write and execute code snippets, and help developers debug step-by-step, so you save hours of development time and accelerate project delivery.

- Healthcare tools. AI agents built with LangChain can summarize patient histories, pull in relevant research, and assist with clinical decision support. The aim is better-informed practitioners, streamlined workflows, and improved patient outcomes.

Anywhere a chatbot or assistant needs to think before speaking, LangChain fits. If your product needs an AI assistant that can reason, act, and adapt, go for LangChain and turn language models into capable digital coworkers, not just chatbots.

The tradeoffs: is there a catch?

LangChain is powerful, but it’s not always plug-and-play. It comes with complexity, especially when building agents. Some caveats include:

- Steeper learning curve. Compared to calling an LLM endpoint, there’s more architecture to consider.

- Debugging. Multi-step chains and agents can behave unpredictably. You’ll need logging and observability tools.

- Latency. Chaining tools and external calls introduces delay. You’ll need to optimize for performance.

However, if your use case is beyond “just a chatbot,” the tradeoff is usually worth it.
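For the debugging and latency caveats, a simple starting point is to wrap each step so that its name and duration are recorded. The sketch below is a generic Python pattern, not a LangChain feature; `traced` and the two example steps are hypothetical, and the `time.sleep` call merely simulates a slow external call.

```python
import time
from functools import wraps
from typing import Any, Callable, List, Tuple

TraceLog = List[Tuple[str, float]]


def traced(step: Callable[[Any], Any], log: TraceLog) -> Callable[[Any], Any]:
    """Wrap a step so each call appends (step name, elapsed seconds) to the log."""
    @wraps(step)
    def wrapper(value: Any) -> Any:
        start = time.perf_counter()
        try:
            return step(value)
        finally:
            # Recorded even if the step raises, which helps when debugging failures.
            log.append((step.__name__, time.perf_counter() - start))
    return wrapper


def slow_lookup(query: str) -> str:
    time.sleep(0.01)  # simulated external call
    return f"results for {query}"


def summarize(text: str) -> str:
    return text.upper()


log: TraceLog = []
value: Any = "refund policy"
for step in (traced(slow_lookup, log), traced(summarize, log)):
    value = step(value)

for name, seconds in log:
    print(f"{name}: {seconds * 1000:.1f} ms")
```

Even this much makes it easier to see which step in a chain is slow or misbehaving before reaching for a dedicated observability tool.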

Beyond 2025: LangChain + multi-agent systems

LangChain is rapidly evolving toward supporting multi-agent environments where multiple AI agents collaborate to solve tasks. Just imagine: one agent plans a project, another writes the code, and a third runs tests. It’s still early days, but LangChain’s architecture is already well suited to this kind of orchestration. That means you’re not just building an AI “brain.” You’re laying the foundation for a whole AI-powered team.

As of today, LangChain brings several advantages to multi-agent AI systems:

- Modularity. Easily define roles, tools, and tasks for each agent without monolithic code.

- Coordination. Agents can share context, delegate tasks, and combine outputs through structured chains.

- Scalability. As needs grow, you can introduce more specialized agents without rewriting your whole logic.

- Transparency. Clear logging and agent reasoning paths make the system debuggable and auditable.

- Tool interoperability. Agents can independently access APIs, databases, or even other AI models.

How Mitrix can help

At Mitrix, we offer AI/ML and generative AI development services to help businesses move faster, work smarter, and deliver more value. Our team builds AI agents designed around your needs, whether you’re looking to boost customer support, unlock insights from data, or streamline operations. Let’s create a robust AI agent tailored to transform your business!

Customer support agent

Delivers 24/7 customer assistance, resolves inquiries efficiently, addresses issues, and enhances overall customer satisfaction.

Healthcare assistant

Performs preliminary symptom assessments, organizes patient records, and provides accurate medical information.

Financial advisor

Delivers tailored investment advice, monitors market trends, and creates personalized financial plans.

Sales agent

Identifies and qualifies leads, streamlines sales processes, and drives growth by strengthening the sales pipeline.

Data analysis agent

Processes and interprets large datasets, delivers actionable real-time insights, and aids in strategic decision-making.

Virtual assistant

Organizes schedules, manages tasks, and provides timely reminders to enhance productivity.

Our expertise across business domains enables us to develop AI solutions aligned with your business model and goals. From managing inquiries to delivering personalized experiences, our agents build stronger relationships while improving response times and satisfaction. We provide:

- Custom AI agent design and integration

- AI agent strategy consulting

- Task automation and optimization

- Security, compliance, and ongoing support

Contact us today to discuss your AI solution!

Wrapping up

All in all, LangChain is more than just a developer tool; it’s a mindset shift. It reframes the way we think about integrating AI into software: not as a single-shot magic box, but as a reasoning agent that coordinates memory, logic, and data to produce something useful.

If you want to build an AI that feels smart (and doesn’t just sound smart), LangChain is what makes the difference between a clever chatbot and a competent assistant. It doesn’t replace the brain, but it gives it a body to act in the world.

By combining flexibility with structure, LangChain empowers developers to move beyond prototypes and build production-grade AI systems that adapt, interact, and evolve. As AI becomes embedded in more business-critical workflows, the ability to design thoughtful, tool-using, memory-aware agents won’t just be a nice-to-have: it’ll be a competitive edge.