As artificial intelligence continues to embed itself into the core of modern software, businesses face a new imperative: ensuring that AI systems are not just powerful, but also trustworthy. For developers, product owners, and decision-makers alike, trust hinges on reliability and explainability. Without these, even the most advanced AI models can sow confusion, misguide users, and expose companies to ethical and operational risks. In this article, you’ll discover:

- Why trust is the foundation of successful AI adoption

- How to design AI workflows that are resilient, auditable, and human-understandable

- What makes AI systems reliable in production, from data checks to fallback mechanisms

- How to implement layered explainability across models, predictions, and entire pipelines

- Why balancing performance with interpretability is essential, and how hybrid approaches help

- How cross-functional teams can collaborate to build trust

- What it takes to turn responsible AI into a competitive advantage, internally and in the market

- How Mitrix helps businesses operationalize AI with trustworthy architectures, RAG pipelines, and user-first design

The growing need for trust in AI workflows

In traditional software development, trust is built on deterministic logic. Code behaves the way it’s written. But in AI systems, especially those using machine learning or large language models (LLMs), outcomes are probabilistic. The same input can produce slightly different results, and decision-making often happens within a black box.

This unpredictability is a major concern when AI is used in high-stakes applications – healthcare, finance, recruitment, or legal compliance. Even in customer-facing use cases like chatbots or personalization engines, an unreliable or inexplicable output can harm user experience and brand trust.

To mitigate these risks, organizations must shift from building models in isolation to creating robust AI workflows: end-to-end systems that control, monitor, and explain AI behavior within a broader software architecture.

Defining reliable AI workflows

What does “reliable” mean in AI?

Reliability in AI refers to the model’s ability to consistently produce accurate, stable, and appropriate results in different contexts and over time. It also encompasses resilience, meaning how well the system handles data drift, adversarial inputs, or unexpected edge cases.

A reliable AI workflow includes:

- Data integrity checks. Ensuring training and inference data is accurate, relevant, and complete.

- Model validation and monitoring. Testing on real-world data to measure performance across edge cases and long-tail scenarios, then tracking that performance after deployment.

- Fallback mechanisms. Including human-in-the-loop designs or rule-based overrides for critical decisions.

- Version control and reproducibility. Keeping track of model versions, training data, and hyperparameters for reliable deployment and debugging.
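To make the checklist above more concrete, here is a minimal Python sketch that combines three of its items: a data integrity check, a confidence-based fallback to human review, and a model version stamped on every decision. The schema, the 0.8 confidence threshold, and the `risk-model-1.4.2` tag are hypothetical placeholders, and the model is assumed to be any callable that returns a probability.

```python
import math
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical input schema: required fields and their plausible ranges.
SCHEMA = {"income": (0.0, 10_000_000.0), "age": (18.0, 120.0)}
CONFIDENCE_THRESHOLD = 0.8          # assumption: tune per use case and risk level
MODEL_VERSION = "risk-model-1.4.2"  # hypothetical tag from a model registry


@dataclass
class Decision:
    outcome: str                        # "approve", "decline", or "human_review"
    reason: str
    model_version: str                  # recorded for reproducibility and debugging
    confidence: Optional[float] = None


def validate(record: dict) -> list:
    """Data integrity check: return a list of problems (empty if the record is clean)."""
    problems = []
    for name, (low, high) in SCHEMA.items():
        value = record.get(name)
        if value is None:
            problems.append(f"missing field: {name}")
        elif not isinstance(value, (int, float)) or math.isnan(value):
            problems.append(f"non-numeric field: {name}")
        elif not low <= value <= high:
            problems.append(f"out of range: {name}={value}")
    return problems


def decide(record: dict, model: Callable[[dict], float]) -> Decision:
    """Score a record, falling back to human review for bad input or low confidence."""
    problems = validate(record)
    if problems:
        # Fallback 1: never score malformed input; send it to a person instead.
        return Decision("human_review", "; ".join(problems), MODEL_VERSION)
    p_approve = model(record)  # model returns P(approve) in [0, 1]
    confidence = max(p_approve, 1.0 - p_approve)
    if confidence < CONFIDENCE_THRESHOLD:
        # Fallback 2: uncertain predictions are routed to a human reviewer.
        return Decision("human_review", "low model confidence", MODEL_VERSION, confidence)
    outcome = "approve" if p_approve >= 0.5 else "decline"
    return Decision(outcome, "model decision", MODEL_VERSION, confidence)
```

Routing uncertain or malformed cases to people rather than forcing a decision trades some automation for predictability, which is usually the right call when the decision is critical.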

Integrating reliability into development

To build reliable AI workflows, software teams should:

- Shift left on AI quality. Don’t wait until deployment to test for reliability. Integrate testing early, just like you would for UI or API behavior.

- Adopt MLOps best practices. Version control, CI/CD pipelines for models, automated retraining, and monitoring must be as rigorous as in traditional DevOps.

- Validate across diverse datasets. Models should be evaluated not just on accuracy but also fairness, bias, and robustness metrics.
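Slice-level evaluation is one simple way to act on the last point. The Python sketch below computes accuracy per subgroup and reports failures when any slice falls below a floor or when slices diverge too much. The thresholds (`min_accuracy=0.85`, `max_gap=0.05`) are illustrative assumptions, and an accuracy gap is only one of many possible fairness signals.

```python
from collections import defaultdict


def accuracy_by_slice(y_true, y_pred, groups):
    """Accuracy per subgroup, so a strong overall score can't hide a weak slice."""
    hits, totals = defaultdict(int), defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        totals[group] += 1
        hits[group] += int(truth == pred)
    return {group: hits[group] / totals[group] for group in totals}


def check_slices(y_true, y_pred, groups, min_accuracy=0.85, max_gap=0.05):
    """Return per-slice accuracy plus a list of failures suitable for failing a CI job."""
    per_slice = accuracy_by_slice(y_true, y_pred, groups)
    failures = [
        f"slice {group!r}: accuracy {acc:.2f} < {min_accuracy}"
        for group, acc in per_slice.items()
        if acc < min_accuracy
    ]
    # Large differences between slices are flagged even if every slice clears the floor.
    gap = max(per_slice.values()) - min(per_slice.values())
    if gap > max_gap:
        failures.append(f"accuracy gap between slices {gap:.2f} > {max_gap}")
    return per_slice, failures
```

In a shift-left setup, a CI test could assert that `failures` is empty, so a model that regresses on a particular segment blocks the merge just as a failing unit test would.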

Reliability isn’t a one-time checkbox; it’s a continuous effort that spans the entire model lifecycle.

Why explainability matters

If reliability is about consistent behavior, explainability is about making that behavior understandable. Imagine a predictive maintenance tool suggesting a critical system be shut down. Without an explanation, the user may ignore the alert – or worse, blindly trust a flawed prediction. Explainability helps humans interpret AI decisions, challenge incorrect assumptions, and make better-informed choices.

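In regulated industries, explainability isn’t just nice to have; it’s often a legal requirement. Under the GDPR, for example, people subject to solely automated decisions with legal or similarly significant effects are entitled to meaningful information about the logic involved, often described as a “right to explanation,” and similar frameworks impose comparable transparency duties.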

Layers of explainability

Explainability can be implemented at several layers in an AI workflow:

1. Model-level explainability

This focuses on understanding how the AI model works. For interpretable models (e.g., decision trees, linear regression), this is straightforward. For black-box models (e.g., deep neural networks or LLMs), techniques like SHAP, LIME, or integrated gradients can reveal which features influenced the output.
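To show what “which features influenced the output” means in practice, here is a brute-force Shapley value computation in Python. It’s a minimal sketch that assumes a single baseline input (features left out of a coalition take their baseline values), which is one of several conventions; it is not the `shap` library, which relies on approximations and model-specific algorithms to scale beyond a handful of features.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Sequence


def shapley_values(predict: Callable[[list], float], x: Sequence[float], baseline: Sequence[float]) -> list:
    """Exact Shapley attributions by enumerating every feature coalition.

    Features outside a coalition are replaced by their baseline value.
    Cost grows as 2^n, so this only suits models with a handful of features.
    """
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Standard Shapley weight for a coalition of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                with_i = [x[j] if j in coalition or j == i else baseline[j] for j in range(n)]
                without_i = [x[j] if j in coalition else baseline[j] for j in range(n)]
                phi[i] += weight * (predict(with_i) - predict(without_i))
    return phi
```

A useful sanity check is the efficiency property: the attributions sum to `predict(x) - predict(baseline)`, so the whole change in the prediction is accounted for across features.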

2. Prediction-level explainability

Here, the system provides just-in-time insights into why a specific decision was made. For example, a fraud detection model might say: “This transaction was flagged because it’s unusually high value and from a new location.”
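A prediction-level explanation like the fraud example can be produced as reason codes attached to each flag. The Python sketch below is hypothetical: the z-score rule, the threshold of 3 standard deviations, and the known-locations check are illustrative assumptions rather than a real fraud model.

```python
from statistics import mean, stdev


def explain_transaction(amount, location, history_amounts, known_locations, z_threshold=3.0):
    """Return a plain-language reason string, or None if nothing looks unusual.

    Rules and thresholds here are illustrative assumptions, not a real fraud model.
    """
    reasons = []
    if len(history_amounts) >= 2:
        typical, spread = mean(history_amounts), stdev(history_amounts)
        # Flag amounts far above this customer's usual spending.
        if spread > 0 and (amount - typical) / spread > z_threshold:
            reasons.append(f"it's unusually high value ({amount:,.2f} vs. a typical {typical:,.2f})")
    if location not in known_locations:
        reasons.append(f"it's from a new location ({location})")
    if not reasons:
        return None
    return "This transaction was flagged because " + " and ".join(reasons) + "."
```

In production, the reasons should come from what actually drove the model’s score (for example, its top feature attributions). Rules maintained separately from the model can drift out of sync with it and produce explanations that sound right but aren’t faithful.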

3. Workflow-level explainability

This includes transparency around the entire AI pipeline: what data was used, how it was cleaned, what thresholds were applied, and how uncertainty is managed. Think of it as documenting the “chain of reasoning” behind every output.
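One lightweight way to capture that chain of reasoning is to have the pipeline record each step as it runs. In this Python sketch, the data source (`crm_export.csv`), the model name (`churn-model-2.1`), the 0.7 threshold, and the 0.05 uncertainty band are all hypothetical; the point is that cleaning rules, thresholds, and uncertainty end up in a single serializable trace per run.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Any


@dataclass
class PipelineTrace:
    """Records each pipeline step with the details needed to reconstruct an output."""
    run_id: str
    steps: list = field(default_factory=list)

    def record(self, step: str, **details: Any) -> None:
        self.steps.append({"step": step, **details})

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2)


def run_pipeline(raw_rows, model, threshold=0.7, band=0.05, run_id="run-0001"):
    """Toy scoring pipeline; source and model names are hypothetical placeholders."""
    trace = PipelineTrace(run_id)
    trace.record("ingest", source="crm_export.csv", rows=len(raw_rows))

    clean = [row for row in raw_rows if row.get("feature") is not None]
    trace.record("clean", rule="drop rows with missing 'feature'", dropped=len(raw_rows) - len(clean))

    scores = [model(row) for row in clean]
    trace.record("score", model="churn-model-2.1", threshold=threshold)

    # Uncertainty: count scores too close to the threshold to be decided confidently.
    near = sum(1 for s in scores if abs(s - threshold) < band)
    trace.record("uncertainty", band=band, near_threshold=near)

    flagged = [row for row, s in zip(clean, scores) if s >= threshold]
    trace.record("decide", flagged=len(flagged))
    return flagged, trace
```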

Building explainability into software workflows

Design for human understanding

Explainability tools are only useful if they speak the user’s language. Tailor explanations based on the audience:

- Engineers need low-level insight into feature weights or token attributions.

- Executives need outcome summaries and risk indicators.

- End users need clear, concise explanations that help guide their next action.

Use visualizations, tooltips, or plain-language reports to present model reasoning in a digestible way.
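Here’s a minimal Python sketch of rendering the same feature attributions for each audience. The feature names, the risk bands (0.4 and 0.7), and the wording are hypothetical; in practice the attributions would come from an explainability method such as SHAP.

```python
def render_explanation(attributions: dict, prediction: float, audience: str, top_k: int = 3) -> str:
    """Render one set of feature attributions for different audiences."""
    # Rank features by the size of their contribution, regardless of sign.
    ranked = sorted(attributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    if audience == "engineer":
        # Full, signed attributions for debugging.
        return "; ".join(f"{name}={weight:+.3f}" for name, weight in ranked)
    if audience == "executive":
        # Outcome summary plus a coarse risk indicator (bands are assumptions).
        level = "high" if prediction >= 0.7 else "moderate" if prediction >= 0.4 else "low"
        return f"Risk level: {level} ({prediction:.0%}). Main driver: {ranked[0][0]}."
    if audience == "end_user":
        # Plain-language factors that pushed the score up, without raw numbers.
        drivers = [name.replace("_", " ") for name, weight in ranked if weight > 0][:top_k]
        if not drivers:
            return "No single factor stood out in this result."
        return "This result was mainly influenced by: " + ", ".join(drivers) + "."
    raise ValueError(f"unknown audience: {audience!r}")
```

Keeping one attribution source and varying only the presentation means the engineer’s view, the executive summary, and the user-facing message can’t contradict each other.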

Include audit trails

Track every step in your AI pipeline, from raw input to final output, and store the associated metadata for future reference. This is especially critical in sectors where decisions may later be challenged in court or during audits.
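A simple way to make an audit trail tamper-evident is to chain entries by hash, so each record commits to the one before it. The Python sketch below keeps entries in memory for illustration; a real system would persist them to durable, append-only storage. Hash chaining makes edits detectable rather than impossible, and it is strongest when the latest hash is also stored somewhere the pipeline itself can’t modify.

```python
import hashlib
import json
import time

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same content always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AuditLog:
    """In-memory, hash-chained audit trail; each entry commits to the one before it."""

    def __init__(self):
        self.entries = []

    def append(self, event: dict) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        # Store a copy so later changes by the caller don't silently alter the record.
        body = {"ts": time.time(), "event": json.loads(json.dumps(event)), "prev_hash": prev_hash}
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; an edited or reordered entry breaks it."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {key: entry[key] for key in ("ts", "event", "prev_hash")}
            if entry["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True
```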

Test for faithfulness

An explanation is only valuable if it accurately reflects the model’s reasoning. Be wary of plausible-sounding explanations that gloss over model complexity. Faithfulness testing checks that your explanations aren’t just user-friendly but actually reflect what drives the model’s output.
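One common family of faithfulness checks is deletion (or ablation) testing: if an explanation says a feature matters most, removing that feature should change the prediction more than removing the one it says matters least. This Python sketch assumes features can be “removed” by setting them to baseline values; passing it is a necessary sanity check, not a proof of faithfulness.

```python
from typing import Callable, Sequence


def deletion_check(
    predict: Callable[[list], float],
    x: Sequence[float],
    baseline: Sequence[float],
    attributions: Sequence[float],
    k: int = 1,
) -> dict:
    """Ablate the k most- and k least-attributed features and compare output changes.

    If an explanation is faithful, removing what it calls most important should
    move the prediction more than removing what it calls least important.
    """
    n = len(x)
    order = sorted(range(n), key=lambda i: abs(attributions[i]), reverse=True)

    def ablate(indices):
        # Replace the chosen features with their baseline values.
        return [baseline[i] if i in indices else x[i] for i in range(n)]

    original = predict(list(x))
    top_change = abs(original - predict(ablate(set(order[:k]))))
    bottom_change = abs(original - predict(ablate(set(order[-k:]))))
    return {
        "top_change": top_change,
        "bottom_change": bottom_change,
        "passes": top_change > bottom_change,
    }
```

An explanation that ranks features in a convincing order but fails this check is exactly the kind of plausible-sounding explanation the paragraph above warns about.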

Balancing performance with trust

In 2025, one of the core tensions in AI development is the trade-off between performance and interpretability. Complex models like transformer-based LLMs often outperform simpler ones, but they’re harder to explain. This creates a dilemma for engineering teams: should you sacrifice explainability for raw power?

The answer depends on the use case. For safety-critical or regulated environments, transparency must take precedence. For creative or exploratory applications, a “black box” might be acceptable, so long as it’s monitored and constrained.

Hybrid approaches are emerging to bridge the gap. For example:

- Distillation. Using an interpretable model to approximate the predictions of a complex one.

- Rule-based wrappers. Applying business logic filters on top of probabilistic models.

- Agent architectures. Designing AI workflows as modular agents where each component can be logged, explained, and audited independently.
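Distillation is only as useful as its fidelity, meaning how often the interpretable model agrees with the complex one. The Python sketch below distills a black box into a single readable rule and reports that agreement rate. A one-rule decision stump is a deliberate simplification; in practice teams often train a shallow decision tree (for example, scikit-learn’s `DecisionTreeClassifier` with a small `max_depth`) on the black box’s predictions.

```python
from typing import Sequence


def fit_stump(X: Sequence[Sequence[float]], teacher_labels: Sequence[int]):
    """Distill a black box into one readable rule: 'predict 1 if feature f >= t' (or '< t').

    Returns (feature, threshold, predicts_1_above, fidelity), where fidelity is the
    share of rows on which the rule agrees with the teacher's labels.
    """
    best = None
    for f in range(len(X[0])):
        for t in sorted({row[f] for row in X}):
            for above in (True, False):
                # above=True: predict 1 when row[f] >= t; above=False: when row[f] < t.
                preds = [int((row[f] >= t) == above) for row in X]
                agree = sum(p == y for p, y in zip(preds, teacher_labels))
                if best is None or agree > best[3]:
                    best = (f, t, above, agree)
    f, t, above, agree = best
    return f, t, above, agree / len(X)
```

If fidelity is low, the surrogate’s rule shouldn’t be presented as an explanation of the original model.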

Pro tip

When building AI systems, don’t treat performance and trust as mutually exclusive. Instead of choosing one over the other, aim to design for layered transparency:

- Start with explainability by default in domains where trust is non-negotiable, such as healthcare, finance, or legal tech.

- Use monitoring tools to track and validate predictions from black-box models in real time.

- Build modular workflows using agent architectures to isolate, audit, and improve individual steps.

- Document decisions made during model selection and training, so both technical and non-technical stakeholders can understand the “why” behind the system.
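For the monitoring point above, one widely used drift signal is the population stability index (PSI), which compares the distribution of recent model scores against a reference window: the sum over bins of (actual share − expected share) × ln(actual share / expected share). The Python sketch below assumes scores are numeric; the ten bins and the 0.1 and 0.25 warning and alert levels are a common rule of thumb, not a standard.

```python
import math


def population_stability_index(reference, current, n_bins=10, eps=1e-6):
    """PSI between a reference window and a live window of model scores.

    Bins come from the reference range; eps keeps empty bins from causing log(0).
    """
    low, high = min(reference), max(reference)
    width = (high - low) / n_bins or 1.0  # guard against a constant reference

    def proportions(values):
        counts = [0] * n_bins
        for v in values:
            index = int((v - low) / width)
            counts[min(max(index, 0), n_bins - 1)] += 1  # clamp values outside the reference range
        return [max(count / len(values), eps) for count in counts]

    expected, actual = proportions(reference), proportions(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def drift_status(reference, current, warn=0.1, alert=0.25):
    """Map PSI to a status using a common rule of thumb (thresholds are assumptions)."""
    psi = population_stability_index(reference, current)
    if psi >= alert:
        return "alert", psi
    if psi >= warn:
        return "warn", psi
    return "ok", psi
```

Run on a sliding window of live predictions, a check like this can warn that the inputs a black-box model sees have shifted, even before ground-truth labels arrive to measure accuracy directly.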

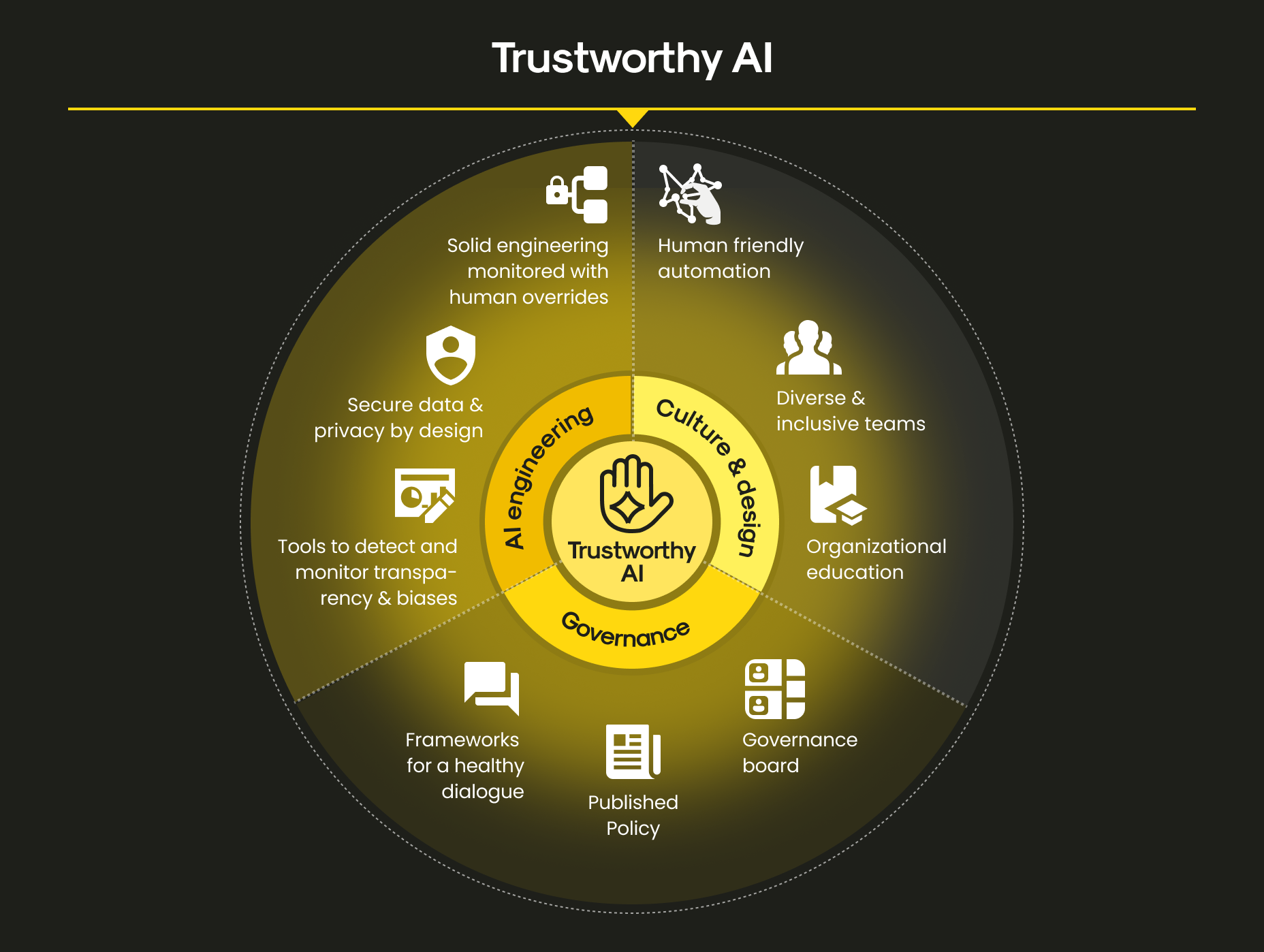

Building trust is a team sport

Creating reliable and explainable AI workflows isn’t just a technical challenge; it’s an organizational one. Success depends on collaboration across roles:

- Data scientists must build models with trust in mind, not just accuracy.

- Software engineers must integrate monitoring, fallback, and explanation systems into production code.

- Product managers must consider explainability in UX design and customer communication.

- Compliance teams must assess legal and ethical implications early in the development cycle.

This interdisciplinary effort is best guided by an AI Center of Excellence (CoE): a cross-functional team dedicated to defining standards, sharing best practices, and overseeing governance.

Responsible AI as a competitive advantage

Ultimately, trust isn’t just about compliance or ethics; it’s a competitive advantage. As users become more informed about, and more skeptical of, AI-driven tools, companies that can demonstrate transparency, reliability, and fairness will be better positioned to win market share.

Trust also accelerates internal adoption. Teams are far more likely to embrace AI tools when they understand how they work and feel confident in their consistency. That confidence reduces resistance to change and fosters a culture of innovation, which in turn paves the way for faster experimentation, better decision-making, and stronger business outcomes.

How Mitrix can help

At Mitrix, we offer AI/ML and generative AI development services to help businesses move faster, work smarter, and deliver more value. We help companies go beyond proof-of-concept AI, focusing on solutions that aren’t just viable but valuable, trusted, and adopted.

We don’t just build models. Our team builds intelligent systems that work where it matters most: in your day-to-day operations, with your real users, under real constraints.

Our approach to AI development includes:

- Persistent context and memory design

- RAG systems that blend LLMs with real-time data

- Session-aware UX and interaction design

- Monitoring tools for drift, feedback, and behavior over time

Whether you’re building a customer support agent, healthcare assistant, or analytics copilot, we help you avoid context collapse, so your AI performs predictably, safely, and effectively. Granted, AI isn’t magic. But with the right architecture and guardrails, it can feel like it is. Do you need help architecting AI systems that keep their head in the game? Let’s talk!

Summing up

As AI becomes embedded in every layer of the software stack, trust will be the cornerstone of successful adoption. That trust must be earned through systems that are not only high-performing, but also reliable and explainable.

For businesses building AI-powered solutions, the message is clear: don’t just develop smarter models; develop smarter workflows. Create systems that users can rely on, question, and understand. Because in the age of intelligent software, transparency isn’t optional; it’s your license to operate.