In the age of AI-driven everything, integrating machine learning into production is a business imperative. MLOps (Machine Learning Operations) promised to industrialize the deployment of ML models. It promised automation and scale. But for many companies, the reality has been, well, stickier.

The hidden challenge in machine learning isn’t training the model; it’s everything that surrounds it. The real complexity lies beneath the surface: in the intricate web of custom connectors, pipelines, microservices, data validation scripts, and integration code hastily assembled to support deployment. In this article, you’ll discover:

- What the “integration death” phenomenon means and why it’s one of the most overlooked threats in MLOps today

- The five key reasons why custom connectors become long-term liabilities instead of short-term solutions

- A strategic roadmap to escape the integration death spiral, covering standards, observability, automation, and more

- Why “composable MLOps” is emerging as the new gold standard for scalable, maintainable ML systems

- Pro tips for future-proofing your ML stack, even when engineers rotate out or systems evolve

- How Mitrix helps organizations break free from custom connector chaos and build MLOps architectures that scale, adapt, and deliver lasting value

What is integration death?

Integration death occurs when the lifecycle of a machine learning system is driven less by innovation or business value and more by the ongoing maintenance of fragile, interdependent components. Each update (whether it’s a new model version, an API schema adjustment, or an infrastructure change) can destabilize the entire architecture.

In many cases, this architecture was assembled under pressure by a single overextended engineer, stitching together a Snowflake data warehouse, a Kafka stream, a custom Flask application, a monitoring dashboard, and a retraining pipeline with ad hoc code. More often than not, documentation is sparse or nonexistent. As for reliability, well, continued functionality is more a matter of luck than design.

MLOps: dream vs. reality

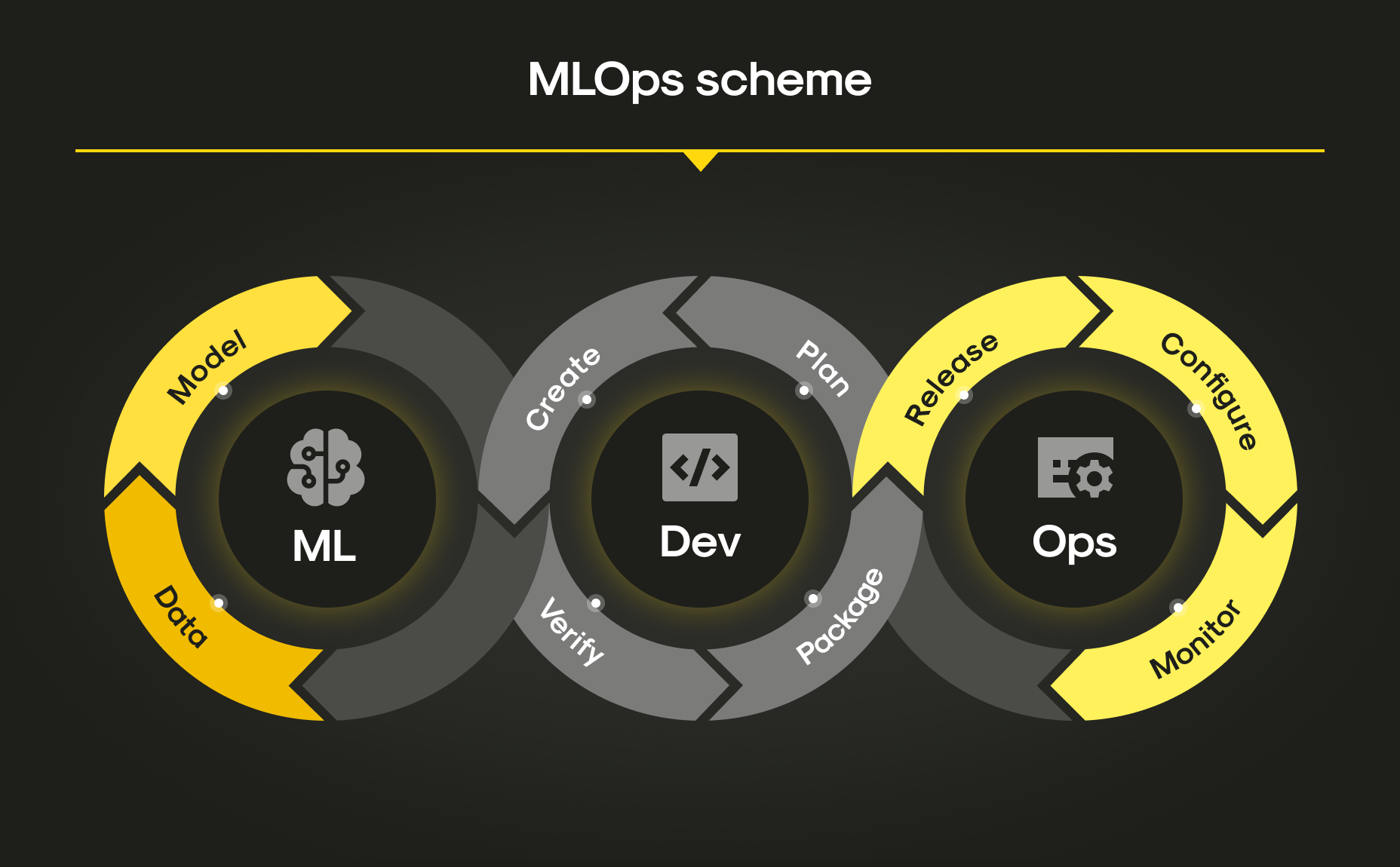

MLOps scheme

When companies invest in MLOps, they often envision a future of elegant automation and smooth scalability. It’s the promise of industrialized machine learning: streamlined processes, reproducibility, and confidence in every deployment. But reality tends to be harsh.

The dream:

- One-click deployments

- Scalable pipelines

- Model versioning with rollback

- Reusable components

- Automated monitoring and alerting

The reality:

- A dozen Python scripts running in cron jobs

- Homegrown APIs that don’t follow any spec

- Teams afraid to update TensorFlow versions

- Data scientists manually triggering jobs from Slack

- Production models depending on a YAML file named temp-final-v3-new-fix.yaml

The outcome

Unfortunately, this isn’t just technical debt; it’s integration quicksand. To escape this downward spiral, organizations must treat integration as a first-class citizen of MLOps. That means investing in composable architectures, enforcing interface standards, and planning for change as a constant. In the end, sustainable machine learning is about building systems that can adapt, scale, and endure.

Why custom connectors become maintenance nightmares

Custom connectors (aka the adapters and middleware that help disparate systems talk to each other) start with good intentions. They’re quick to write, seemingly harmless, and solve an immediate need. But over time, they metastasize. Here’s why they’re so dangerous:

1. They scale poorly

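Let’s be real: one custom connector is manageable and ten are tolerable. But what about fifty? Now you’re spending more time patching integrations than training models. Each additional connector adds code to maintain, and because connectors often share schemas, credentials, and upstream systems, the number of ways they can break one another grows faster than the number of connectors itself.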
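To put rough numbers on that growth, here is a back-of-the-envelope sketch. It assumes the worst case, where any two connectors can affect each other (through a shared schema, credential, or upstream system), so the number of potential pairwise interactions is n(n - 1)/2. Real stacks are rarely fully connected, so treat the results as upper bounds, not measurements.

```python
def potential_interactions(n_connectors: int) -> int:
    """Worst-case number of connector pairs that could affect each other: n * (n - 1) / 2."""
    if n_connectors < 0:
        raise ValueError("connector count must be non-negative")
    return n_connectors * (n_connectors - 1) // 2


for n in (1, 10, 50):
    print(f"{n:>2} connectors -> up to {potential_interactions(n)} pairwise interactions")
```

With 1, 10, and 50 connectors this gives 0, 45, and 1,225 potential pairs: a fivefold increase in connectors from 10 to 50 means roughly a 27-fold increase in possible interactions.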

2. They’re tribal knowledge traps

Most custom connectors are written by one person, for one use case, with one kind of data in mind. When that person leaves (or simply forgets what they wrote), the connector becomes a black box, and reverse-engineering it becomes the team’s next hackathon.

3. Version drift

APIs evolve, authentication mechanisms change, and data formats shift. But most custom connectors are built for the now, not the later. Over time, connectors break silently or behave unpredictably, forcing constant firefighting.
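One inexpensive defense against silent drift is to have each connector compare the payload it actually receives with the fields it was written against, and fail loudly when they diverge. The sketch below assumes JSON-like dictionaries; the field names, `EXPECTED_FIELDS`, and the `SchemaDriftError` exception are hypothetical.

```python
from typing import Any, Mapping


class SchemaDriftError(RuntimeError):
    """Raised when an upstream payload no longer matches what the connector expects."""


# Hypothetical fields and types this connector was written against.
EXPECTED_FIELDS: dict[str, type] = {"user_id": int, "product_id": str, "amount": float}


def check_drift(payload: Mapping[str, Any],
                expected: Mapping[str, type] = EXPECTED_FIELDS) -> list[str]:
    """Fail loudly on missing or retyped fields; return new, unknown fields so they can be logged."""
    missing = sorted(set(expected) - set(payload))
    wrong_type = sorted(
        name for name, typ in expected.items()
        if name in payload and not isinstance(payload[name], typ)
    )
    if missing or wrong_type:
        raise SchemaDriftError(f"missing={missing} wrong_type={wrong_type}")
    # Additive changes are reported rather than raised.
    return sorted(set(payload) - set(expected))
```

New fields are returned instead of raised because additive changes are often backward compatible; whether to treat them as errors is a policy decision for each team.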

4. They lack observability

Unfortunately, custom glue code rarely includes proper logging, metrics, or error handling. When something breaks, good luck figuring out where, why, or when, especially when the incident alert just says “Model output missing.”

5. Security and compliance risks

Remember that quick fix you wrote two quarters ago? It might be sending sensitive data to an external service without encryption. Multiply that risk by every connector you’ve built, and the conclusion is clear: you need a security audit.
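Some guardrails can be enforced in code rather than left to memory. The minimal sketch below assumes a connector that sends data to an external endpoint: it refuses non-HTTPS URLs and reads its credential from an environment variable instead of hardcoding it. The `prepare_request` function and the `CONNECTOR_API_TOKEN` variable name are hypothetical, and checks like these complement an audit rather than replace it.

```python
import os
from urllib.parse import urlparse


def prepare_request(endpoint: str, token_env_var: str = "CONNECTOR_API_TOKEN") -> dict[str, str]:
    """Validate the endpoint and build auth headers without embedding secrets in code."""
    # Refuse plain HTTP so data is never sent unencrypted by accident.
    if urlparse(endpoint).scheme != "https":
        raise ValueError(f"refusing to send data over an unencrypted connection: {endpoint}")
    # Secrets come from the environment (or a secrets manager), never from source code.
    token = os.environ.get(token_env_var)
    if not token:
        raise RuntimeError(f"credential {token_env_var} is not set")
    return {"Authorization": f"Bearer {token}"}
```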

Case study: from quick fix to ongoing crisis

Consider a fictional company that provides an e-commerce analytics platform. Its ML team rolled out a product recommendation model connected to the existing infrastructure via custom scripts:

- A Python connector pulled purchase history from a PostgreSQL database.

- Another pushed recommendations into their web platform via a REST API.

- A third retrieved user activity from an S3 bucket for weekly retraining.

You might be wondering: “So what were the results?” Well, all of this worked for about two months. Then PostgreSQL was upgraded, the API schema changed, and the S3 access keys expired. On top of that, the engineer who wrote the scripts transferred to another team. The company spent weeks fixing connectors instead of improving its model. Eventually, management paused all new ML initiatives until the existing pipelines could be “hardened.”

How to escape the integration death spiral

Realistically, some integrations are unavoidable. But you can design your MLOps stack to reduce fragility, improve maintainability, and regain control. Here’s how:

1. Adopt standards, not just speed

- Use open, versioned specifications and formats, such as OpenAPI for APIs and Parquet/Arrow for data.

- Favor tools and frameworks with strong community support and plugin ecosystems (e.g., MLflow, Feast, Tecton, Kubeflow).

- Apply data contracts to enforce schema consistency across teams.
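A data contract can start as something as small as a versioned, typed record definition that producer and consumer teams share and validate against. The sketch below uses a frozen dataclass; the `PurchaseEventV1` name, its fields, and the `from_record` helper are hypothetical, and dedicated contract tooling typically adds much more (registries, compatibility checks, ownership metadata).

```python
from dataclasses import dataclass, fields
from typing import Any, Mapping


@dataclass(frozen=True)
class PurchaseEventV1:
    """Hypothetical contract shared by the teams producing and consuming purchase events."""

    CONTRACT_VERSION = "1.0"  # plain class attribute (no annotation), so not a dataclass field

    user_id: int
    product_id: str
    amount: float

    @classmethod
    def from_record(cls, record: Mapping[str, Any]) -> "PurchaseEventV1":
        """Build an instance, rejecting records that break the contract."""
        values = {}
        for f in fields(cls):
            if f.name not in record:
                raise ValueError(f"contract {cls.CONTRACT_VERSION}: missing field '{f.name}'")
            if not isinstance(record[f.name], f.type):
                raise TypeError(
                    f"contract {cls.CONTRACT_VERSION}: '{f.name}' should be {f.type.__name__}"
                )
            values[f.name] = record[f.name]
        return cls(**values)
```

Changing a field’s type or removing a field would then mean publishing a `PurchaseEventV2` rather than silently editing V1, which gives consumers a clear migration point.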

2. Invest in abstractions

- Instead of one-off connectors, build reusable integration layers (e.g., internal SDKs or wrapper libraries).

- Containerize data ingestion and model serving components for modular updates.
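A reusable integration layer usually starts with a shared interface that every connector implements, so cross-cutting behavior such as retries lives in one place instead of being reinvented in each script. The sketch below is one possible shape for such an internal SDK; the `Connector` base class, its `_fetch`/`fetch` methods, the retry parameters, and `InMemoryConnector` are all hypothetical.

```python
import time
from abc import ABC, abstractmethod
from typing import Any, Optional


class Connector(ABC):
    """Base class for a hypothetical internal integration SDK."""

    name: str = "unnamed"

    def __init__(self, retries: int = 3, backoff_seconds: float = 0.5) -> None:
        self.retries = retries
        self.backoff_seconds = backoff_seconds

    @abstractmethod
    def _fetch(self) -> list[dict[str, Any]]:
        """Source-specific logic (database query, API call, file read)."""

    def fetch(self) -> list[dict[str, Any]]:
        """Shared behavior every connector inherits: retries with linear backoff."""
        last_error: Optional[Exception] = None
        for attempt in range(1, self.retries + 1):
            try:
                return self._fetch()
            except Exception as exc:  # in practice, catch only errors known to be retryable
                last_error = exc
                if attempt < self.retries:
                    time.sleep(self.backoff_seconds * attempt)
        raise RuntimeError(f"{self.name}: failed after {self.retries} attempts") from last_error


class InMemoryConnector(Connector):
    """Toy connector for tests and demos: only the source-specific part is written."""

    name = "in-memory"

    def __init__(self, rows: list[dict[str, Any]], **kwargs: Any) -> None:
        super().__init__(**kwargs)
        self.rows = rows

    def _fetch(self) -> list[dict[str, Any]]:
        return list(self.rows)
```

Subclasses stay small, and improving retry or logging behavior in the base class upgrades every connector at once.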

3. Make observability non-negotiable

- Bake in logging, tracing, and monitoring from the start.

- Instrument pipelines with OpenTelemetry and track their health in a centralized dashboard such as Grafana.
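Even before adopting OpenTelemetry, a thin wrapper can make every pipeline step emit the same structured log line with its name, outcome, and duration. That is exactly the context missing when an alert only says “Model output missing.” The sketch below uses only Python’s standard `logging` module; the `observed` decorator, the `pipeline` logger name, and the `score_batch` step are hypothetical.

```python
import functools
import json
import logging
import time
from typing import Any, Callable, TypeVar

F = TypeVar("F", bound=Callable[..., Any])

logger = logging.getLogger("pipeline")


def observed(step: str) -> Callable[[F], F]:
    """Log one JSON line per call with the step name, status, and duration in milliseconds."""
    def decorator(func: F) -> F:
        @functools.wraps(func)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            start = time.perf_counter()
            status = "error"  # stays "error" if func raises; the exception still propagates
            try:
                result = func(*args, **kwargs)
                status = "ok"
                return result
            finally:
                elapsed_ms = round((time.perf_counter() - start) * 1000, 2)
                log = logger.info if status == "ok" else logger.error
                log(json.dumps({"step": step, "status": status, "duration_ms": elapsed_ms}))
        return wrapper  # type: ignore[return-value]
    return decorator


@observed("score_batch")
def score_batch(rows: list[float]) -> list[float]:
    return [r * 2 for r in rows]
```

Calling `logging.basicConfig(level=logging.INFO)` and then `score_batch([1.0, 2.0])` prints one JSON line per call; because the format is consistent, a log aggregator can chart durations and error rates per step without custom parsing.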

4. Set a connector lifecycle policy

- Treat custom connectors like any other product: version them, document them, test them.

- Sunset old connectors systematically.

- Rotate connector maintainers to prevent knowledge silos.
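A lifecycle policy is easier to enforce when it lives in code. One lightweight approach, sketched here as an assumption rather than a standard practice, is a small registry that records each connector’s version, owning team, and sunset date, rejects unregistered connectors, and warns when a retired one is still being called. The registry entries, names, dates, and the `SunsetWarning` class are hypothetical.

```python
import warnings
from dataclasses import dataclass
from datetime import date
from typing import Optional


class SunsetWarning(UserWarning):
    """Emitted when code still calls a connector past its sunset date."""


@dataclass(frozen=True)
class ConnectorRecord:
    name: str
    version: str
    owner: str                     # a team alias rather than one person's name
    sunset: Optional[date] = None  # after this date the connector should no longer be used


# Hypothetical registry; in practice it might live in a version-controlled config file.
REGISTRY = {
    "postgres_purchases": ConnectorRecord("postgres_purchases", "2.1.0", "ml-platform"),
    "legacy_s3_activity": ConnectorRecord(
        "legacy_s3_activity", "0.9.3", "ml-platform", sunset=date(2024, 1, 31)
    ),
}


def check_lifecycle(name: str, today: Optional[date] = None) -> ConnectorRecord:
    """Fail on unregistered connectors and warn when a sunset date has passed."""
    today = today or date.today()
    record = REGISTRY.get(name)
    if record is None:
        raise KeyError(f"connector '{name}' is not registered; add it before using it")
    if record.sunset is not None and today > record.sunset:
        warnings.warn(
            f"{name} v{record.version} was sunset on {record.sunset.isoformat()}; "
            f"contact {record.owner}",
            SunsetWarning,
            stacklevel=2,
        )
    return record
```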

5. Automate everything you can

- CI/CD pipelines should cover not just models, but data validation, dependency updates, and test coverage.

- Tools like DVC or Dagster can track data lineage and ensure reproducibility.
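The core idea behind data versioning tools such as DVC is to identify each tracked file by a hash of its contents, so a pipeline can flag, or refuse to run on, data that no longer matches what a model was trained on. The sketch below shows only that idea with the standard library; it is not DVC’s API, and the function names and the JSON lock-file format are hypothetical.

```python
import hashlib
import json
from pathlib import Path


def file_digest(path: Path, chunk_size: int = 1 << 20) -> str:
    """SHA-256 of a file's contents, read in chunks so large files don't fill memory."""
    h = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def write_lock(paths: list[Path], lock_path: Path) -> None:
    """Record the current digest of each input, e.g. right after a training run."""
    lock = {str(p): file_digest(p) for p in paths}
    lock_path.write_text(json.dumps(lock, indent=2, sort_keys=True))


def changed_inputs(lock_path: Path) -> list[str]:
    """Return inputs that are missing or whose contents differ from the recorded digests."""
    lock = json.loads(lock_path.read_text())
    return sorted(
        p for p, digest in lock.items()
        if not Path(p).exists() or file_digest(Path(p)) != digest
    )
```

A CI job could call `changed_inputs` and fail the build whenever the list is non-empty, forcing an explicit decision to retrain or to update the lock file.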

6. Embed DevOps into ML teams

- Cross-functional teams reduce integration guesswork.

- Teach data scientists about CI/CD, and teach DevOps teams about ML lifecycle needs.

The rise of “composable MLOps”

As the field matures, many teams are shifting from ad hoc glue to composable MLOps, where tools are selected like Lego bricks, each with a clear contract, monitoring layer, and integration interface. This includes:

- Feature stores that standardize access to training and inference data

- Model registries that track versions, metrics, and deployment readiness

- Orchestration frameworks (like Airflow or Prefect) that control pipeline execution

- Agent-based architectures where components communicate through events or APIs, reducing tight coupling
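The loose coupling that event-driven architectures provide can be illustrated with a tiny in-process publish/subscribe bus: producers publish named events without knowing who consumes them, so a new alerting or retraining component can be added without touching existing code. This is a toy sketch; in production the bus would typically be a message broker such as Kafka, and the `EventBus` class and event names here are hypothetical.

```python
from collections import defaultdict
from typing import Any, Callable

Handler = Callable[[dict[str, Any]], None]


class EventBus:
    """Minimal in-process pub/sub: publishers and subscribers only share event names."""

    def __init__(self) -> None:
        self._subscribers: defaultdict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, event: str, handler: Handler) -> None:
        self._subscribers[event].append(handler)

    def publish(self, event: str, payload: dict[str, Any]) -> int:
        """Deliver the payload to every subscriber; return how many handlers ran."""
        handlers = self._subscribers.get(event, [])
        for handler in handlers:
            handler(payload)
        return len(handlers)
```

For example, a drift monitor might publish `"model.drift_detected"` with `{"model": "recommender"}`; a retraining job and an alerting hook can each subscribe to that event independently, and neither needs to know the other exists.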

Composable MLOps means less time chasing bugs and more time shipping value.

Pro tip: build for rotation

Design every component with the assumption that the person who built it will be gone in six months. That means:

- Write docstrings and README files.

- Build health checks into connectors.

- Use linters and pre-commit hooks.

- Don’t rely on “Why bother, Alex said this was safe” as a deployment policy.
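A connector health check doesn’t need to be elaborate to help the next maintainer. It only has to report, in a machine-readable way, whether the connector can reach its source and whether its data is fresh. The sketch below assumes each connector exposes a cheap “ping” callable and knows when it last received data; the `ConnectorHealth` type, `check_connector` function, and 24-hour staleness threshold are hypothetical choices.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Callable, Optional


@dataclass
class ConnectorHealth:
    name: str
    reachable: bool
    last_data_at: Optional[datetime]
    max_staleness: timedelta = timedelta(hours=24)

    def status(self, now: Optional[datetime] = None) -> str:
        """Return 'ok', 'stale', or 'down' so dashboards and alerts can act on it."""
        if not self.reachable:
            return "down"
        now = now or datetime.now(timezone.utc)
        if self.last_data_at is None or now - self.last_data_at > self.max_staleness:
            return "stale"
        return "ok"


def check_connector(name: str, ping: Callable[[], bool],
                    last_data_at: Optional[datetime]) -> ConnectorHealth:
    """Run a connector's ping without letting its exceptions escape the health check."""
    try:
        reachable = bool(ping())
    except Exception:
        reachable = False
    return ConnectorHealth(name, reachable, last_data_at)
```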

How Mitrix can help

At Mitrix, we offer AI/ML and generative AI development services to help businesses move faster, work smarter, and deliver more value. We help businesses go beyond proof-of-concept AI, building solutions that aren’t just viable but also valuable, trusted, and adopted.

Our team specializes in helping organizations break free from the integration quicksand. Our engineers design MLOps architectures that emphasize scalability, maintainability, and future-proofing, not just short-term fixes.

- Connector strategy and audit: We start by analyzing your existing integrations and identifying where custom connectors are draining time and resources. Then, we recommend consolidation or replacement with standardized, low-maintenance alternatives.

- Modular, agent-based architecture: Instead of one-off solutions, we build modular workflows using reusable components and event-driven communication. This reduces interdependency chaos and improves observability.

- Custom connectors done right: When custom connectors are truly necessary, we build them with robust documentation, automated testing, and error monitoring to reduce downstream issues.

- End-to-end MLOps lifecycle support: From data pipelines to model serving and governance, we help you implement MLOps best practices that scale with your business and stay sane under load.

With Mitrix, you don’t just survive the integration mess; you evolve past it. Let’s build AI workflows that work with you, not against you. Contact us today!

Wrapping up

Machine learning should serve as a catalyst for business progress, not burden engineers with the continual maintenance of unstable and ad hoc system integrations. As powerful as MLOps is, its true potential is only unlocked when integration is an enabler, not a liability. Avoid the trap of custom connector chaos: prioritize composability, enforce standards, and build for scale. And above all, remember: if you need a diagram just to explain your ML stack to a new hire, it might be time to simplify.

When complexity starts slowing you down instead of speeding you up, it’s a signal to re-evaluate. Mature MLOps isn’t about having the most elaborate stack; it’s about having a reliable, maintainable, and well-understood one. That means choosing tools that play well together, documenting decisions, and resisting the temptation to over-engineer every edge case. In the long run, simplicity scales better than heroics.