It’s fair to say that the past few years have reshaped natural language processing (NLP) and AI‑driven applications. Nowadays, businesses face a pivotal question: “What’s the best way to harness large language models (LLMs) for their specific needs?” Three primary approaches dominate the landscape: LLM fine‑tuning, retrieval‑augmented generation (RAG), and AI agents. Each has unique strengths, trade‑offs, and implications for cost, scalability, and control. The differences, use cases, and a practical LLM comparison are essential for decision-makers seeking clarity. Key considerations include LLM leaderboard rankings, LLM benchmark performance, and best practices for LLM training, all of which guide informed choices. In this article, you’ll learn:

- Why LLM fine‑tuning, RAG, and AI agents matter for business AI.

- How fine‑tuning embeds expertise, ensures consistency, and supports compliance.

- How RAG delivers fresh, explainable insights without retraining.

- How agents automate multi-step workflows across systems.

- Cost, scalability, and hybrid strategies for real-world adoption.

- Practical steps for piloting, benchmarking, and scaling anything LLM.

- How Mitrix helps implement tailored LLM solutions with AI agents, fine-tuning, and RAG.

Why the comparison matters

Naturally, not all AI problems need the same solution. Some demand custom expertise embedded in the model (fine‑tuning). Others rely on real‑time access to fresh or proprietary data (RAG). And increasingly, enterprises deploy multi‑step AI agents that combine reasoning, planning, and tool use. Without a clear framework, teams risk over-engineering or under-delivering. That’s where a practical comparison helps. It sharpens decision-making by matching solutions to real business needs. This practice also prevents wasted investment in approaches that don’t scale or align with long-term goals.

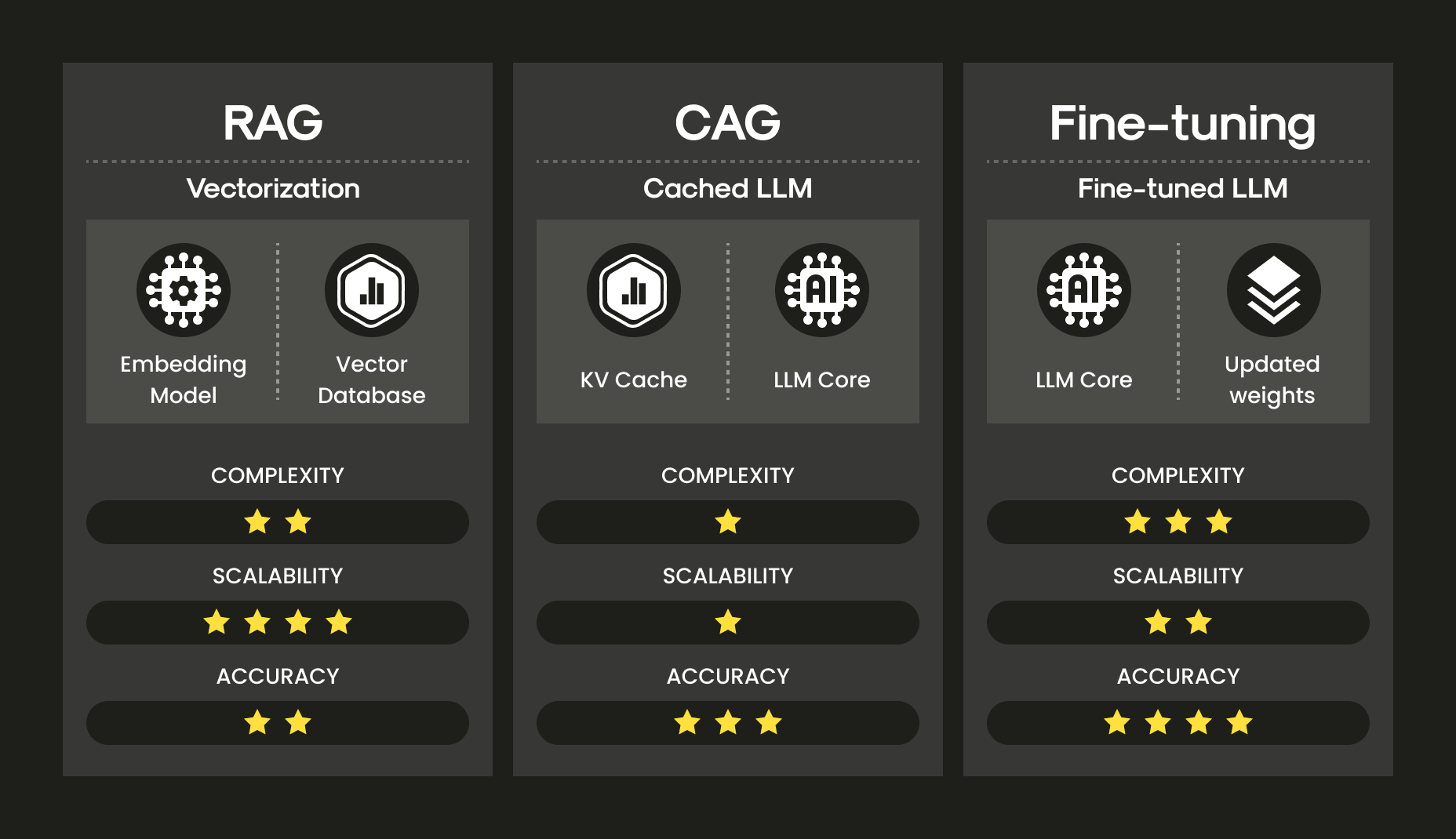

RAG/CAG/Fine-tuning comparison

Key takeaway

Fine-tuning, RAG, and agents each solve different categories of problems, and no single method is a universal answer. Fine-tuning shines when domain expertise must be baked into the model itself. RAG ensures real-time accuracy by grounding outputs in fresh or proprietary data. Agents excel when tasks demand multi-step reasoning and tool orchestration. The real value comes from knowing when to use each (or how to combine them) so that your AI strategy is efficient, scalable, and aligned with business objectives.

LLM fine‑tuning: embedding expertise into the model

Fine‑tuning refers to adjusting a pre‑trained model on a narrower dataset so that it better aligns with a company’s domain, style, or compliance needs.

Advantages

- Custom expertise. Embeds industry‑specific knowledge (e.g., legal, medical, or financial terminology).

- Consistency. Produces more predictable outputs for repetitive, structured tasks.

- Data security. Keeps sensitive workflows internal when paired with private LLM training pipelines.

Challenges

- Cost. Training and hosting fine‑tuned models can be expensive.

- Maintenance. Models can become stale if regulations or data shift.

- Complexity. Requires understanding of how to finetune LLM effectively.

Best practices

If you’re exploring how to fine‑tune LLM systems, start with:

- Curated datasets. Quality beats quantity: clean, representative samples matter most.

- Instruction tuning. Aligning outputs with human preferences.

- Benchmarking. Use an LLM leaderboard or LLM benchmark to measure progress against baselines.

Use cases

- Customer service with domain‑specific compliance.

- Contract analysis in law firms.

- Clinical decision support in healthcare.

Key takeaway

In a nutshell, LLM fine-tuning shines when stability, compliance, and domain expertise are non-negotiable. It embeds specialized knowledge directly into the model, delivers consistent outputs, and safeguards sensitive data, though at the cost of higher training and maintenance demands. For industries with strict requirements, it’s the most reliable way to align AI with business realities.

Retrieval‑augmented generation (RAG): injecting fresh knowledge

Unlike fine‑tuning, RAG doesn’t alter the base model. Instead, it enhances responses by retrieving documents from a knowledge base at query time. Think of it as “open‑book AI.”

Advantages

- Dynamic knowledge. Access to real‑time or frequently updated data.

- Lower cost. No need for repeated LLM training.

- Explainability. Ability to cite sources for improved trust.

Challenges

- Latency. Retrieval adds overhead.

- Quality control. Poorly indexed data equals poor results.

- Limited reasoning. The model still relies on external context stitching.

Best practices

- Use vector databases for efficient semantic search.

- Regularly update knowledge repositories.

- Benchmark retrieval quality against domain‑specific LLM comparison tests.

Use cases

- Enterprise search and knowledge management.

- Product support documentation.

- Financial research with real‑time market data.

Key takeaway

RAG excels when access to up-to-date information is critical. It keeps models dynamic, cost-efficient, and explainable, while avoiding repeated LLM training. However, performance depends on high-quality indexing, efficient retrieval, and careful monitoring to ensure accuracy and reliability.

AI agents: multi‑step reasoners and doers

While fine‑tuning and RAG improve single interactions, agents represent a different paradigm. They are systems that use LLMs as reasoning engines but also interact with tools, APIs, and other agents to complete multi‑step workflows.

Advantages

- Autonomy. Capable of executing complex tasks with minimal supervision.

- Flexibility. Can combine RAG, fine‑tuning, and external tools.

- Scalability. Extendable through modular architectures.

Challenges

- Reliability. Prone to “hallucinations” without guardrails.

- Governance. Harder to audit multi‑step reasoning.

- Engineering complexity. Requires orchestration frameworks.

Best practices

- Define clear boundaries for agent autonomy.

- Monitor workflows continuously.

- Leverage LLM benchmarks to evaluate reasoning performance.

Use cases

- Automated research assistants.

- Supply chain optimization.

- Customer onboarding journeys that require multiple systems.

Key takeaway

To cut a long story short, AI agents take LLM capabilities beyond single-turn answers by chaining reasoning with action. They offer autonomy, flexibility, and scalability, but come with reliability and governance challenges that require careful orchestration. Teams that define clear guardrails, monitor workflows, and ground performance with LLM benchmarks can unlock use cases from automated research to end-to-end customer journeys.

LLM leaderboards, benchmarks, and reality checks

In 2025, the surge of public LLM leaderboards has made it easier to track how different models perform across tasks. But remember: benchmarks are abstractions. They often emphasize math, reasoning, or academic QA tasks, which may not reflect your business context. When running an LLM comparison for your project:

- Use public LLM benchmarks as a starting point.

- Create internal benchmarks tied to your data.

- Test across scenarios like latency, compliance, and user satisfaction.

Benchmarks tell you how a model performs in theory, while pilots tell you how it performs in practice. Another limitation is that leaderboard results usually come from controlled test sets and don’t capture operational realities. For example, a model may rank high on reasoning tasks but struggle when integrated into workflows with strict latency requirements. Costs, availability of fine-tuning options, and integration complexity rarely make it into leaderboard summaries, yet they often determine real-world success. That’s why leading enterprises now build private benchmark suites to complement public results. These internal evaluations focus on KPIs that matter, such as conversion lift in a chatbot, error reduction in document parsing, or throughput in batch inference. By combining public metrics with custom testing, organizations gain a balanced view: what the model can do in theory, and what it will deliver in practice.

Cost and scalability: which approach wins?

Cost and scalability are often the deciding factors when choosing between fine-tuning, RAG, and agents. Each comes with its own trade-offs in terms of investment, maintenance, and long-term value. The right choice depends on whether your priority is efficiency, adaptability, or full automation.

- Fine-tuning. Higher upfront costs but scalable for repetitive, specialized use.

- RAG. Lower costs, highly dynamic, best when knowledge updates often.

- Agents. Potentially most expensive due to orchestration, but highest payoff for end‑to‑end automation.

In all fairness, there’s no single “winner” across all scenarios. Fine-tuning shines in high-volume, domain-specific workloads; RAG thrives where data changes quickly; and agents unlock new possibilities for orchestrated, multi-step tasks. The key is to align the approach with your business model, growth plans, and tolerance for complexity.

Key takeaway

In a nutshell, fine-tuning scales expertise, RAG scales knowledge, and agents scale workflows. Choosing wisely means balancing upfront cost with the value of long-term scalability.

How to choose: a decision matrix

Choosing between fine-tuning, RAG, and agents is about matching the right tool to the right problem. A clear decision matrix helps avoid over-engineering and ensures every solution directly supports business goals.

- If your domain knowledge is stable and compliance‑heavy. Invest in how to fine‑tune LLM pipelines.

- If your domain changes frequently or needs transparency. Adopt RAG.

- If your workflows are multi‑step and cross‑system. Build agents.

In fact, many businesses find hybrid models work best: fine‑tune for core tasks, RAG for freshness, and agents for orchestration. In practice, the smartest teams often combine approaches: fine-tuned models for specialized tasks, RAG for freshness and adaptability, and agents to stitch it all together. This layered strategy keeps AI both precise and flexible.

Key takeaway

A quick reminder: fine-tune for stability, RAG for adaptability, and agents for orchestration. Combining these approaches creates a hybrid AI strategy that balances precision, flexibility, and scalability. Fine-tuned models handle repetitive or compliance-heavy tasks reliably, RAG keeps knowledge up-to-date and transparent, and agents automate multi-step workflows across systems. This layered approach minimizes risks, reduces maintenance overhead, and maximizes business impact, ensuring AI solutions remain robust as requirements evolve.

Practical adoption steps

- Benchmark. Use an LLM leaderboard and your own metrics.

- Experiment. Run small pilots: RAG for FAQ systems, fine‑tuning for compliance workflows.

- Evaluate costs. Balance cloud compute, storage, and integration.

- Scale gradually. Introduce agents once reliability frameworks are in place.

The future: convergence of approaches

As of today, AI development is evolving toward integrated, multi-layered solutions rather than isolated tools. We’re already seeing platforms that blur the lines between methodologies: fine-tuned models that still leverage RAG for freshness, and agents that orchestrate fine-tuned models alongside RAG knowledge. This trend reflects a shift from choosing a single approach to designing hybrid systems that combine the strengths of each method. Businesses across industries are increasingly recognizing that there is no one-size-fits-all answer. The smartest teams mix and match based on business goals, regulatory constraints, data sensitivity, and budget considerations. By converging fine-tuning, RAG, and agents, organizations can achieve precision, adaptability, and automation in a single workflow. Looking ahead, this convergence promises AI systems that are more resilient, context-aware, and capable of delivering measurable business value across complex systems.

How Mitrix can help

At Mitrix, we deliver AI/ML and generative AI development services that help companies:

- Design intelligent bots that understand supply chain workflows

- Integrate chatbots with tracking systems, CRMs, ERPs, and TMSs

- Build multilingual, multi-channel support agents (WhatsApp, web, SMS)

- Monitor performance and apply machine learning for ongoing improvements

We also design end-to-end fine-tuning pipelines: selecting base models (LLM comparison), preparing data, running cost-efficient LLM training (LoRA & instruction tuning), and deploying with monitoring and compliance. We also benchmark-tuned models against public leaderboards and custom domain tests so you know the real business impact. We help companies turn the AI hype into practical outcomes:

- Build AI agents tailored to your workflows

- Integrate systems seamlessly across CRM, ERP, and support platforms

- Monitor performance and continuously improve AI outputs

- Ensure compliance, ethical standards, and reliable operation

Summing up

All in all, LLM fine‑tuning, RAG, and agents each solve different slices of the enterprise AI challenge:

- Fine‑tuning embeds expertise and consistency.

- RAG ensures freshness and explainability.

- Agents unlock orchestration and autonomy.

For leaders evaluating anything LLM, the path forward lies not in chasing leaderboard crowns but in aligning technical choices with strategic goals. Use LLM comparison frameworks, test against relevant LLM benchmarks, and understand the nuances of how to fine‑tune LLM or deploy RAG responsibly. The real winners will be those who combine these approaches into resilient, adaptive ecosystems.