From automating customer service to shaping executive decisions, artificial intelligence has become increasingly embedded into the fabric of businesses across industries. But with this shift comes a pressing responsibility: companies must treat AI not just as a tool, but as a decision-maker that reflects their values, priorities, and risk appetite.

This is where AI governance enters the conversation, though not as a standalone department or a checkbox compliance measure. To be effective, AI governance must be deeply and organically integrated into corporate culture. Without this cultural integration, even the most sophisticated technical guardrails can be ignored, circumvented, or quietly deprecated. In this article, you’ll learn:

- Why AI governance is no longer optional in a world of rising regulation, public scrutiny, and rapid AI adoption

- How company culture can make or break governance efforts

- The five key cultural shifts that embed governance into day-to-day decision-making

- Practical steps for turning AI governance principles into consistent business practice

- How a governance-first culture creates competitive advantage and long-term trust

- Why governance isn’t a brake on innovation, but a catalyst for faster, safer, and more sustainable progress

- How Mitrix helps organizations design AI systems with compliance, ethics, and transparency baked in from day one

Why AI governance is no longer optional

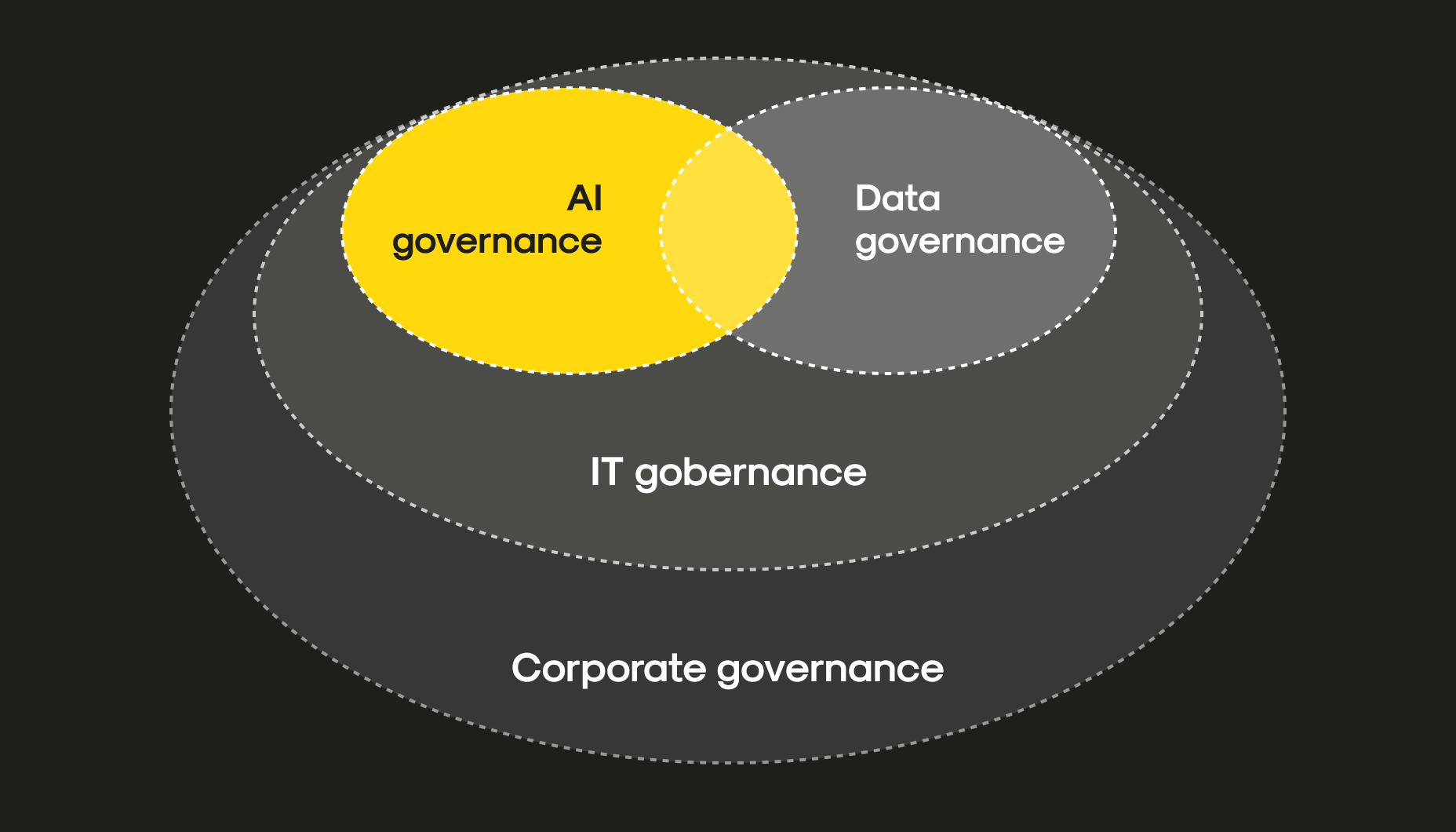

AI governance refers to the policies, processes, and practices that ensure AI systems operate reliably, ethically, and in alignment with an organization’s goals and values. It’s a discipline that encompasses data ethics, risk management, transparency, bias mitigation, regulatory compliance, and oversight.

As regulatory frameworks like the EU AI Act, Canada’s proposed AIDA, and forthcoming U.S. guidelines gain traction, companies that treat AI governance as a cultural imperative (and not just a regulatory necessity) are better positioned to adapt, innovate responsibly, and retain trust.

Integrating AI governance into corporate culture


More importantly, organizations face escalating public and internal scrutiny over how AI decisions are made. Customers are asking: “Was this recommendation made fairly?” Employees are wondering: “Can I trust this system to evaluate me?” And executives are asking: “Are we exposing ourselves to unnecessary legal or reputational risks?”

Culture shapes behavior

It’s a cliché for a reason: culture shapes behavior more powerfully than rules. You can issue a mandate that every AI project must undergo an ethical review, but if project teams are incentivized to prioritize speed over scrutiny, the review becomes a box-ticking exercise. Conversely, if teams view responsible AI as a shared value and success metric, governance becomes embedded in their day-to-day decisions.

This is why successful AI governance isn’t imposed from the top down or delegated to a siloed compliance team. It’s cultivated across all levels: leadership, product managers, engineers, marketers, and customer support reps. Each stakeholder plays a role in shaping how AI is built, deployed, and monitored.

5 cultural shifts to enable AI governance

To operationalize AI governance as a cultural norm, companies need more than frameworks: they need mindset shifts. Here are five:

1. From “Can we build it?” to “Should we build it?”

Encourage team members to question not just technical feasibility but societal and organizational impact. This means creating space for ethical inquiry at the ideation stage. A team building a predictive hiring tool should ask: “Will this system perpetuate bias?” before writing the first line of code.

Promoting this habit requires leadership endorsement. If the CEO or CTO openly supports pulling the plug on harmful or questionable AI use cases, it signals that responsibility isn’t a blocker, but a badge of integrity.

2. From risk avoidance to risk transparency

Most organizations are naturally risk-averse. When AI systems don’t work as intended, the instinct is to quietly patch the issue and move on. But opaque failures undermine long-term trust.

Instead, promote a culture of transparency around AI limitations. Encourage teams to document known issues, publish model cards, and communicate caveats to users. Far from exposing weakness, this practice builds credibility.
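To make “publish model cards” concrete, here is a minimal sketch of what a team might keep alongside a model. The `ModelCard` class, its field names, and the example values are all hypothetical and purely illustrative; they are not a standard schema, and your own template may need more fields.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelCard:
    """Hypothetical, minimal model card; field names are illustrative only."""

    name: str
    intended_use: str
    out_of_scope_uses: list[str] = field(default_factory=list)
    known_limitations: list[str] = field(default_factory=list)
    user_caveats: list[str] = field(default_factory=list)

    def is_publishable(self) -> bool:
        # Assumption: a card with no documented limitations is treated as
        # incomplete rather than as evidence of a flawless model.
        return bool(self.intended_use.strip()) and len(self.known_limitations) > 0

    def to_json(self) -> str:
        # Serialize the card so it can be stored or published with the model.
        return json.dumps(asdict(self), indent=2)


# Illustrative values only; they do not describe a real system.
card = ModelCard(
    name="support-ticket-router",
    intended_use="Route inbound support tickets to the right queue.",
    out_of_scope_uses=["Evaluating employee performance"],
    known_limitations=["Lower accuracy on tickets shorter than ten words"],
    user_caveats=["Routing suggestions can be overridden by an agent"],
)
print(card.to_json())
```

The `is_publishable` check encodes one possible team norm: a card cannot ship until at least one limitation has been written down.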

3. From data ownership to data stewardship

AI systems are only as good, and as ethical, as the data they’re trained on. A culture of governance reframes data not as a resource to be exploited, but as a responsibility to be managed carefully.

Data scientists and business leaders alike should understand the provenance, quality, and bias of the datasets they use. Train teams to ask: Where did this data come from? Who does it represent or exclude? Are we respecting privacy, consent, and fairness?
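The question “Who does it represent or exclude?” can be partly checked in code. The sketch below compares each group’s share of a dataset against a reference share and flags large gaps. The function name `representation_gaps`, the `region` attribute, the reference shares, and the 5-point tolerance are all assumptions made for illustration.

```python
from collections import Counter


def representation_gaps(records, attribute, reference_shares, tolerance=0.05):
    """Return groups whose dataset share differs from a reference share
    by more than `tolerance` (absolute difference in proportion)."""
    values = [r[attribute] for r in records if r.get(attribute) is not None]
    if not values:
        raise ValueError(f"no records carry the attribute {attribute!r}")
    counts = Counter(values)
    total = len(values)
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total
        if abs(observed - expected) > tolerance:
            gaps[group] = {"observed": round(observed, 3), "expected": expected}
    return gaps


# Made-up data: 70 records from the north, 28 from the south, 2 from the east.
records = (
    [{"region": "north"}] * 70
    + [{"region": "south"}] * 28
    + [{"region": "east"}] * 2
)
# Hypothetical shares of the population the model is meant to serve.
reference = {"north": 0.5, "south": 0.3, "east": 0.2}

gaps = representation_gaps(records, "region", reference)
print(gaps)  # flags "north" (over-represented) and "east" (under-represented)
```

A check like this covers only representation. Provenance, consent, and privacy still need human review.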

4. From black-box pride to explainability mindset

In some engineering cultures, model performance is the holy grail, even if the model is completely uninterpretable. But governance-oriented cultures prioritize clarity. If a model can’t be explained, it can’t be trusted, audited, or debugged.

To shift this mindset, reward teams not just for predictive accuracy, but for clarity and reproducibility. Encourage the use of interpretable models when possible and ensure stakeholders understand how decisions are made.

5. From “AI team’s job” to “everyone’s responsibility”

Governance fails when it’s viewed as the AI team’s domain. Real integration means marketing, legal, HR, sales, and product teams all understand their role in shaping and overseeing AI use.

This requires cross-functional training, interdisciplinary collaboration, and shared vocabulary. Make governance part of onboarding, performance reviews, and team retrospectives. Normalize the idea that responsible AI is central to business success.

Embedding governance in practice

Cultural shifts need anchors. Here’s how to reinforce AI governance across your organization:

Establish AI principles

Don’t just publish lofty mission statements. Create actionable AI principles that reflect your business values, like fairness, inclusivity, and human oversight, and make them a part of your daily operations. Review every new AI project against these principles and audit existing systems for alignment.
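One way to make “review every new AI project against these principles” routine is to record the review as data. This is a minimal sketch: the `PRINCIPLES` tuple reuses the three examples named above, and the `ProjectReview` class and the sample project are hypothetical.

```python
from dataclasses import dataclass

# Example principles taken from the text; replace with your organization's own.
PRINCIPLES = ("fairness", "inclusivity", "human_oversight")


@dataclass
class ProjectReview:
    """Hypothetical review record for a single AI project."""

    project: str
    evidence: dict  # maps principle name -> short note on how it is met

    def unmet_principles(self):
        # A principle counts as met only when a non-empty note exists for it.
        return [p for p in PRINCIPLES if not (self.evidence.get(p) or "").strip()]

    def approved(self):
        return not self.unmet_principles()


# Illustrative project: two principles are evidenced, one is missing.
review = ProjectReview(
    project="churn-predictor",
    evidence={
        "fairness": "Error rates compared across customer segments",
        "human_oversight": "Account managers confirm every retention offer",
    },
)
print(review.approved(), review.unmet_principles())  # False ['inclusivity']
```

The same record can be reused when auditing existing systems, since an unmet principle shows up as a named gap rather than a vague concern.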

Create multidisciplinary review committees

Governance thrives in diverse perspectives. Establish internal review boards composed of ethicists, legal advisors, engineers, product leads, and even customers. Give them real authority to review, approve, or challenge AI initiatives before they scale.

Incentivize ethical behavior

What you measure is what you prioritize. Add governance-related KPIs to team OKRs. Recognize and reward teams that go beyond compliance: those that proactively identify ethical risks or improve model fairness. Make it clear that doing the right thing is a career-advancing move, not a roadblock.

Build tools for governance-by-design

Equip teams with toolkits that integrate governance into their workflows. This might include:

- Automated bias detection in model training

- Documentation templates for model decisions

- Governance checklists for product launches

- Dashboards tracking drift, errors, or usage anomalies

These tools make it easier for teams to adopt governance without extra burden, and send a message that responsibility is part of how work gets done.
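As a rough illustration of the first and last items in the list, the sketch below computes one simple bias signal (the ratio of the lowest to the highest selection rate across groups) and one simple drift signal (the population stability index between two binned distributions), then combines them into a launch gate. The function names, the sample data, and the thresholds `min_ratio=0.8` and `max_psi=0.2` are assumptions chosen for illustration; real thresholds should be set by your own review process.

```python
import math


def selection_rate_ratio(outcomes_by_group):
    """Ratio of the lowest to the highest positive-outcome rate across groups.

    `outcomes_by_group` maps a group label to a list of 0/1 model decisions.
    A ratio of 1.0 means every group is selected at the same rate.
    """
    rates = {g: sum(o) / len(o) for g, o in outcomes_by_group.items() if o}
    if len(rates) < 2:
        raise ValueError("need at least two non-empty groups")
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody is selected in any group, so the rates are equal
    return min(rates.values()) / highest


def population_stability_index(expected, actual, eps=1e-6):
    """PSI between two binned distributions given as lists of proportions."""
    if len(expected) != len(actual):
        raise ValueError("distributions must use the same bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) on empty bins
        psi += (a - e) * math.log(a / e)
    return psi


def launch_gate(outcomes_by_group, train_bins, live_bins,
                min_ratio=0.8, max_psi=0.2):
    """Combine both signals; the default thresholds are illustrative."""
    ratio = selection_rate_ratio(outcomes_by_group)
    psi = population_stability_index(train_bins, live_bins)
    return {"ratio": ratio, "psi": psi,
            "passed": ratio >= min_ratio and psi <= max_psi}


# Made-up decisions: group_a is selected 8 times in 10, group_b 4 times in 10.
outcomes = {
    "group_a": [1, 1, 1, 0, 1, 1, 0, 1, 1, 1],
    "group_b": [1, 0, 1, 0, 0, 1, 0, 1, 0, 0],
}
result = launch_gate(outcomes, [0.25, 0.5, 0.25], [0.25, 0.5, 0.25])
print(result)  # the gate fails on the selection-rate ratio, not on drift
```

Numbers like these could feed a dashboard or a pre-launch checklist, but they are signals for human reviewers rather than a verdict on fairness.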

Communicate internally and externally

Treat AI governance like a strategic differentiator. Share your practices with customers, regulators, and the public. Publish transparency reports, and be vocal about where you’re still learning.

Internally, make governance stories part of all-hands meetings, newsletters, or CEO memos. Highlight ethical dilemmas that were spotted early or project pivots that prioritized safety. These stories shape collective memory and reinforce culture.

The competitive advantage of a governance-first culture

In a marketplace where AI capabilities are rapidly commoditized, governance is emerging as a true differentiator. Two companies can deploy equally powerful models, but the one that can prove its systems are fair, transparent, and accountable will win the trust of customers, partners, and regulators. That trust compounds over time: it opens doors to new markets, reduces compliance costs, and attracts higher-quality partnerships.

Forward-thinking organizations understand that governance keeps AI initiatives aligned with strategic goals while avoiding the kind of ethical or legal missteps that can undo years of progress overnight. By embedding governance into your corporate DNA, you’re not just protecting your business; you’re future-proofing it.

Governance as an innovation catalyst

One of the most persistent misconceptions about AI governance is that it slows innovation. In reality, the opposite is true. A well-structured governance culture doesn’t stifle creativity: it channels it into directions that are safer, more sustainable, and more likely to succeed in the market. When teams know the ethical boundaries, compliance expectations, and transparency requirements from the outset, they can design solutions with fewer pivots, less rework, and lower risk of late-stage project derailment.

Governance also encourages experimentation with confidence. Developers are more willing to test bold ideas when they know there’s a framework in place to evaluate potential harms, monitor outcomes, and roll back if necessary. Executives can greenlight projects faster when governance processes give them a clear, data-backed view of risk exposure.

Perhaps most importantly, governance gives innovation a reputational boost. Launching a cutting-edge AI product is impressive, but launching one that can withstand public, regulatory, and ethical scrutiny is game-changing. It’s the difference between being first to market and staying in the market. In this sense, governance is the seatbelt that lets you go faster, knowing you’re protected.

How Mitrix can help

At Mitrix, we offer AI/ML and generative AI development services to help businesses move faster, work smarter, and deliver more value. We take clients beyond proof-of-concept AI, building solutions that aren’t just viable but valuable, trusted, and adopted.

For organizations integrating AI governance into their corporate culture, we provide more than just technical expertise. Our engineers design AI systems with built-in compliance, ethical safeguards, and transparent decision-making processes. We help you establish governance frameworks that ensure AI is aligned with your business values, regulatory requirements, and operational realities.

From risk assessment and bias detection to audit-ready documentation and employee training, we make sure your AI is not only powerful but also accountable, so it becomes a trusted part of your culture, not a source of uncertainty. Contact us today!

Summing up

Cultural change doesn’t happen overnight. However, integrating AI governance into your corporate culture is no longer a luxury; it’s table stakes for scaling responsibly in an AI-driven world.

Organizations that succeed will treat governance not as friction, but as an accelerant, one that builds customer trust, reduces regulatory surprises, and attracts top talent. Most importantly, it ensures that as your AI systems scale, your values scale with them. So don’t just build smarter machines. Build a smarter organization, one where governance is lived, not laminated.