The AI industry is maturing, and with that comes the need for standardized ways to work with large language models (LLMs). The Model Context Protocol (MCP) has emerged as an open standard for connecting LLMs to external tools and data sources, making AI-driven systems easier to build and maintain. But what does this new standard actually mean in practice?

To break it down, we sat down with Arthur Kuchynski, our ML engineer, to discuss how MCP works, why it matters, and what it could mean for the future of AI applications.

Arthur is an ML engineer at Mitrix with over seven years of experience in software engineering, the last five of which he has spent diving deep into AI and machine learning. His expertise covers computer vision, LLMs, and automation.

Join us as we dive into the technical details, real-world applications, and challenges of implementing this new standard.

Introduction and challenges

Hi Arthur! How’s it going? Could you tell us a bit about yourself and your background?

I love building solutions that make AI development smoother, whether it’s research, data wrangling, model training, or deployment. On a typical day, you’ll find me working with tools like OpenCV, LangChain, Apache Airflow, OpenAI, and various cloud services, including AI-as-a-service (AIaaS) platforms.

Over the years, I’ve tackled projects ranging from object detection, segmentation, and NLP-driven OCR systems to RAG implementations for models like GPT-4o.

So, I guess you could say I’m deeply into making AI practical and efficient. And that’s exactly why I’m here to chat with you about the Model Context Protocol.

That sounds awesome! Okay, let’s get down to the topic of discussion. Can you briefly explain what the MCP is, and why it matters for modern AI-powered development?

MCP is an open, standardized integration protocol released by Anthropic that allows AI applications, especially those built on LLMs, to interact with external data sources, APIs, and tools.

It’s crucial for modern AI-powered development because it simplifies integration and enables LLMs to dynamically access real-time information and execute tasks without extensive custom coding.

But what are the key challenges that MCP solves?

Before MCP, building AI systems often involved:

- Custom implementations for hooking each AI application into its required context and data formats, which led to a lot of duplicated effort.

- Inconsistent prompt and context logic, with different teams and companies using different methods for accessing and federating tools and data.

- The “N × M problem”, where N client applications each needed their own integration with each of M servers and tools, resulting in a tangle of integrations that each required dedicated development effort.

MCP addresses these challenges with a single, uniform protocol.
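The arithmetic behind the “N × M problem” can be made concrete. Under the framing commonly used to motivate MCP, N client applications that each integrate directly with M tools or data sources need one bespoke connector per pair, whereas with a shared protocol each application implements an MCP client once and each tool is exposed through an MCP server once. With hypothetical counts of N = 5 and M = 10:

```latex
\begin{aligned}
\text{point-to-point integrations:}\quad & N \times M = 5 \times 10 = 50 \\
\text{shared protocol (clients + servers):}\quad & N + M = 5 + 10 = 15
\end{aligned}
```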

What was your initial impression when you first learned about MCP?

Initially, MCP impressed me as an innovative and practical solution to longstanding AI integration issues. With IDE-level support and plug-ins for several agent frameworks, AI agent development has become a lot faster: a simple AI agent can now be implemented within a few hours.

Technical aspects

How does MCP differ from the traditional integration approach between LLM applications and external tools?

Traditional integration typically requires writing bespoke code for each tool, creating fragmented, rigid, and labor-intensive solutions. MCP provides a standardized interface where LLMs autonomously decide which external tools to invoke. That makes integration flexible, scalable, and significantly easier to maintain.

Let me give you an example.

The augmented LLM

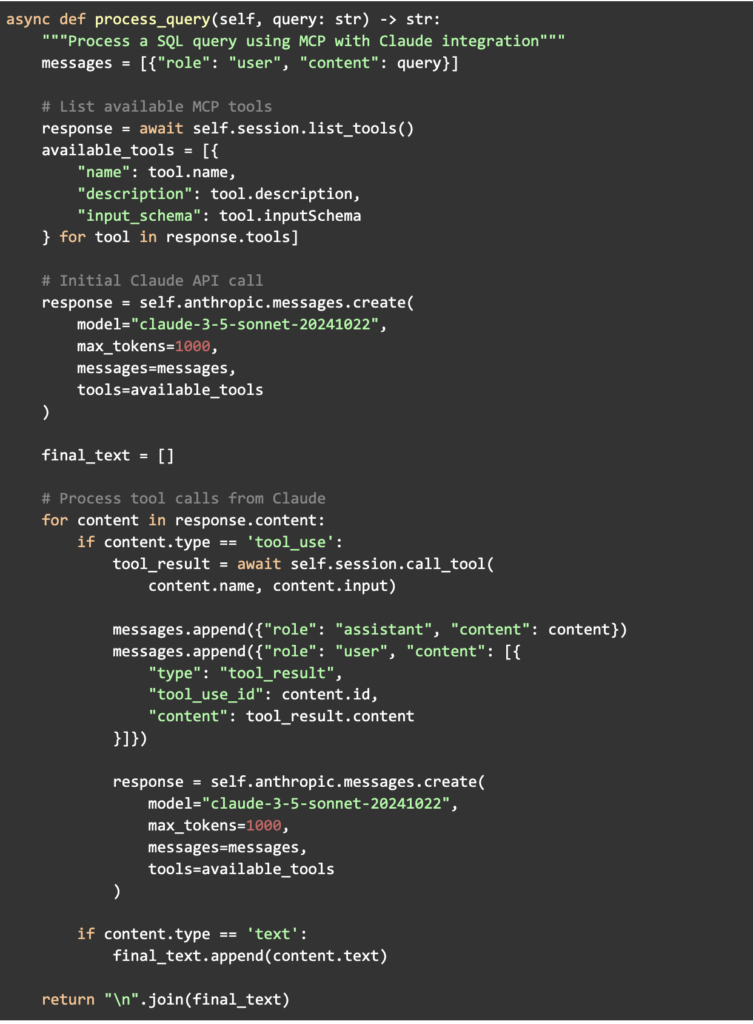

With traditional client integrations, calling a database involves explicitly coded API requests and database clients, whose output then has to be post-processed in the agent pipeline. MCP changes that: the database is exposed as a tool, and an LLM like Claude decides on its own when to call it, for example to run an SQL query.
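A minimal, self-contained sketch of the server side of such an exchange follows. It is not the official MCP SDK: it hand-rolls JSON-RPC 2.0 messages for the MCP `tools/list` and `tools/call` methods over an in-memory SQLite database. The tool name `run_sql`, the `orders` table, and the `handle`/`reply` helpers are hypothetical, and transport, session initialization, and real sandboxing are deliberately left out.

```python
import json
import sqlite3

# Hypothetical in-memory database standing in for a real data source.
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT)")
db.executemany("INSERT INTO orders (status) VALUES (?)", [("shipped",), ("pending",)])

# Tool metadata advertised to the client; the model reads the description.
TOOLS = [{
    "name": "run_sql",  # hypothetical tool name
    "description": "Run a read-only SQL SELECT query against the orders database.",
    "inputSchema": {
        "type": "object",
        "properties": {"query": {"type": "string"}},
        "required": ["query"],
    },
}]


def reply(req_id, result=None, error=None):
    """Build a JSON-RPC 2.0 response carrying either a result or an error."""
    msg = {"jsonrpc": "2.0", "id": req_id}
    if error is not None:
        msg["error"] = error
    else:
        msg["result"] = result
    return json.dumps(msg)


def handle(raw: str) -> str:
    """Dispatch one JSON-RPC 2.0 request string and return the response string."""
    req = json.loads(raw)
    if req["method"] == "tools/list":
        return reply(req["id"], {"tools": TOOLS})
    if req["method"] == "tools/call":
        params = req["params"]
        if params["name"] != "run_sql":
            return reply(req["id"], error={"code": -32602, "message": "Unknown tool"})
        query = params["arguments"]["query"]
        # Naive read-only guard for the sketch; not a substitute for real sandboxing.
        if not query.lstrip().upper().startswith("SELECT"):
            text, is_error = "Only SELECT queries are allowed.", True
        else:
            text, is_error = json.dumps(db.execute(query).fetchall()), False
        return reply(req["id"], {"content": [{"type": "text", "text": text}],
                                 "isError": is_error})
    return reply(req["id"], error={"code": -32601, "message": "Method not found"})


if __name__ == "__main__":
    # The request a client would send after the model decides to call the tool.
    call = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
            "params": {"name": "run_sql",
                       "arguments": {"query": "SELECT status FROM orders WHERE id = 1"}}}
    print(handle(json.dumps(call)))
```

The point of the design is that the application code never hard-wires the query: the model discovers `run_sql` through `tools/list` and fills in the arguments itself, while the server only validates and executes them.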

How does MCP manage and maintain context across different integrations?

MCP uses a stateful client-server architecture where context can persist across interactions. This continuous connection enables the AI to iteratively query multiple tools, building and maintaining coherent context throughout the interaction, unlike traditional one-off integrations.

Host side

The MCP host process acts as the container and coordinator:

- It creates and manages multiple client instances

- Controls client connection permissions and lifecycle

- Enforces security policies

- Handles user authorization decisions

- Coordinates AI/LLM integration and response sampling

- Manages context aggregation across MCP clients

Client side

Each MCP client is created by the host and maintains an isolated server connection:

- It establishes one stateful session per server

- Handles protocol negotiation and capability exchange

- Routes protocol messages bidirectionally

- Maintains security boundaries between servers
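The division of labor above can be sketched in a few lines. This is a hypothetical, in-process model of the roles rather than SDK code: the `Host`, `Client`, and `FakeServer` classes, the `allowed_servers` permission set, and the `history` list standing in for per-session context are all illustrative assumptions. It shows the host creating one client per server and refusing connections it has not permitted, while each client keeps its own stateful session so context from earlier calls persists.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class FakeServer:
    """Hypothetical stand-in for an MCP server: a name plus callable tools."""
    name: str
    tools: dict[str, Callable[..., Any]]


@dataclass
class Client:
    """One client per server, holding an isolated, stateful session."""
    server: FakeServer
    history: list[tuple[str, Any]] = field(default_factory=list)

    def call(self, tool: str, **arguments: Any) -> Any:
        result = self.server.tools[tool](**arguments)
        self.history.append((tool, result))  # session state persists across calls
        return result


class Host:
    """Creates clients, controls which servers may connect, aggregates context."""

    def __init__(self, allowed_servers: set[str]) -> None:
        self.allowed_servers = allowed_servers
        self.clients: dict[str, Client] = {}

    def connect(self, server: FakeServer) -> Client:
        if server.name not in self.allowed_servers:
            raise PermissionError(f"server {server.name!r} is not permitted")
        client = Client(server)  # one isolated client per server connection
        self.clients[server.name] = client
        return client

    def aggregated_context(self) -> dict[str, list[tuple[str, Any]]]:
        # Each client only sees its own server; the host sees all sessions.
        return {name: list(c.history) for name, c in self.clients.items()}


if __name__ == "__main__":
    host = Host(allowed_servers={"kb"})
    kb = host.connect(FakeServer("kb", {"search": lambda q: f"results for {q}"}))
    kb.call("search", q="MCP")
    kb.call("search", q="JSON-RPC")
    print(host.aggregated_context())
```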

In your opinion, what technologies or frameworks are best suited for integrating MCP?

MCP is well-supported by official SDKs in Python, JavaScript/TypeScript, Java, Kotlin, and C#. Popular frameworks and tools integrating MCP include Anthropic’s Claude and Claude Desktop applications, IDEs and IDE plugins (VS Code, Cursor), AI orchestration libraries (e.g., LangChain MCP adapters, fast-agent), and agent UI frameworks like Chainlit.

How does MCP ensure scalability and efficiency in handling context?

MCP addresses both by using lightweight JSON-RPC 2.0 messages with support for streaming and asynchronous tool invocation. I would also point to two more features: persistent, stateful sessions, which minimize redundant context exchange, and built-in asynchronous handling of multiple parallel requests and tool calls, which improves overall system responsiveness.
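Here is a small sketch of why JSON-RPC 2.0 request IDs make parallel tool calls workable over a single connection: the client keeps several requests in flight and routes each response to its waiting request by `id`, even when responses arrive out of order. The `Connection` class and its `_fake_server` coroutine (with a random delay) are hypothetical stand-ins for a real MCP transport; only the message fields (`jsonrpc`, `id`, `method`, `params`, `result`) follow JSON-RPC 2.0.

```python
import asyncio
import itertools
import json
import random


class Connection:
    """Hypothetical connection that multiplexes many in-flight JSON-RPC requests."""

    def __init__(self) -> None:
        self._ids = itertools.count(1)
        self._pending: dict[int, asyncio.Future] = {}
        self._tasks: set[asyncio.Task] = set()

    async def _fake_server(self, raw: str) -> None:
        # Stand-in server: replies after a random delay, so replies arrive out of order.
        req = json.loads(raw)
        await asyncio.sleep(random.uniform(0.01, 0.05))
        text = f"{req['params']['name']} done"
        reply = {"jsonrpc": "2.0", "id": req["id"],
                 "result": {"content": [{"type": "text", "text": text}]}}
        self._on_message(json.dumps(reply))

    def _on_message(self, raw: str) -> None:
        # Route each incoming response to the request waiting for it, by id.
        msg = json.loads(raw)
        self._pending.pop(msg["id"]).set_result(msg)

    async def request(self, method: str, params: dict) -> dict:
        req_id = next(self._ids)
        future = asyncio.get_running_loop().create_future()
        self._pending[req_id] = future
        msg = {"jsonrpc": "2.0", "id": req_id, "method": method, "params": params}
        # "Send" without blocking; keep a reference so the task is not garbage-collected.
        task = asyncio.create_task(self._fake_server(json.dumps(msg)))
        self._tasks.add(task)
        task.add_done_callback(self._tasks.discard)
        return await future


async def main() -> list[dict]:
    conn = Connection()
    # Three independent tool calls are in flight at the same time.
    return await asyncio.gather(
        conn.request("tools/call", {"name": "search_docs", "arguments": {"q": "MCP"}}),
        conn.request("tools/call", {"name": "run_sql", "arguments": {"query": "SELECT 1"}}),
        conn.request("tools/call", {"name": "fetch_ticket", "arguments": {"id": 42}}),
    )


if __name__ == "__main__":
    for response in asyncio.run(main()):
        print(response["id"], response["result"]["content"][0]["text"])
```

Total latency is roughly that of the slowest call rather than the sum of all three, which is the responsiveness gain described above.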

Are there any security concerns developers should be aware of when implementing MCP?

Yes, definitely. MCP simplifies integration, but developers still have to take care of security: explicit user consent, secure authentication mechanisms (like OAuth), proper sandboxing for sensitive operations (e.g., database queries or shell commands), and granular permission settings to mitigate unauthorized access and data exposure. This matters most when an agent is supposed to call external tools; in that case, implement human-in-the-loop verification if possible.
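One way to act on this advice is to route every tool invocation through a permission gate. The sketch below is a hypothetical pattern, not part of the MCP specification or SDKs: `ToolPolicy`, the `allowed` and `needs_approval` sets, `guarded_call`, and the `approve` callback (which in a real app would prompt the user) are illustrative names. Tools not on the allowlist are denied by default, and sensitive ones run only after explicit human approval.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class ToolPolicy:
    allowed: set[str]  # tools the agent may call at all
    needs_approval: set[str] = field(default_factory=set)  # human-in-the-loop tools


def guarded_call(policy: ToolPolicy,
                 approve: Callable[[str, dict], bool],
                 tools: dict[str, Callable[..., Any]],
                 name: str,
                 arguments: dict) -> Any:
    """Invoke a tool only if the policy allows it and, where required, a human approves."""
    if name not in policy.allowed:
        raise PermissionError(f"tool {name!r} is not allowed")  # deny by default
    if name in policy.needs_approval and not approve(name, arguments):
        raise PermissionError(f"user declined {name!r}")
    return tools[name](**arguments)


if __name__ == "__main__":
    tools = {"read_file": lambda path: f"contents of {path}",
             "run_shell": lambda cmd: f"ran {cmd}"}
    policy = ToolPolicy(allowed={"read_file", "run_shell"}, needs_approval={"run_shell"})

    def ask_user(name: str, arguments: dict) -> bool:
        # In a real app this would prompt the user; here it always declines.
        return False

    print(guarded_call(policy, ask_user, tools, "read_file", {"path": "notes.txt"}))
    try:
        guarded_call(policy, ask_user, tools, "run_shell", {"cmd": "rm -rf build"})
    except PermissionError as exc:
        print("blocked:", exc)
```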

Areas of application and personal experience

Can you share some real-world scenarios where MCP can power AI-driven apps?

Well, there are plenty of them. I’d mention these:

- Customer support chatbots. Through MCP, a chatbot can query the CRM or databases directly to give personalized responses (e.g., real-time order statuses).

- Personalized recommendations. AI-powered engines dynamically pull data from user profiles and real-time analytics to provide tailored recommendations (e.g., for streaming services).

- Developer tools. IDE plugins use MCP to fetch documentation, run tests, or search repositories, which is a great way to improve productivity.

- Data analysis. Analysts use AI to run multi-step database queries and analytical tasks in natural language, with MCP orchestrating the operations behind the scenes.

- Speech-to-text, image-to-text, and vice versa. Since an MCP server lets you implement any custom tool, you can go multimodal and/or build complex pipelines, e.g., for content creation (publishing articles, podcasts, audiobooks, and image or video generation).

Have you worked with MCP on any projects? If so, what were the biggest takeaways?

Yes, I’ve integrated MCP into a project that connects an AI agent to internal knowledge bases. The main takeaway was reduced integration complexity compared to the FastAPI + LangChain approach. MCP also improved response accuracy, and the project showed how critical clear tool descriptions are for guiding the model’s interactions effectively.
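Because clear tool descriptions matter so much, here is what a well-described tool could look like in the shape MCP servers advertise through `tools/list` (`name`, `description`, and a JSON Schema `inputSchema`), plus a tiny lint check. The `search_kb` tool, its parameters, the `lint_tool` helper, and its 40-character threshold are hypothetical illustrations, not details of the project described above.

```python
from typing import Any

# Hypothetical tool definition for an internal knowledge base.
SEARCH_KB = {
    "name": "search_kb",
    "description": (
        "Search the internal knowledge base and return the most relevant article "
        "snippets. Use it for questions about internal processes or product docs; "
        "do not use it for customer data."
    ),
    "inputSchema": {
        "type": "object",
        "properties": {
            "query": {"type": "string", "description": "Natural-language search query."},
            "limit": {"type": "integer", "description": "Max snippets to return (1-10)."},
        },
        "required": ["query"],
    },
}


def lint_tool(tool: dict[str, Any], min_description: int = 40) -> list[str]:
    """Return problems that would make a tool hard for a model to use correctly."""
    problems = []
    if len(tool.get("description", "")) < min_description:
        problems.append("tool description is too short")
    schema = tool.get("inputSchema", {})
    props = schema.get("properties", {})
    for name, spec in props.items():
        if not spec.get("description"):
            problems.append(f"parameter {name!r} has no description")
    for name in schema.get("required", []):
        if name not in props:
            problems.append(f"required parameter {name!r} is not defined")
    return problems


if __name__ == "__main__":
    print(lint_tool(SEARCH_KB))
```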

Moreover, MCP isn’t tied to a single model provider. We often use OpenAI, and its Agents SDK supports MCP as well.

How easy or difficult is it for developers to adopt MCP in existing systems?

To tell the truth, adopting MCP is relatively easy, especially with the available SDKs and pre-built connectors. Integration typically requires minimal effort compared to traditional methods.

Are there any common pitfalls developers should avoid when implementing MCP?

Developers should prioritize explicit user consent, follow security best practices for tool invocation, and manage context properly to prevent data leaks and ensure efficient communication between models and tools.

Summing up

Where do you see MCP evolving in the next few years?

I see MCP becoming a go-to standard with broader adoption, stronger remote and cloud integration, and support for more than just text – think images, audio, and beyond. We’ll likely see better tooling for popular IDEs, making it easier for developers to work with. There’s also a good chance of formal standardization, maybe even a centralized MCP registry to help discover and verify integrations more easily.

Are there any improvements or additional features you’d like to see in MCP?

Well, enhancements I’d welcome include improved debugging, built-in caching/queuing mechanisms, stronger sandboxing capabilities, and better integration with conversation memory frameworks and vector stores to unify context management fully.

What advice would you give to developers looking to get started with MCP?

Start small and iterate, try existing MCP connectors and SDKs, and manage security carefully from the outset. Define your integration points clearly from both the AI and the business perspective: they should be clear and relevant to you, your team, and your clients. And, of course, don’t forget to share your experience with the MCP community.

What is the business value of switching your AI app development methodology to MCP?

From my experience at Mitrix, adopting MCP helps speed up development by standardizing tool integrations, so AI apps get to market faster without needing constant rework. It also cuts maintenance costs since a unified approach means less hassle managing MCP servers over time. And when it comes to customer experience, MCP is a game changer: real-time, context-aware interactions let you support more third-party services in your AI app, so you have a competitive edge.

Okay, I think that’s it. Thanks for the interview, Arthur!

No problem. See you around!

Interested in AI? Check out our case study on LeadGuru, an effective tool for lead generation and management across multiple social media communities.
