As companies hurry to release AI-powered features, many rely heavily on the MVP (minimum viable product) approach. The idea is clear: build fast, validate with users, iterate later. The approach works beautifully for many types of software, but AI is a different story. What’s “viable” for a simple app feature doesn’t always translate when the feature depends on probabilistic models, unpredictable data, or nuanced human interaction.

As a result, many AI MVPs reach production as underdeveloped features: they are difficult to interpret, fragile, costly to maintain, and ultimately left unused. In the worst cases, they damage user trust or the company’s reputation. So, why do so many teams fall into this trap, and how can you avoid it? In this article, you will discover:

- Why traditional MVP thinking often fails when applied to AI-powered features

- The common pitfalls that cause AI MVPs to underdeliver, and how to avoid them

- What defines a minimum valuable product in the context of AI

- A step-by-step AI MVP strategy focused on trust, usability, and impact

- How much it really costs to build and maintain an AI MVP, including hidden expenses

- A real-world example of the MVP trap, and what a better approach looks like

- How Mitrix helps businesses build AI features that are not just viable, but valuable and scalable

What is the MVP trap in AI?

The MVP trap in AI happens when teams apply traditional software MVP strategies to machine learning-driven features, expecting the same results. They ship an AI-powered “something” quickly, hoping it’s good enough to validate or learn from. But AI isn’t a button or a form field; it’s a living system.

Key symptoms of an MVP trap:

- The AI feature technically works (sometimes)

- Users don’t trust it, understand it, or use it

- There’s no feedback loop to improve performance

- It adds complexity but little measurable value

- It looks shiny but solves nothing meaningful

In 2025, AI features need more than functionality. They need reliability, explainability, and clear value-add. When MVPs skip those foundations, the result isn’t a prototype; it’s shelfware.

Why AI MVPs often underdeliver

Let’s break down the reasons AI MVPs fail to live up to expectations:

1. No clear success criteria

Traditional MVPs often succeed by being just usable enough. With AI, “just usable” might mean 70% accuracy, which feels broken to users who expect the feature to be right every time. Without clear thresholds for acceptable model performance or real-world impact, it’s impossible to define what “viable” even means.

2. Weak feedback loops

AI systems need feedback to improve. If your MVP lacks a mechanism to collect user feedback, track accuracy in the wild, or monitor drift over time, it quickly becomes outdated or, worse, misleading.
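To make “monitor drift” concrete, here is a minimal sketch of one common check, the population stability index (PSI). It compares the distribution of a logged signal (for example, model confidence scores) at launch against a recent window. The function names and the 0.2 alert threshold are illustrative assumptions; 0.2 is a widely used rule of thumb, not a standard.

```python
import math
from typing import Sequence


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10) -> float:
    """Population stability index between a baseline sample and a live sample.

    Bin edges come from the baseline's min/max; live values outside that
    range are clamped into the first or last bin.
    """
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width when the baseline is constant

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor keeps log() defined when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_alert(expected: Sequence[float], actual: Sequence[float], threshold: float = 0.2) -> bool:
    # Hypothetical alert rule built on the common "PSI above 0.2 is a significant shift" heuristic.
    return psi(expected, actual) > threshold


baseline = [i / 100 for i in range(100)]       # scores observed at launch
shifted = [0.9 + i / 1000 for i in range(100)]  # recent scores bunched near the top
```

In practice you would run a check like this on a schedule for each monitored feature or score, and treat an alert as a prompt to investigate rather than an automatic retrain.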

3. Misaligned expectations

Stakeholders frequently expect AI to just work, but a half-trained model with limited context tends to perform inconsistently. When teams ship an MVP with caveats or limitations, they risk disappointing users who expected seamless intelligence, not a glorified guess.

4. Overemphasis on novelty

Many MVPs are built to showcase the AI, not the value it brings. Flashy features like AI-generated summaries, auto-tagging, or chatbots are added because they’re cool (and not because they solve a real user problem). When users can’t figure out why they should care, they don’t.

5. No path to scale or maintenance

An MVP might get a model working with a static dataset and basic UI. But what happens when data changes? What if the model needs fine-tuning, retraining, or labeling? If that path isn’t planned, the MVP becomes a dead end instead of a foundation.

Rather than focusing on the minimum viable version of an AI feature, shift the mindset to a minimum valuable product. Ask: what’s the least we can do to deliver value, earn trust, and learn quickly?

Building smart from the start: your AI MVP strategy

A well-crafted AI MVP proves technical feasibility while validating real-world value with minimal waste. In this section, we’ll explore how to define, scope, and execute AI MVPs that go beyond flashy demos and deliver meaningful outcomes for users and businesses alike. Here’s what that looks like in practice:

1. Start with the user problem, not the model

Don’t start with “How can we use GPT-4 here?” Start with “What’s the most painful part of this workflow?” or “Where are people wasting time?” Then, ask if AI can meaningfully help. AI is not the product. It’s an enabler of better user outcomes.

2. Define value and success upfront

Before writing a line of code, define what success looks like. Is it saving users time? Reducing manual effort by 50%? Getting 90% classification accuracy? If you can’t measure it, don’t ship it.
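One way to hold the team to “if you can’t measure it, don’t ship it” is to write the targets down as data before any model exists and gate the release on them. A minimal sketch, assuming your evaluation pipeline reports metrics as a name-to-value dictionary; the metric names, and the minutes-per-ticket target in particular, are hypothetical examples.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SuccessCriterion:
    name: str
    target: float
    higher_is_better: bool = True

    def met(self, observed: float) -> bool:
        return observed >= self.target if self.higher_is_better else observed <= self.target


# Hypothetical targets, agreed on before any model work starts.
CRITERIA = [
    SuccessCriterion("classification_accuracy", 0.90),
    SuccessCriterion("manual_effort_reduction", 0.50),
    SuccessCriterion("median_minutes_per_ticket", 5.0, higher_is_better=False),
]


def ship_decision(criteria: list[SuccessCriterion], observed: dict[str, float]) -> tuple[bool, list[str]]:
    """Return (ready_to_ship, reasons); a missing metric counts as a failure."""
    failures = []
    for c in criteria:
        if c.name not in observed:
            failures.append(f"{c.name}: not measured")
        elif not c.met(observed[c.name]):
            failures.append(f"{c.name}: {observed[c.name]} vs target {c.target}")
    return not failures, failures


ready, problems = ship_decision(
    CRITERIA,
    {"classification_accuracy": 0.87, "manual_effort_reduction": 0.55, "median_minutes_per_ticket": 4.0},
)
print(ready, problems)  # False ['classification_accuracy: 0.87 vs target 0.9']
```

Treating an unmeasured metric as a failure is the design choice that matters here: it forces the instrumentation to exist before launch.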

3. Design for explainability

Users don’t just need predictions: they need to understand why. Even if your MVP isn’t perfect, adding transparency (e.g., “We predicted this because…”) goes a long way in building trust.
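For a simple linear scoring model, a “We predicted this because…” message can be built directly from each feature’s contribution (weight × value). The sketch below assumes such a model; the feature names and weights are hypothetical. For non-linear models you would need an attribution method such as SHAP instead, and this per-feature arithmetic would no longer apply.

```python
def explain_linear(
    weights: dict[str, float],
    features: dict[str, float],
    bias: float = 0.0,
    top_k: int = 2,
) -> tuple[float, str]:
    """Score a linear model and build a plain-language reason from its largest contributions."""
    contributions = {name: weights.get(name, 0.0) * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    # Rank by magnitude so strong negative evidence is surfaced too.
    top = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)[:top_k]
    reasons = ", ".join(f"{name} ({'+' if c >= 0 else '-'}{abs(c):.2f})" for name, c in top)
    return score, f"We predicted this mainly because of {reasons}"


# Hypothetical ticket-priority model.
weights = {"mentions_refund": 1.5, "customer_tier_premium": 0.8, "message_length": -0.01}
features = {"mentions_refund": 1.0, "customer_tier_premium": 1.0, "message_length": 120.0}
score, reason = explain_linear(weights, features)
print(reason)  # We predicted this mainly because of mentions_refund (+1.50), message_length (-1.20)
```

In a real UI you would map raw feature names to user-friendly phrases before displaying them.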

4. Build the feedback loop into V1

Whether it’s thumbs-up/down, edit logging, or model drift tracking, bake in ways to capture what’s working and what’s not. This gives you the data to improve, iterate, and justify continued investment.
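A minimal sketch of what “baking in” feedback can look like: every user reaction is stored with the prediction ID and model version, so you can compare versions and turn edits into new labeled examples. The class and field names are hypothetical, and a real system would write to a database or event stream rather than an in-memory list.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum
from typing import Optional


class Verdict(Enum):
    THUMBS_UP = "thumbs_up"
    THUMBS_DOWN = "thumbs_down"
    EDITED = "edited"


@dataclass
class FeedbackEvent:
    prediction_id: str
    model_version: str
    verdict: Verdict
    corrected_output: Optional[str] = None  # the user's version when verdict is EDITED
    at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


class FeedbackLog:
    """In-memory stand-in for a feedback store."""

    def __init__(self) -> None:
        self.events: list[FeedbackEvent] = []

    def record(self, event: FeedbackEvent) -> None:
        self.events.append(event)

    def approval_rate(self, model_version: str) -> Optional[float]:
        """Share of thumbs-up among all feedback for one model version (None if no feedback yet)."""
        events = [e for e in self.events if e.model_version == model_version]
        if not events:
            return None
        return sum(e.verdict is Verdict.THUMBS_UP for e in events) / len(events)

    def corrections(self) -> list[tuple[str, str]]:
        """(prediction_id, corrected_output) pairs: candidate labels for the next retraining round."""
        return [
            (e.prediction_id, e.corrected_output)
            for e in self.events
            if e.verdict is Verdict.EDITED and e.corrected_output is not None
        ]


log = FeedbackLog()
log.record(FeedbackEvent("p1", "v1", Verdict.THUMBS_UP))
log.record(FeedbackEvent("p2", "v1", Verdict.THUMBS_DOWN))
log.record(FeedbackEvent("p3", "v1", Verdict.EDITED, corrected_output="Reset the router first."))
```

Tagging each event with `model_version` is what lets you tell whether a retrained model actually improved on its predecessor.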

5. Plan for scale and support

Even an MVP should answer: how will this model retrain? How will we monitor performance? Who maintains the data pipeline? MVPs that can’t grow will become liabilities, not assets.

Real-world example: from MVP to real value

Let’s say your company builds a helpdesk platform. You want to add an AI assistant that suggests answers to incoming tickets.

An MVP trap would look like:

- Adding a model that suggests answers with no way to edit, track, or flag them

- No visibility into model confidence or rationale

- No process for retraining on newer tickets

A minimum valuable product would:

- Only suggest answers when confidence exceeds a certain threshold

- Let users easily accept, modify, or reject the suggestion

- Include analytics on usage, accuracy, and feedback

- Have a clear plan for continuous improvement based on newly labeled tickets
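The first bullet raises the question of which threshold to use. One reasonable approach, sketched below under the assumption that you have a labeled validation set of (confidence, was-the-suggestion-correct) pairs, is to pick the lowest cutoff at which the suggestions you would show still meet a precision target; a lower cutoff means suggestions appear on more tickets. The names, the sample data, and the 90% target are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Suggestion:
    ticket_id: str
    text: str
    confidence: float  # assumed to be a score in [0, 1] where higher means more likely correct


def pick_threshold(validation: list[tuple[float, bool]], min_precision: float) -> Optional[float]:
    """Lowest confidence cutoff whose shown suggestions reach min_precision on validation data."""
    for cutoff in sorted({conf for conf, _ in validation}):
        shown = [correct for conf, correct in validation if conf >= cutoff]
        if sum(shown) / len(shown) >= min_precision:
            return cutoff
    return None  # no cutoff meets the bar: don't ship suggestions yet


def maybe_suggest(s: Suggestion, threshold: float) -> Optional[Suggestion]:
    """Show the suggestion only when the model clears the threshold; otherwise show nothing."""
    return s if s.confidence >= threshold else None


# Hypothetical validation results: (model confidence, was the suggested answer correct?)
VALIDATION = [(0.95, True), (0.90, True), (0.80, True), (0.70, False), (0.60, True), (0.50, False)]
threshold = pick_threshold(VALIDATION, min_precision=0.9)  # 0.8 for this data
```

Because precision does not always rise smoothly with the cutoff, the loop checks every candidate instead of assuming it does. Re-run the selection whenever the model is retrained.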

In both cases, you shipped an AI feature. But only one of them actually delivers value and improves over time.

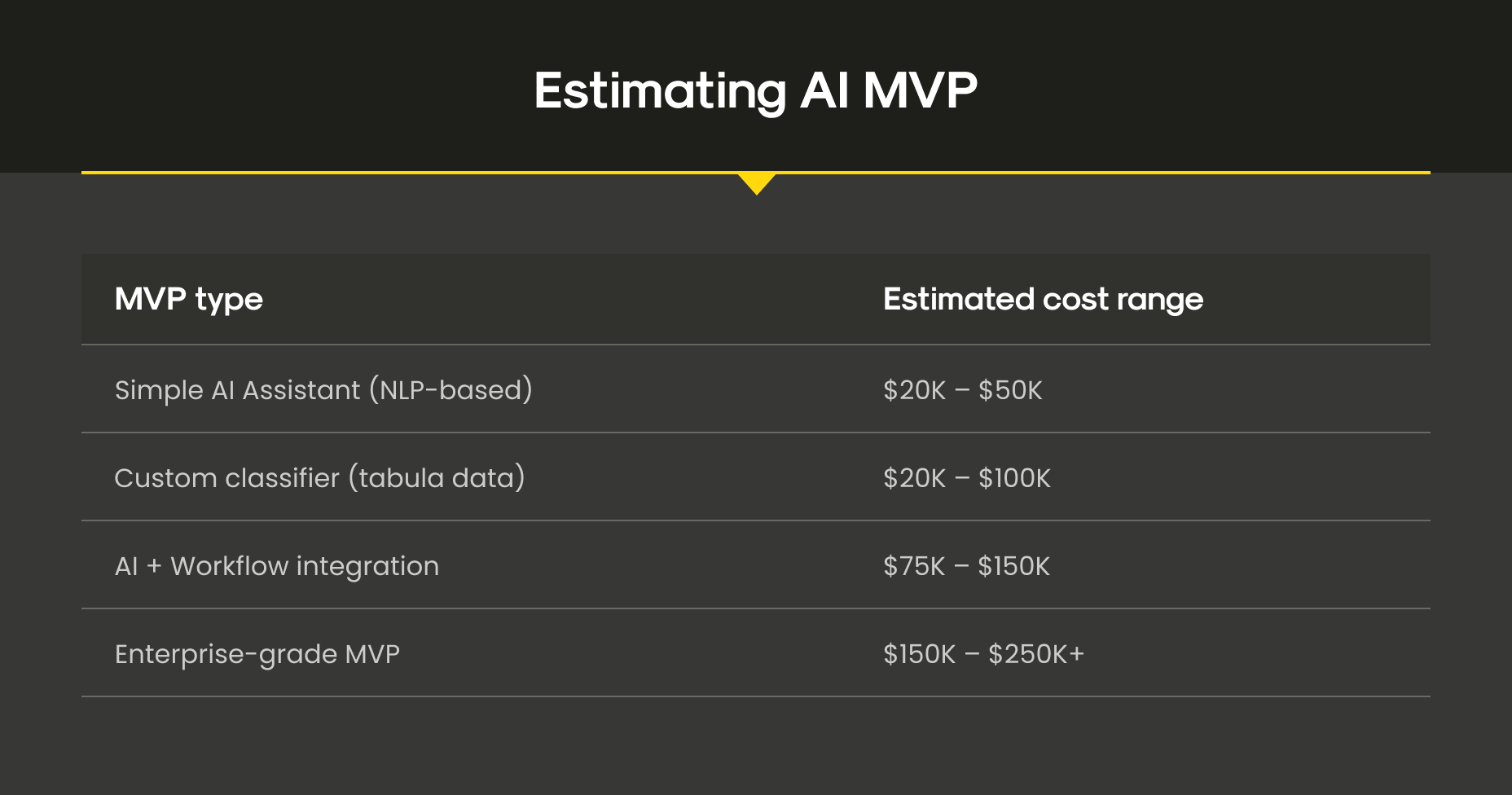

How much does it cost to develop an AI MVP?

To put it simply: it depends. But here’s what we can tell you: the cost of building an AI MVP often exceeds initial estimates, especially when teams underestimate the invisible work that makes AI usable in the real world.

Here’s a breakdown of what typically influences the cost of an AI MVP:

1. Scope and use case complexity

- A simple NLP chatbot using off-the-shelf APIs (like OpenAI or Google) might cost $20K-$50K.

- A custom vision model, fraud detector, or medical classifier could range from $75K to $200K+, depending on the data, validation needs, and domain specificity.

2. Data availability and readiness

You’ll spend less if your data is:

- Clean

- Labeled

- Centralized

But if it’s scattered across departments, unstructured, or lacking labels, expect to allocate 30-50% of your budget to data wrangling and preprocessing.

3. Talent and team composition

You’ll likely need:

- A data scientist (or ML engineer)

- A backend developer

- A product manager (to align AI with user needs)

- Optionally, a UX designer or MLOps engineer

Hiring a dedicated internal team can cost $250K+ annually. Partnering with a specialized AI vendor allows you to move faster without full-time hiring overhead.

4. Model training and infrastructure

- Leveraging pre-trained models via APIs can reduce cost dramatically.

- Training custom models or deploying on private cloud infrastructure adds costs in compute (e.g., GPUs), storage, and DevOps hours.

For MVPs, pay-as-you-go cloud platforms such as AWS, Azure, or Google Cloud’s Vertex AI are budget-friendly options. However, be careful: inference and training costs can creep up if usage spikes.
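To see how usage spikes translate into spend, here is a back-of-the-envelope estimator for token-priced model APIs. The per-1,000-token prices below are placeholders, not any provider’s actual rates; substitute your provider’s current price sheet. Self-hosted models billed by GPU hour would need a different formula.

```python
def monthly_inference_cost(
    requests_per_day: float,
    input_tokens: int,
    output_tokens: int,
    usd_per_1k_input: float,
    usd_per_1k_output: float,
    days: int = 30,
) -> float:
    """Estimated monthly spend when an API bills separately for input and output tokens."""
    per_request = (input_tokens * usd_per_1k_input + output_tokens * usd_per_1k_output) / 1000
    return requests_per_day * days * per_request


# Placeholder prices; replace with your provider's current rates.
PRICES = {"usd_per_1k_input": 0.002, "usd_per_1k_output": 0.006}

normal = monthly_inference_cost(2_000, 800, 200, **PRICES)  # about $168
spike = monthly_inference_cost(20_000, 800, 200, **PRICES)  # about $1,680
```

Cost scales linearly with traffic, so a tenfold spike means a tenfold bill; a budget alert tied to an estimate like this is cheap insurance.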

5. Ongoing maintenance

Even MVPs require:

- Performance monitoring

- Model retraining

- Error tracking

- User support

Neglecting post-launch support is a classic MVP trap. We recommend budgeting 10–20% of the initial build cost annually for maintenance, and more if your model relies on real-time data.
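Applied to the build-cost ranges above, this maintenance guideline gives a quick total-cost-of-ownership range. A minimal sketch; the function name is hypothetical, and the defaults simply encode the 10 to 20% rule of thumb (raise `maint_high` for models that depend on real-time data).

```python
def total_cost_range(
    build_cost: float,
    years: int,
    maint_low: float = 0.10,
    maint_high: float = 0.20,
) -> tuple[float, float]:
    """Initial build plus `years` of maintenance, each year budgeted at maint_low..maint_high of build cost."""
    if build_cost < 0 or years < 0:
        raise ValueError("build_cost and years must be non-negative")
    return (build_cost * (1 + maint_low * years), build_cost * (1 + maint_high * years))


# A $50K chatbot MVP (the top of the off-the-shelf range above) kept running for three years:
low, high = total_cost_range(50_000, years=3)  # roughly $65K to $80K
```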

How Mitrix can help

At Mitrix, we offer AI/ML and generative AI development services to help businesses move faster, work smarter, and deliver more value. We help businesses go beyond proof-of-concept AI, focusing on AI solutions that aren’t just viable but valuable, trusted, and adopted.

We bring:

- Cross-functional teams that understand both AI and product strategy

- Proven playbooks for integrating feedback, measuring success, and iterating fast

- Expertise in MLOps, monitoring, and long-term model sustainability

- User-centered design approaches to ensure explainability and adoption

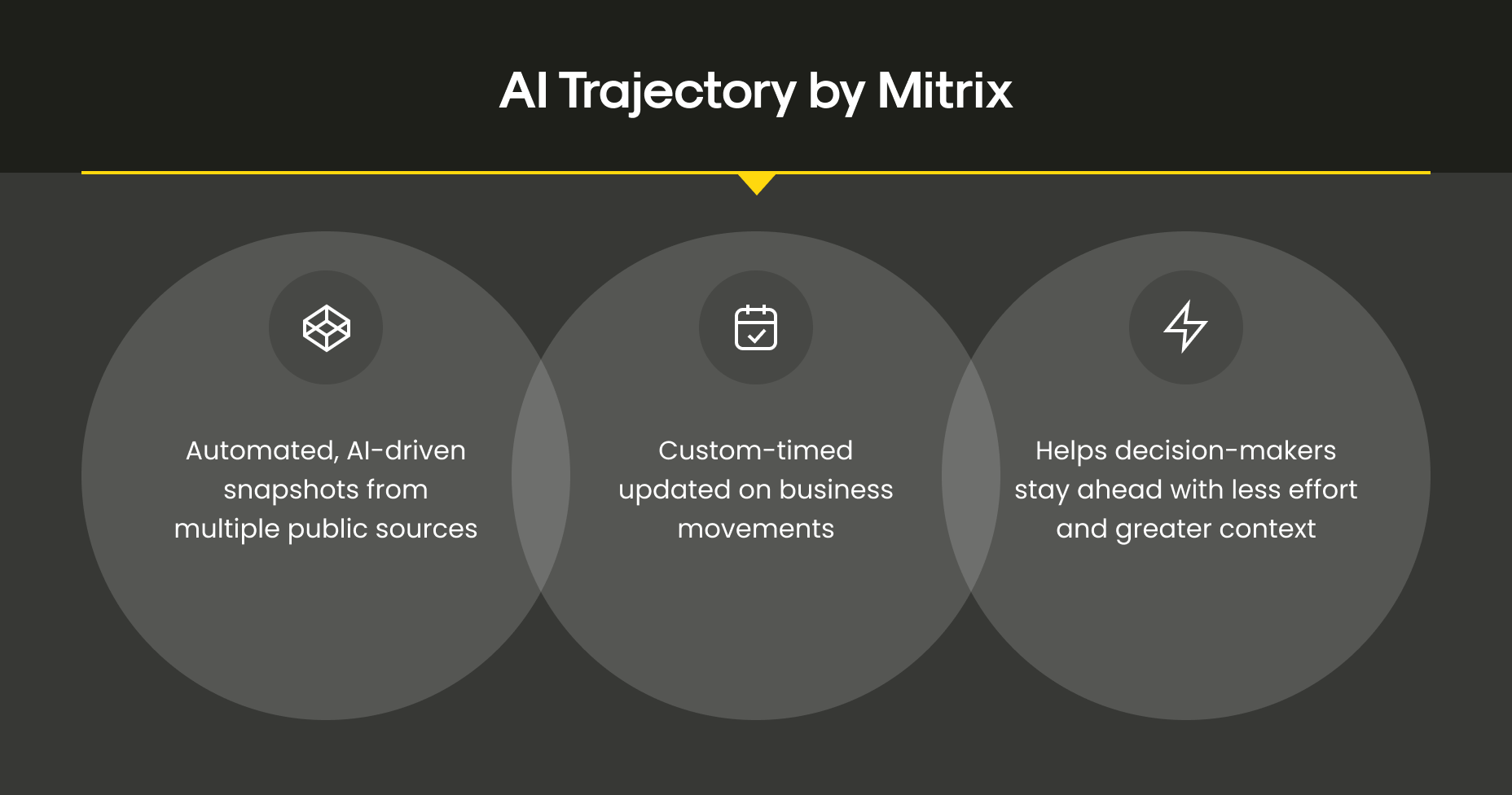

To build AI features that are not only viable but genuinely valuable, having the right tooling is essential. You don’t just need good models: you need infrastructure that supports versioning, visibility, and seamless integration. That’s where tools like Trajectory AI come in.

Developed internally by the Mitrix team, Trajectory AI solidifies your MVP architecture with three essential components:

- MCP Server. Easily embed intelligence into your existing AI workflows, making your systems smarter without rewriting everything from scratch.

- API Layer. Pull real-time snapshots and insights directly into your stack, so your models can stay adaptive and responsive to new inputs.

- Web Frontend. Use a lightweight dashboard to visualize change logs, track decision paths, and monitor behavior over time, all in one place.

With Trajectory AI, your MVP isn’t just a prototype: it’s a stable, scalable foundation for continuous improvement. Whether you’re building your first AI feature or scaling an existing one, we’ll help you avoid the MVP trap and build AI that earns its keep. Let’s talk!

Wrapping up

In the world of AI, building something that works is no longer enough. The hardest part isn’t just model performance: it’s ensuring that performance translates to real value, in the hands of real users. By reframing MVPs as minimum valuable products, companies and startup teams can stop launching gimmicks and start delivering game-changing features.

That shift in mindset changes everything. Instead of rushing to demo a flashy proof of concept, teams focus on solving actual problems, integrating with real workflows, and delivering outcomes users care about. It’s not about showcasing AI for the sake of buzz; it’s about making sure every feature deployed is usable, maintainable, and tied to business goals.