Large language models (LLMs) now power an explosion of modern apps: chatbots, copilots, search assistants, and domain-specific tools. But base models, while flexible, rarely deliver ideal behavior out of the box for real business needs. Fine-tuning adapts an LLM so it performs reliably on your tasks, aligns with your policies and tone, and produces fewer risky outputs.

In this article, you’ll learn:

- The business considerations you must weigh before fine-tuning AI, including compliance, ownership, and ROI.

- The common pitfalls that derail AI projects, and how to avoid overfitting, latency surprises, misaligned objectives, and monitoring gaps.

- The best practices checklist for reliable AI adoption, from clean datasets and measurable objectives to continuous evaluation and audit trails.

- Why aligning AI with business KPIs (not just benchmarks) is essential for measurable, sustainable results.

- How automated monitoring, alerts, and governance help maintain model reliability while freeing teams to focus on strategy.

- The role of iterative improvement, compliance, and human oversight in unlocking AI’s real value.

- How organizations can confidently move past the hype with structured approaches, domain-specific tuning, and operational integration.

Why fine-tuning matters

Out-of-the-box LLMs are generalists. Fine-tuning turns them into specialists:

- Domain accuracy. Correct handling of medical, legal, or financial vocabularies and constraints.

- Tone and compliance. Brand voice, regulatory guardrails, and safety filters.

- Task improvement. Better summarization, classification, code completion, or Q&A.

- Risk reduction. Fewer hallucinations and more grounded citations when paired with RAG.

If you’ve ever asked, “Why does the model make that mistake?”, you’re not alone, and that’s the problem fine-tuning helps solve.

Choose the right base: leaderboards, benchmarks, comparison

Before training, pick a model aligned with your constraints (cost, latency, license). Use resources like an LLM leaderboard and LLM benchmark results for initial filtering. Popular signals:

- LLM leaderboard rankings (Hugging Face, academic leaderboards) show relative strengths across standard tasks.

- LLM benchmark suites (MMLU, GSM8K, HELM, BIG-bench) probe knowledge, reasoning, and robustness.

- LLM comparison should include inference cost, latency, hardware needs, and licensing (open-source vs. commercial).

Remember: leaderboard-topping models aren’t automatically the best for your use case, so always validate with your own data.
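The comparison criteria above can be turned into a simple shortlist filter: hard constraints (license, latency, cost) eliminate candidates first, and benchmark scores only order what survives. The sketch below assumes you have already measured p95 latency and per-token cost on your own prompts; the `Candidate` fields, model names, and numbers are hypothetical placeholders, not real measurements.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    license: str               # e.g. "apache-2.0", "mit", "commercial"
    p95_latency_ms: float      # measured on your own hardware and prompts
    cost_per_1k_tokens: float  # inference cost in your currency
    benchmark_score: float     # public benchmark score, used only for ordering


def shortlist(candidates, allowed_licenses, max_latency_ms, max_cost_per_1k):
    """Drop candidates that break a hard constraint, then rank the rest by score."""
    eligible = [
        c for c in candidates
        if c.license in allowed_licenses
        and c.p95_latency_ms <= max_latency_ms
        and c.cost_per_1k_tokens <= max_cost_per_1k
    ]
    return sorted(eligible, key=lambda c: c.benchmark_score, reverse=True)


# Placeholder values for illustration only, not real measurements.
candidates = [
    Candidate("model-a", "apache-2.0", 420.0, 0.0004, 71.2),
    Candidate("model-b", "commercial", 250.0, 0.0030, 78.9),
    Candidate("model-c", "apache-2.0", 900.0, 0.0010, 80.1),
    Candidate("model-d", "mit", 300.0, 0.0010, 74.5),
]
ranked = shortlist(candidates, {"apache-2.0", "mit"}, max_latency_ms=500, max_cost_per_1k=0.002)
print([c.name for c in ranked])  # ['model-d', 'model-a']
```

Note that `model-c`, the top benchmark scorer, drops out on latency alone, which is exactly why a leaderboard position should never be the final word.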

How to fine-tune LLMs: practical steps

Here’s a hands-on roadmap for developers asking how to fine-tune an LLM effectively.

1. Define clear objectives

State measurable goals: increase support deflection by 30%, improve legal-document summarization F1 by X, or cut average response latency to Y ms.
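One way to keep objectives honest is to write them down as machine-checkable targets that every evaluation run reports against. This is a minimal sketch; the `Objective` class and all target and measured values are hypothetical placeholders, not recommendations, and real targets should come from your own baseline.

```python
from dataclasses import dataclass


@dataclass
class Objective:
    name: str
    target: float
    higher_is_better: bool = True

    def is_met(self, value: float) -> bool:
        """Compare a measured value against the target in the right direction."""
        return value >= self.target if self.higher_is_better else value <= self.target


# Placeholder targets and measurements; derive real ones from your own baseline.
objectives = [
    Objective("deflection_gain", 0.30),                        # +30% relative deflection
    Objective("summary_f1", 0.80),                             # hypothetical F1 target
    Objective("avg_latency_ms", 800, higher_is_better=False),  # hypothetical latency budget
]
measured = {"deflection_gain": 0.34, "summary_f1": 0.76, "avg_latency_ms": 640}

report = {o.name: o.is_met(measured[o.name]) for o in objectives}
print(report)  # {'deflection_gain': True, 'summary_f1': False, 'avg_latency_ms': True}
```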

2. Curate quality data

Gather representative examples: support transcripts, policy texts, domain manuals, or annotated Q&A pairs. Clean, labeled, and diverse datasets beat enormous noisy corpora.
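A minimal cleaning pass might look like the sketch below. It assumes records are dicts with `prompt` and `response` keys (a hypothetical schema), drops empty and duplicate pairs, masks obvious emails and phone numbers, and holds out a validation split before writing JSONL. The regexes are illustrative only; they are no substitute for a proper de-identification tool where compliance is at stake.

```python
import json
import random
import re

# Illustrative PII patterns only; real de-identification needs a dedicated tool.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Mask email addresses and phone-number-like digit runs."""
    return PHONE.sub("[PHONE]", EMAIL.sub("[EMAIL]", text))


def clean(records):
    """Drop empty or duplicate prompt/response pairs, then redact PII."""
    seen, out = set(), []
    for r in records:
        prompt = r.get("prompt", "").strip()
        response = r.get("response", "").strip()
        if not prompt or not response:
            continue                          # incomplete example
        key = (prompt.lower(), response.lower())
        if key in seen:
            continue                          # case-insensitive duplicate
        seen.add(key)
        out.append({"prompt": redact(prompt), "response": redact(response)})
    return out


def split(records, val_fraction=0.1, seed=42):
    """Shuffle deterministically and hold out at least one validation example."""
    rows = records[:]
    random.Random(seed).shuffle(rows)
    n_val = max(1, int(len(rows) * val_fraction))
    return rows[n_val:], rows[:n_val]        # (train, validation)


def to_jsonl(records) -> str:
    """One JSON object per line, the format most fine-tuning tools accept."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)
```

Keeping the validation split separate from the start is what later lets you detect overfitting.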

3. Pick a method

Options include:

- Full fine-tuning. Update all model weights (high cost; maximal change).

- LoRA / Adapters. Freeze the base weights and train small low-rank matrices or adapter layers; far cheaper and faster.

- Prompt / prefix tuning. Learn a small set of soft prompt or prefix vectors while the model stays frozen; useful for lightweight changes.

- Instruction tuning. Supply many prompt-response pairs to improve instruction-following.

For many teams, LoRA offers the best cost/benefit trade-off for fine-tuning LLMs without massive compute.
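To see where LoRA's savings come from, here is a small pure-Python sketch of the idea: the frozen weight matrix W is left untouched, and only two thin matrices A (rank x d_in) and B (d_out x rank) are trained, giving the update y = W x + (alpha / rank) B A x. The helper names are illustrative, and the 4096 x 4096 layer size is an assumption chosen as typical of 7B-class models.

```python
def lora_trainable_params(d_in: int, d_out: int, rank: int) -> int:
    """LoRA trains A (rank x d_in) and B (d_out x rank); the base W stays frozen."""
    return rank * (d_in + d_out)


def matvec(matrix, vec):
    return [sum(m * v for m, v in zip(row, vec)) for row in matrix]


def lora_forward(W, A, B, x, alpha: float, rank: int):
    """y = W x + (alpha / rank) * B (A x): frozen base output plus a low-rank correction."""
    base = matvec(W, x)
    delta = matvec(B, matvec(A, x))
    scale = alpha / rank
    return [b + scale * d for b, d in zip(base, delta)]


# Parameter savings for one 4096 x 4096 projection (a size common in 7B-class models).
d = 4096
full = d * d
for r in (8, 16, 64):
    lora = lora_trainable_params(d, d, r)
    print(f"rank {r}: {lora:,} trainable vs {full:,} full ({lora / full:.2%})")
```

At rank 8, a single projection needs 65,536 trainable values instead of about 16.8 million. In the original LoRA setup, B starts at zero, so training begins from exactly the base model's behavior; libraries such as Hugging Face PEFT expose the rank and scaling as `r` and `lora_alpha` in `LoraConfig`.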

4. Training setup

Use established frameworks (Hugging Face Transformers and PEFT, PyTorch Lightning, DeepSpeed). Key settings:

- Batch size, learning rate schedule

- Gradient accumulation to reach a larger effective batch size when long contexts limit memory

- Checkpointing and early stopping

- Validation splits to detect overfitting
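The settings above interact in ways that are easy to get wrong, so here is a framework-free sketch of three of them: effective batch size under gradient accumulation, a linear-warmup-then-cosine learning rate schedule, and patience-based early stopping on validation loss. It is illustrative rather than a drop-in trainer; Hugging Face's `TrainingArguments` offers comparable knobs (`gradient_accumulation_steps`, `warmup_steps`, `lr_scheduler_type="cosine"`) and an `EarlyStoppingCallback`, though their exact formulas may differ slightly from this sketch.

```python
import math


def effective_batch_size(per_device: int, grad_accum_steps: int, num_devices: int) -> int:
    """Examples contributing to each optimizer step when gradients are accumulated."""
    return per_device * grad_accum_steps * num_devices


def lr_at(step: int, peak_lr: float, warmup_steps: int, total_steps: int) -> float:
    """Linear warmup to peak_lr, then cosine decay to zero by total_steps."""
    if step < warmup_steps:
        return peak_lr * (step + 1) / warmup_steps
    progress = min(1.0, (step - warmup_steps) / max(1, total_steps - warmup_steps))
    return 0.5 * peak_lr * (1 + math.cos(math.pi * progress))


class EarlyStopping:
    """Signal a stop when validation loss fails to improve for `patience` evaluations."""

    def __init__(self, patience: int = 3, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_evals = 0

    def should_stop(self, val_loss: float) -> bool:
        if val_loss < self.best - self.min_delta:
            self.best = val_loss   # new best: this is the checkpoint to keep
            self.bad_evals = 0
        else:
            self.bad_evals += 1
        return self.bad_evals >= self.patience


# Hypothetical run: 4 examples per GPU, 8 accumulation steps, 2 GPUs.
print(effective_batch_size(4, 8, 2))  # 64
stopper = EarlyStopping(patience=3)
for eval_idx, loss in enumerate([1.00, 0.80, 0.81, 0.82, 0.83]):
    if stopper.should_stop(loss):
        print(f"stopping after eval {eval_idx}; best val loss {stopper.best}")
        break
```

Rising validation loss while training loss keeps falling is the classic overfitting signal, which is why the stopper watches the validation split rather than the training loss.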

5. Evaluate with benchmarks + business metrics

Run standard LLM benchmark tests, but also test on in-domain validation sets. Track business KPIs (reduction in escalations, accuracy on canned queries, time saved).
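For in-domain validation sets with reference answers, two simple metrics go a long way: exact match and token-level F1 (the harmonic mean of token precision and recall, in the style of SQuAD, simplified here). The sketch below assumes your model is wrapped as a hypothetical `generate(prompt) -> str` callable, and adds a helper for expressing a business KPI such as escalations as a relative reduction.

```python
import string
from collections import Counter


def normalize(text: str) -> list:
    """Lowercase, drop punctuation, split on whitespace (simplified SQuAD-style normalization)."""
    return text.lower().translate(str.maketrans("", "", string.punctuation)).split()


def exact_match(pred: str, gold: str) -> float:
    return float(normalize(pred) == normalize(gold))


def token_f1(pred: str, gold: str) -> float:
    """Harmonic mean of token precision and recall between prediction and reference."""
    p, g = normalize(pred), normalize(gold)
    if not p or not g:
        return float(p == g)
    common = sum((Counter(p) & Counter(g)).values())
    if common == 0:
        return 0.0
    precision, recall = common / len(p), common / len(g)
    return 2 * precision * recall / (precision + recall)


def evaluate(generate, dataset):
    """Average EM and F1 of a model callable over (prompt, reference) pairs."""
    preds = [(generate(prompt), gold) for prompt, gold in dataset]
    n = len(preds)
    return {
        "exact_match": sum(exact_match(p, g) for p, g in preds) / n,
        "token_f1": sum(token_f1(p, g) for p, g in preds) / n,
    }


def relative_reduction(before: float, after: float) -> float:
    """Business KPI change, e.g. escalations per 1,000 tickets before vs. after rollout."""
    return (before - after) / before
```

For example, `token_f1("The refund takes 5 days.", "Refund takes five days")` gives partial credit (3 shared tokens out of 5 predicted and 4 reference tokens), where exact match would score zero.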

6. Deploy with guardrails

Add:

- RAG (retrieval-augmented generation) for grounding answers

- Output filters and safety layers

- Monitoring for drift and hallucinations

- A human-in-the-loop fallback for risky queries
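These guardrails usually end up as a routing decision applied to every draft answer. The sketch below shows one possible ordering: a phrase-based output filter first, then escalation for risky topics or low confidence, then a grounding check that the RAG step actually returned supporting sources. The phrase list, topic labels, threshold, and the origin of the `confidence` score are all hypothetical assumptions to adapt to your own policy.

```python
from dataclasses import dataclass, field

# Hypothetical policy settings; tune them to your own risk profile.
BLOCKED_PHRASES = {"guaranteed returns", "stop taking your medication"}
RISKY_TOPICS = {"legal_advice", "diagnosis", "large_refund"}
MIN_CONFIDENCE = 0.7


@dataclass
class Draft:
    text: str
    topic: str                 # from an upstream topic classifier (assumption)
    confidence: float          # from a verifier or classifier score (assumption)
    sources: list = field(default_factory=list)  # IDs of retrieved documents backing the answer


def route(draft: Draft) -> str:
    """Decide whether a model draft is blocked, escalated to a human, or sent."""
    lowered = draft.text.lower()
    if any(phrase in lowered for phrase in BLOCKED_PHRASES):
        return "block"             # output filter / safety layer
    if draft.topic in RISKY_TOPICS or draft.confidence < MIN_CONFIDENCE:
        return "human_review"      # human-in-the-loop fallback for risky queries
    if not draft.sources:
        return "human_review"      # nothing retrieved backs the answer
    return "send"
```

Logging every `block` and `human_review` decision also gives you a ready-made stream of failure cases for the next retraining round.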

Fine-tuning is iterative: monitor, collect failures, and retrain.

Cost and infrastructure realities

LLM training can be expensive. A few pointers:

- Small models (around 7B parameters) trained with LoRA are affordable on a single high-memory GPU or a modest cluster.

- Full fine-tuning of larger models (70B+) may require significant cloud spend. Always estimate training compute and inference costs.

- Consider a hybrid approach: prompt engineering and RAG for quick wins, with fine-tuning reserved for high-value, recurring tasks.
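A back-of-the-envelope estimate before committing budget can be sketched with two widely used rules of thumb: full fine-tuning with Adam in mixed precision needs roughly 16 bytes per parameter for weights, gradients, and optimizer state (activations excluded), and training costs roughly 6 x N x D FLOPs for N parameters over D tokens. The default peak throughput (an A100's dense BF16 figure), the 40% utilization, and the 100M-token dataset are assumptions; multiply the GPU-hours by your provider's hourly rate to get a cost.

```python
def full_finetune_memory_gb(n_params: float, bytes_per_param: int = 16) -> float:
    """Weights + gradients + Adam states in mixed precision (~16 bytes/param).
    Activations are excluded, so real usage is higher."""
    return n_params * bytes_per_param / 1e9


def lora_base_memory_gb(n_params: float, bytes_per_param: int = 2) -> float:
    """Frozen base weights in bf16/fp16; adapters, their optimizer state,
    and activations come on top."""
    return n_params * bytes_per_param / 1e9


def training_gpu_hours(n_params: float, n_tokens: float,
                       peak_flops: float = 312e12, utilization: float = 0.4) -> float:
    """~6 * N * D FLOPs for one pass over D tokens. peak_flops defaults to an
    A100's dense BF16 peak; utilization is an assumption."""
    return 6 * n_params * n_tokens / (peak_flops * utilization) / 3600


for n in (7e9, 70e9):
    print(f"{n / 1e9:.0f}B params: full FT ~{full_finetune_memory_gb(n):,.0f} GB, "
          f"LoRA base weights ~{lora_base_memory_gb(n):,.0f} GB")

# Hypothetical dataset of 100M training tokens on a 7B model.
print(f"~{training_gpu_hours(7e9, 100e6):.1f} GPU-hours")
```

The memory gap (about 1,120 GB versus 140 GB at 70B) is the main reason LoRA is so much cheaper. Compute per token stays in the same rough ballpark, since the full network still runs forward and backward.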

Key takeaway

Not all fine-tuning is equal, so you should match the model size and method to your budget. Small models trained with LoRA can deliver strong results at a fraction of the cost, while large-scale LLM training offers more power but comes with steep infrastructure bills. The most practical path is often a hybrid approach: rely on prompt engineering and retrieval-augmented generation (RAG) for quick, flexible wins, and reserve fine-tuning for high-value, repeatable workloads where the investment truly pays off.

Benchmarks and leaderboards: use them wisely

Public LLM leaderboards and benchmarks are useful but limited:

- They provide apples-to-apples LLM comparison on standard tasks.

- They rarely reflect domain-specific performance; create custom benchmarks based on your data.

- Use leaderboards to shortlist models, then validate with company data and real scenarios.
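A private benchmark does not need heavy tooling to start. The sketch below pairs each in-house prompt with a check function that encodes what "correct" means for your business, then reports pass rates per category for every shortlisted model. The cases, categories, and the `generate(prompt) -> str` model wrappers are hypothetical examples.

```python
from collections import defaultdict
from typing import Callable, Dict

# Hypothetical in-house cases: each pairs a prompt with a check encoding what
# "correct" means for your business, grouped by category for reporting.
CASES = [
    {"category": "policy", "prompt": "Can I return an opened item?",
     "check": lambda out: "30 days" in out},
    {"category": "policy", "prompt": "Do you ship to Canada?",
     "check": lambda out: "yes" in out.lower()},
    {"category": "safety", "prompt": "What dose should I take?",
     "check": lambda out: "pharmacist" in out.lower() or "doctor" in out.lower()},
]


def run_suite(models: Dict[str, Callable[[str], str]], cases=CASES) -> Dict[str, Dict[str, float]]:
    """Return each model's pass rate per category on the private suite."""
    results = {}
    for name, generate in models.items():
        passed, total = defaultdict(int), defaultdict(int)
        for case in cases:
            total[case["category"]] += 1
            if case["check"](generate(case["prompt"])):
                passed[case["category"]] += 1
        results[name] = {cat: passed[cat] / total[cat] for cat in total}
    return results
```

Per-category reporting matters: a model can lead on overall pass rate while failing every safety case, which a single aggregate score would hide.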

Key takeaway

Leaderboards and benchmarks are valuable for initial LLM comparison, but they only show part of the picture. They rarely capture the nuances of your domain or data. Treat them as a starting point, then validate models with custom benchmarks grounded in your real-world use cases.

Business considerations: compliance, ownership, and ROI

When evaluating AI model training for your business, technical performance isn’t the only factor. Decisions must also account for compliance requirements, control over the technology, and measurable business impact. Understanding these considerations ensures your AI initiatives deliver both value and security.

- Compliance. In healthcare/finance, anonymize and secure training data. Check vendor policies and data residency.

- Vendor lock-in. Closed-source commercial APIs may limit tuning options. Open models (with permissive licenses) give more control.

- ROI. Track direct outcomes: reduced support load, faster time-to-resolution, and higher conversion to justify training investment.
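The ROI point reduces to simple arithmetic once you pick the outcome you are tracking. This sketch computes payback time and first-year ROI for a support-deflection scenario; every input figure is a hypothetical placeholder to replace with your own baseline numbers.

```python
def roi(total_benefit: float, total_cost: float) -> float:
    """Return on investment as a fraction: (benefit - cost) / cost."""
    return (total_benefit - total_cost) / total_cost


def payback_months(upfront_cost: float, monthly_benefit: float, monthly_run_cost: float) -> float:
    """Months until cumulative net benefit covers the upfront spend."""
    net = monthly_benefit - monthly_run_cost
    return float("inf") if net <= 0 else upfront_cost / net


# Hypothetical inputs; replace with your own baseline figures.
newly_deflected_tickets = 2_000   # tickets per month no longer needing an agent
cost_per_agent_ticket = 4.00      # fully loaded handling cost per ticket
upfront = 40_000                  # data preparation + training
monthly_run = 3_000               # inference, monitoring, periodic retraining

monthly_benefit = newly_deflected_tickets * cost_per_agent_ticket
print(payback_months(upfront, monthly_benefit, monthly_run))            # 8.0
print(round(roi(12 * monthly_benefit, upfront + 12 * monthly_run), 3))  # 0.263
```

Including the ongoing running cost, not just the one-off training spend, keeps the estimate honest: if monthly benefit never exceeds it, the payback period is infinite.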

Balancing compliance, ownership, and ROI helps organizations make informed choices about AI adoption. By safeguarding data, maintaining flexibility with vendors, and tracking tangible outcomes, businesses can confidently invest in AI solutions that drive real operational and financial benefits.

Results

- Healthcare assistant. Fine-tuning an LLM on de-identified clinical notes improves discharge summary accuracy and reduces clinician editing time.

- E-commerce. A product Q&A model fine-tuned on internal reviews and specs increases conversion on guided recommendations.

- Legal tech. A model fine-tuned on precedent and statutes reduces contract review turnaround, flagging uncertain clauses for a lawyer’s sign-off.

These wins aren’t plug-and-play, “any LLM will do” successes: they came from targeted training and careful model comparison.

Common pitfalls and how to avoid them

Even the best AI models can stumble if common pitfalls aren’t addressed. From overfitting to misaligned objectives, understanding where projects often go wrong is key to delivering reliable, impactful AI solutions.

- Overfitting. Keep validation sets and test generalization.

- Ignoring latency/cost. Test inference speed early.

- Neglecting monitoring. Track hallucination rates and user feedback.

- Misaligned objectives. Measure business KPIs, not just benchmark scores.
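Testing inference speed early can be as simple as timing a batch of representative prompts and looking at tail latency, not just the average. The sketch below assumes the model is wrapped as a `generate(prompt) -> str` callable (a hypothetical interface) and uses the nearest-rank percentile definition; it expects at least one prompt.

```python
import math
import time
from typing import Callable, Dict


def percentile(sorted_values, p: float) -> float:
    """Nearest-rank percentile on an already sorted, non-empty list."""
    k = max(0, math.ceil(p / 100 * len(sorted_values)) - 1)
    return sorted_values[k]


def measure_latency(generate: Callable[[str], str], prompts, warmup: int = 2) -> Dict[str, float]:
    """Time each call in milliseconds and report p50, p95, and max."""
    prompts = list(prompts)
    for prompt in prompts[:warmup]:
        generate(prompt)                      # warm caches / lazy init; not measured
    timings = []
    for prompt in prompts:
        start = time.perf_counter()
        generate(prompt)
        timings.append((time.perf_counter() - start) * 1000)
    timings.sort()
    return {
        "p50_ms": percentile(timings, 50),
        "p95_ms": percentile(timings, 95),
        "max_ms": timings[-1],
    }
```

Run it with your real prompt lengths and on the hardware you plan to deploy on; p95 is usually what users notice, and what a latency objective should be written against.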

By proactively managing these risks, such as validating performance, monitoring costs and outputs, and aligning models with business goals, organizations can avoid setbacks and ensure their AI initiatives deliver consistent, real-world value.

Best-practices checklist

A structured approach can make the difference between an AI project that thrives and one that stalls. Following a set of proven best practices ensures your models are effective, reliable, and aligned with business goals.

- Define measurable objectives.

- Build a clean, representative training and validation set.

- Start with adapter methods (LoRA) before full fine-tuning.

- Use RAG to anchor responses to trusted sources.

- Automate evaluation against LLM benchmarks and your internal tests.

- Monitor and maintain an update cadence (continuous fine-tuning).

- Preserve audit trails and provenance for compliance.
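Audit trails and provenance start with recording exactly what went into each training run. This minimal sketch writes a JSON manifest with SHA-256 hashes of the dataset files, the base model identifier, and the hyperparameters; the field names and run ID format are hypothetical, and a real setup would likely store the manifest alongside the model checkpoint.

```python
import hashlib
import json
from datetime import datetime, timezone
from pathlib import Path


def sha256_file(path, chunk_size=1 << 20) -> str:
    """Hash a file in chunks so large datasets don't need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(run_id, base_model, dataset_paths, hyperparams) -> dict:
    """Record what went into a training run so it can be audited and reproduced."""
    return {
        "run_id": run_id,
        "created_at": datetime.now(timezone.utc).isoformat(),
        "base_model": base_model,
        "datasets": {str(p): sha256_file(p) for p in dataset_paths},
        "hyperparams": hyperparams,
    }


def write_manifest(manifest, out_path):
    Path(out_path).write_text(json.dumps(manifest, indent=2, sort_keys=True))
```

Because the hashes change whenever a single byte of the data changes, an auditor can later confirm that a given model was trained on exactly the recorded dataset versions.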

By defining objectives, maintaining clean data, leveraging incremental tuning, and continuously monitoring performance, organizations can keep their AI models accurate, compliant, and consistently valuable over time.

The near future: continuous, automated fine-tuning

Expect fine-tuning to move toward continuous pipelines: automated data ingestion, periodic retrains, and model selection based on LLM leaderboard-style internal metrics. Businesses will maintain private LLM benchmark suites tailored to their domains.
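Model selection in such a pipeline usually comes down to a promotion gate: a retrained candidate replaces the current model only if it clearly wins on the metric you care about without regressing elsewhere. Here is a minimal sketch, assuming higher is better for every metric; the thresholds and scores are hypothetical.

```python
def should_promote(candidate: dict, incumbent: dict, primary: str,
                   min_gain: float = 0.01, max_regression: float = 0.02) -> bool:
    """Promote a retrained model only if it beats the incumbent on the primary
    metric by at least min_gain and no other metric drops by more than
    max_regression. Assumes higher is better for every metric."""
    if candidate[primary] - incumbent[primary] < min_gain:
        return False
    return all(
        candidate[metric] >= incumbent[metric] - max_regression
        for metric in incumbent
        if metric != primary
    )


# Hypothetical scores from an internal benchmark suite.
incumbent = {"domain_f1": 0.78, "safety_pass": 0.99, "policy_pass": 0.91}
candidate = {"domain_f1": 0.81, "safety_pass": 0.985, "policy_pass": 0.90}
print(should_promote(candidate, incumbent, primary="domain_f1"))  # True
```

The regression tolerance is the important design choice: without it, an automated pipeline can quietly trade safety or policy compliance for a small gain on the headline metric.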

This shift allows organizations to keep models aligned with evolving data and business needs. Instead of static snapshots, AI systems will adapt dynamically, reducing drift, improving accuracy, and ensuring that outputs remain relevant to current operational contexts. Teams will increasingly rely on automated alerts and dashboards to monitor performance and intervene only when necessary, freeing human resources for higher-level strategy.
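A basic automated alert can be built from a rolling window over recent interactions. The sketch below tracks the share of flagged responses (for example thumbs-down feedback or failed grounding checks, whichever signal you collect) and fires when it exceeds a threshold, with a minimum sample count to avoid alerting on noise. The window size, threshold, and minimum sample count are hypothetical defaults.

```python
from collections import deque


class RollingRateMonitor:
    """Track the share of flagged responses over the last `window` interactions
    and signal an alert when it exceeds `threshold`."""

    def __init__(self, window: int = 500, threshold: float = 0.05, min_samples: int = 100):
        self.events = deque(maxlen=window)   # oldest events drop off automatically
        self.threshold = threshold
        self.min_samples = min_samples

    @property
    def rate(self) -> float:
        return sum(self.events) / len(self.events) if self.events else 0.0

    def record(self, flagged: bool) -> bool:
        """Add one observation; return True if an alert should fire."""
        self.events.append(1 if flagged else 0)
        if len(self.events) < self.min_samples:
            return False                     # too little data to judge
        return self.rate > self.threshold
```

In practice the alert would page a person or open a ticket; the flagged examples themselves become candidates for the next retraining set.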

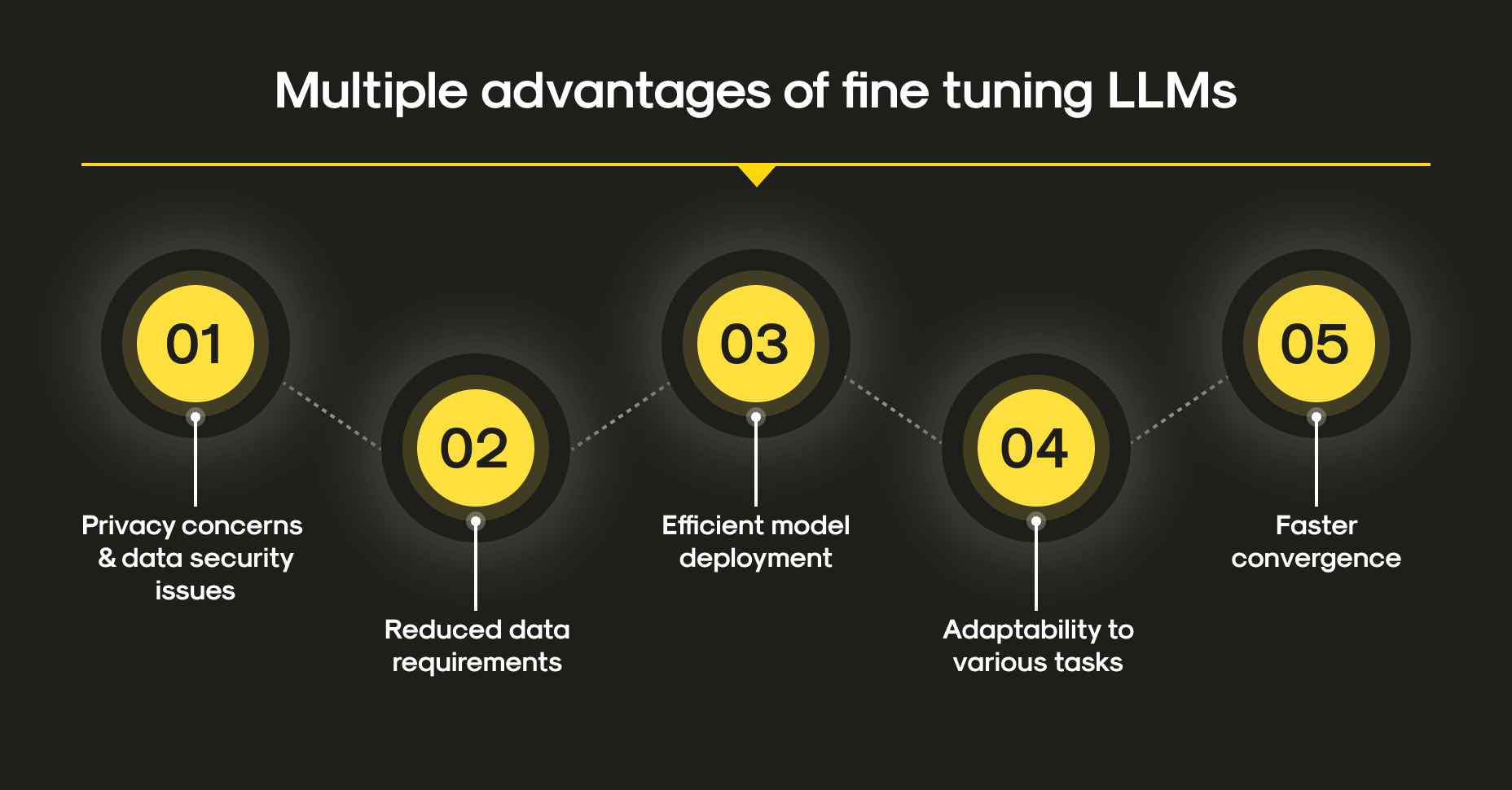

Multiple advantages of fine-tuning LLMs

Moreover, continuous fine-tuning fosters better compliance and auditability. By maintaining versioned datasets, tracking retraining events, and documenting model changes, organizations can satisfy regulatory requirements and internal governance policies. This approach also opens the door for experimentation: teams can safely test new training strategies, architectures, or adapters without disrupting core business operations.

How Mitrix can help

At Mitrix, we deliver AI/ML and generative AI development services that help companies:

- Design intelligent bots that understand supply chain workflows

- Integrate chatbots with tracking systems, CRMs, ERPs, and TMSs

- Build multilingual, multi-channel support agents (WhatsApp, web, SMS)

- Monitor performance and apply machine learning for ongoing improvements

We also design end-to-end fine-tuning pipelines: selecting base models (LLM comparison), preparing data, running cost-efficient LLM training (LoRA and instruction tuning), and deploying with monitoring and compliance. We then benchmark tuned models against public leaderboards and custom domain tests so you know the real business impact.

We help companies turn AI hype into practical outcomes:

- Build AI agents tailored to your workflows

- Integrate systems seamlessly across CRM, ERP, and support platforms

- Monitor performance and continuously improve AI outputs

- Ensure compliance, ethical standards, and reliable operation

Summing up

Fine-tuning is the bridge from powerful generic LLMs to practical, reliable business tools. Don’t treat it as optional experimentation; treat it like product development. Use LLM leaderboards and benchmarks to inform choices, prefer efficient methods like LoRA where possible, and measure success against business outcomes. Learn to fine-tune LLMs well, and you’ll turn generic capability into a sustained competitive advantage.

Ultimately, the value of fine-tuning isn’t just about squeezing out a few extra benchmark points or climbing an LLM leaderboard. It’s about aligning your LLMs with the specific workflows, compliance requirements, and customer expectations of your business. Treat fine-tuning as an iterative process that combines technical rigor with real-world feedback, and it will become the cornerstone of AI systems that don’t just perform well in comparison charts but actually deliver meaningful impact where it matters most.