Today, AI code generation tools like GitHub Copilot, Amazon CodeWhisperer (now part of Amazon Q Developer), and Tabnine are revolutionizing software development. Developers are shipping features faster, automating tedious tasks, and reducing time-to-market. But with great power comes a whole stack of new risks, and most of them are lurking right behind that autocomplete suggestion.

From security vulnerabilities to license compliance, businesses must strike a careful balance between the benefits of productivity and the hidden pitfalls of using AI-generated code. Fancy getting a security audit checklist to help companies stay on the safe side of innovation? Grab a coffee and jump in. In this article, you’ll discover:

- How AI code generation tools like GitHub Copilot and Amazon Q Developer are transforming software development, and where the hidden dangers lie

- The top five risks of using AI-generated code, from insecure logic to license violations and shadow code

- A 10-step security audit checklist designed specifically for teams using AI in their development workflows

- Pro tips for embedding governance and security into your DevOps pipeline without slowing down innovation

- What responsible AI adoption looks like in forward-thinking organizations

- How Mitrix helps companies build AI-powered systems that are not only fast and smart, but also secure, maintainable, and aligned with real-world business goals

Why AI-generated code is so tempting

Ask any developer and they’ll tell you: the pressure is on. Deadlines are tight, bugs are relentless, and stakeholders want results. Enter AI code generation tools, the digital sidekicks that promise to:

- Autocomplete boilerplate code

- Generate test cases

- Suggest architecture patterns

- Convert legacy code to modern syntax

- Catch common errors in real time

All in all, the productivity gains are hard to ignore. A 2023 GitHub study found that, in a controlled experiment, developers using Copilot completed a coding task 55% faster than those working without it. Junior developers benefit too: less repetitive typing gives them more room to explore unfamiliar languages and frameworks with confidence.

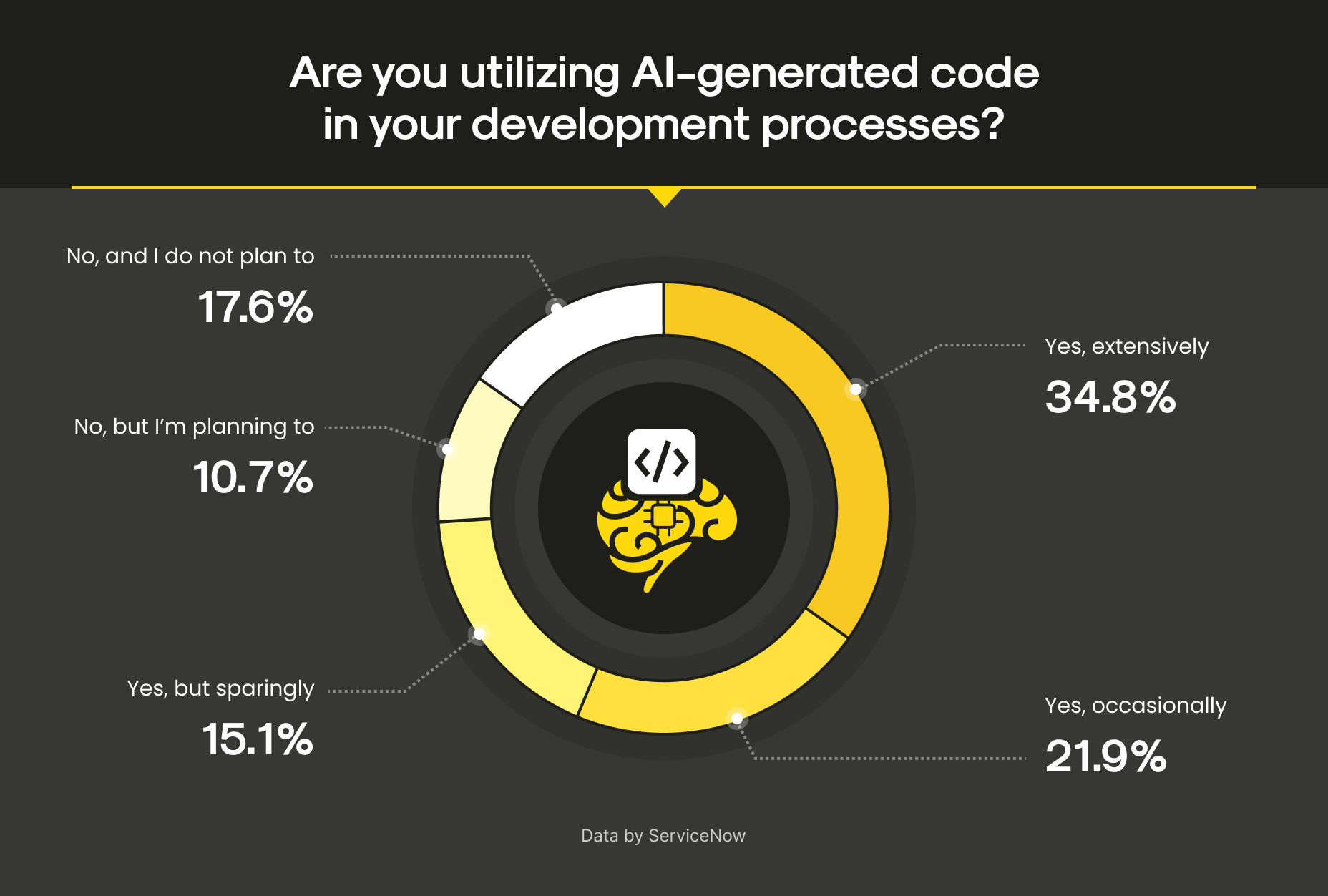

AI-generated code use

But while AI-generated code boosts velocity, the question is: what’s sneaking into your codebase under the hood? Let’s find out!

The risks: what can go wrong

1. Security vulnerabilities

AI tools can generate code that is syntactically correct and appears to work, yet is insecure. Think: unsanitized inputs, broken authentication flows, or hardcoded secrets. If left unchecked, these can create serious security liabilities.

2. Code provenance issues

Most generative models are trained on large volumes of publicly available code, often from open-source repositories. If your AI assistant spits out code that’s a direct (or slightly modified) copy of GPL-licensed material, you may have just introduced a license violation into your proprietary product.

3. Lack of context awareness

AI code suggestions aren’t fully aware of your application’s architecture, business logic, or environment. That means code can “fit” locally but break things globally.

4. Dependency on third-party APIs

When developers use AI tools to write code that integrates with external APIs, they might overlook proper error handling or security constraints, relying on the AI’s incomplete understanding of those services.

5. Shadow code

Developers may paste AI-generated snippets into production code without fully understanding them. The result is “shadow code”: fragments no one on the team fully understands, which turn into long-term maintainability nightmares.

Key takeaway: if your company wants the gains without the regret, embedding a robust auditing approach into your SDLC is essential. Security isn’t a one-time task; it’s a mindset and a recurring process. Here’s a security audit checklist tailored for AI-generated code.

10-step AI code security audit checklist

AI can write code faster than ever, but speed shouldn’t come at the cost of security. As AI-generated code enters production pipelines, so do new risks: from subtle bugs and insecure patterns to license violations and forgotten secrets. Just because it compiles doesn’t mean it’s safe. This checklist will help your team vet AI-generated code with a security-first mindset, blending traditional best practices with new-age AI realities.

1. Verify input sanitization

Ensure the code properly handles user inputs. All inputs must be validated and sanitized to prevent injection attacks like SQLi or XSS.

- Check for unsanitized forms or API endpoints

- Use secure libraries for input validation

- Avoid rolling your own sanitizers or parsers unless you have deep security expertise
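To make the first two points concrete, here is a minimal Python sketch using the standard-library sqlite3 module. The first function shows the string-built query pattern that assistants sometimes suggest; the second validates input against an allowlist and then uses a parameterized query. The table layout and the username rule are illustrative assumptions, not a universal policy.

```python
import re
import sqlite3

# Illustrative allowlist rule: 3-32 letters, digits, or underscores.
USERNAME_RE = re.compile(r"[A-Za-z0-9_]{3,32}")


def find_user_unsafe(conn: sqlite3.Connection, username: str):
    # Anti-pattern: input is pasted into the SQL text, so a value like
    # "' OR '1'='1" rewrites the WHERE clause and matches every row.
    query = f"SELECT id, username FROM users WHERE username = '{username}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, username: str):
    # Layer 1: reject anything outside the allowlist before it reaches the database.
    if not USERNAME_RE.fullmatch(username):
        raise ValueError("invalid username")
    # Layer 2: a parameterized query; the driver binds the value as data, never as SQL.
    return conn.execute(
        "SELECT id, username FROM users WHERE username = ?", (username,)
    ).fetchall()
```

Parameterized queries are the primary defense against SQLi, and allowlist validation is a second layer. XSS calls for a different control: context-aware output encoding wherever user data is rendered.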

2. Conduct static and dynamic analysis

Run your codebase through static analysis tools (e.g., SonarQube, Semgrep) and dynamic application security testing (DAST) tools to uncover hidden vulnerabilities.

- Don’t skip scanning AI-generated test cases

- Look for insecure patterns such as eval() calls, hardcoded tokens, or outdated libraries
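Dedicated tools like Semgrep or SonarQube do this properly, but a minimal sketch built on Python’s built-in ast module shows the idea behind pattern-based static checks. The secret-name heuristic and the scan_source function are hypothetical illustrations. Outdated libraries are better caught by dependency scanners than by this kind of check.

```python
import ast
import re
from typing import List

# Heuristic: variable names that suggest a credential (expect some false positives).
SECRET_NAME_RE = re.compile(r"(api_?key|secret|token|passw(or)?d)", re.IGNORECASE)
DANGEROUS_CALLS = {"eval", "exec"}


def scan_source(source: str, filename: str = "<string>") -> List[str]:
    """Return human-readable findings for one Python source file."""
    findings = []
    tree = ast.parse(source, filename=filename)
    for node in ast.walk(tree):
        # Flag direct calls to eval() / exec().
        if isinstance(node, ast.Call) and isinstance(node.func, ast.Name):
            if node.func.id in DANGEROUS_CALLS:
                findings.append(f"{filename}:{node.lineno}: call to {node.func.id}()")
        # Flag non-empty string literals assigned to secret-looking names
        # (plain assignments only, e.g. API_KEY = "...").
        if isinstance(node, ast.Assign) and isinstance(node.value, ast.Constant):
            if isinstance(node.value.value, str) and node.value.value:
                for target in node.targets:
                    if isinstance(target, ast.Name) and SECRET_NAME_RE.search(target.id):
                        findings.append(
                            f"{filename}:{node.lineno}: possible hardcoded secret in {target.id}"
                        )
    return findings
```

Running it over a file containing API_KEY = "sk-test-123" and eval(user_input) would report both lines, which is exactly the kind of finding worth a second look in AI-generated code.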

3. Check for license violations

Scan for reused code fragments that might contain protected intellectual property or incompatible licenses.

- Use Software Composition Analysis (SCA) tools

- Document sources of any third-party code used (even if suggested by an AI)
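SCA tools do the heavy lifting here, but one cheap complementary check is scanning for SPDX license identifiers, a standard header format many projects use to declare their license. The sketch below assumes a hypothetical allowlist agreed with your legal team and flags any identifier outside it. It is deliberately conservative (an expression like "MIT OR GPL-3.0-only" gets flagged), and it cannot see copied code that carries no license header at all.

```python
import re
from typing import List, Tuple

# Hypothetical policy: licenses pre-approved for use in a proprietary product.
ALLOWED_LICENSES = {"MIT", "Apache-2.0", "BSD-2-Clause", "BSD-3-Clause"}

# Matches e.g. "# SPDX-License-Identifier: MIT" or "// SPDX-License-Identifier: GPL-3.0-only".
SPDX_RE = re.compile(r"SPDX-License-Identifier:\s*(.+)")
# License IDs use letters, digits, '.', '+', and '-'; parentheses are skipped.
LICENSE_ID_RE = re.compile(r"[A-Za-z0-9.+-]+")
OPERATORS = {"AND", "OR", "WITH"}


def check_spdx(text: str) -> List[Tuple[int, str]]:
    """Return (line number, license id) pairs that are not on the allowlist."""
    violations = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        match = SPDX_RE.search(line)
        if not match:
            continue
        # Drop a trailing block-comment terminator such as "*/".
        expression = match.group(1).split("*/")[0]
        for license_id in LICENSE_ID_RE.findall(expression):
            # Conservative: every ID in the expression must be allowed, even in "X OR Y".
            if license_id not in OPERATORS and license_id not in ALLOWED_LICENSES:
                violations.append((lineno, license_id))
    return violations
```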

4. Enforce code review protocols

Treat AI-generated code like junior dev contributions: everything must be reviewed, questioned, and tested.

- Use PR templates to prompt security checks

- Don’t allow blind copy-paste from AI suggestions into production
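One way to make a PR template more than decoration is a CI step that fails when required security items are left unticked. The sketch below assumes a GitHub-style task list ("- [x] ...") in the pull request description; the item names and the unchecked_items helper are hypothetical, so adapt them to your own template.

```python
import re
from typing import List

# Hypothetical items from your pull request template.
REQUIRED_ITEMS = [
    "AI-generated code reviewed line by line",
    "Inputs validated and queries parameterized",
    "No secrets committed",
]

# Matches task-list lines such as "- [x] No secrets committed".
CHECKBOX_RE = re.compile(r"^\s*[-*]\s+\[([ xX])\]\s+(.*\S)\s*$")


def unchecked_items(pr_body: str) -> List[str]:
    """Return required checklist items that are missing or left unticked."""
    ticked = set()
    for line in pr_body.splitlines():
        match = CHECKBOX_RE.match(line)
        if match and match.group(1).lower() == "x":
            ticked.add(match.group(2))
    return [item for item in REQUIRED_ITEMS if item not in ticked]
```

In GitHub Actions, the description is available from the pull request event payload (github.event.pull_request.body), so a job could pass it to this function and fail when the returned list is non-empty. A ticked box is still only an attestation: it prompts the reviewer, it doesn’t replace the review.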

5. Review authentication and authorization logic

Check for weaknesses in identity and access management.

- Ensure authentication tokens aren’t stored insecurely

- Avoid default credentials or hardcoded login flows

- Validate access control at the resource level
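Here is a minimal sketch of two of these points using only Python’s standard library: API tokens are stored as hashes and compared in constant time, and access is checked against the owner of the specific resource rather than just “is the user logged in?”. The in-memory dictionary stands in for a database, and the Document model and function names are hypothetical.

```python
import hashlib
import hmac
import secrets
from dataclasses import dataclass
from typing import Dict, Tuple


def issue_token() -> Tuple[str, str]:
    """Create an API token. Return (token for the client, hash to persist)."""
    token = secrets.token_urlsafe(32)
    # A fast hash is acceptable for long random tokens; user-chosen passwords
    # need a slow KDF such as hashlib.scrypt instead.
    return token, hashlib.sha256(token.encode()).hexdigest()


def verify_token(presented: str, stored_hash: str) -> bool:
    presented_hash = hashlib.sha256(presented.encode()).hexdigest()
    # Constant-time comparison avoids leaking information through response timing.
    return hmac.compare_digest(presented_hash, stored_hash)


@dataclass
class Document:
    id: int
    owner_id: int
    body: str


class Forbidden(Exception):
    pass


def get_document(docs: Dict[int, Document], doc_id: int, user_id: int) -> Document:
    doc = docs.get(doc_id)
    # Authorize against this specific resource, not just "is the user logged in?".
    # The same error for "missing" and "not yours" keeps IDs from being probed.
    if doc is None or doc.owner_id != user_id:
        raise Forbidden(f"document {doc_id} is not accessible")
    return doc
```

Only the hash ever reaches storage, so a leaked table doesn’t hand out working tokens.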

6. Protect secrets and environment variables

If AI-generated code references API keys, database credentials, or tokens, it’s time to raise a red flag.

- Never store secrets in code

- Use secret managers (e.g., AWS Secrets Manager, HashiCorp Vault)

- If you pass secrets through environment variables, inject them at runtime and keep the source values encrypted at rest
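In code, “never store secrets in code” usually comes down to reading them at runtime and failing loudly when they are missing, instead of falling back to a hardcoded default that an assistant happily filled in. This sketch assumes your secret manager, CI system, or orchestrator injects values as environment variables; the variable names and helpers are hypothetical.

```python
import os


class MissingSecretError(RuntimeError):
    pass


def require_secret(name: str) -> str:
    """Read a secret injected into the environment at runtime."""
    value = os.environ.get(name)
    # Fail fast: no hardcoded fallback such as os.environ.get(name, "changeme").
    if not value:
        raise MissingSecretError(f"required secret {name!r} is not set")
    return value


def redact(value: str, visible: int = 4) -> str:
    """Mask a secret before it goes anywhere near a log line."""
    if len(value) <= visible:
        return "*" * len(value)
    return "*" * (len(value) - visible) + value[-visible:]


# Hypothetical variable name; your deployment tooling decides what it is called.
# db_password = require_secret("APP_DB_PASSWORD")
```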

7. Validate API usage

When AI generates API calls, verify:

- Proper error handling is implemented

- Rate limits and retries are respected

- API authentication and scopes are correctly applied
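Error handling and retries are where generated integration code is most often thin. The sketch below wraps any HTTP call (modeled as a hypothetical send function returning a small Response object, so it stays library-agnostic) with exponential backoff plus jitter, honors a numeric Retry-After header, and refuses to retry errors such as 401 or 403 that won’t resolve on their own. The status-code set and delays are assumptions to tune against each provider’s documented limits.

```python
import random
import time
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class Response:
    """Minimal stand-in for whatever your HTTP client returns."""
    status: int
    headers: Dict[str, str] = field(default_factory=dict)
    body: str = ""


class ApiError(Exception):
    pass


# Transient statuses worth retrying; adjust per provider documentation.
RETRYABLE_STATUSES = {429, 500, 502, 503, 504}


def call_with_retries(
    send: Callable[[], Response],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> Response:
    """Call send(), retrying transient failures with exponential backoff and jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        response = send()
        if 200 <= response.status < 300:
            return response
        if response.status not in RETRYABLE_STATUSES:
            # 400, 401, 403, 404 and friends won't fix themselves: fail immediately.
            raise ApiError(f"non-retryable status {response.status}: {response.body}")
        if attempt == max_attempts:
            break
        retry_after = response.headers.get("Retry-After", "")
        if retry_after.isdigit():
            # Server-specified wait in seconds (the HTTP-date form falls back to backoff).
            delay = float(retry_after)
        else:
            # 0.5s, 1s, 2s, ... plus random jitter so clients don't retry in lockstep.
            delay = base_delay * 2 ** (attempt - 1) + random.uniform(0, base_delay)
        sleep(delay)
    raise ApiError(f"gave up after {max_attempts} attempts (last status {response.status})")
```

The sleep parameter is injectable so tests can run without actually waiting; in production you would keep the default time.sleep.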

8. Benchmark performance and resource use

AI may suggest “creative” solutions, but some of them may be costly in terms of performance.

- Run load tests

- Look for inefficient loops or memory-heavy operations
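Load tests tell you that something is slow; reading the code tells you why. A classic example is membership testing against a list inside a loop, which turns a linear job into a quadratic one. The sketch below compares that pattern with a set-based version on made-up data; treat the printed timings as relative, since absolute numbers depend on your machine.

```python
import functools
import timeit
from typing import List


def known_ids_slow(candidates: List[int], known: List[int]) -> List[int]:
    # Each "in" scans the whole list: O(len(candidates) * len(known)) overall.
    return [c for c in candidates if c in known]


def known_ids_fast(candidates: List[int], known: List[int]) -> List[int]:
    # Build a set once; each membership test is then O(1) on average.
    known_set = set(known)
    return [c for c in candidates if c in known_set]


if __name__ == "__main__":
    candidates = list(range(0, 5_000, 3))
    known = list(range(0, 5_000, 2))
    # Same answer, very different cost.
    assert known_ids_slow(candidates, known) == known_ids_fast(candidates, known)
    for fn in (known_ids_slow, known_ids_fast):
        seconds = timeit.timeit(functools.partial(fn, candidates, known), number=3)
        print(f"{fn.__name__}: {seconds:.4f}s for 3 runs")
```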

9. Watch for code memorized from training data

Sometimes the AI “remembers” specific open-source code from training and reproduces it. Watch out for:

- Overly polished or familiar-looking code blocks

- Rare algorithms copied verbatim
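There’s no reliable way to eyeball memorized code, but a rough automated signal is token-level similarity against code you know you can’t ship, such as a corpus of GPL sources your legal team has flagged. The sketch below compares 5-token shingles using Jaccard similarity. The corpus, threshold, and function names are hypothetical, and simply renaming identifiers will defeat it, so treat a hit as a prompt for human review rather than a verdict.

```python
import re
from typing import Dict, List, Set, Tuple

# Identifiers, integers, or single punctuation characters; whitespace is ignored.
TOKEN_RE = re.compile(r"[A-Za-z_][A-Za-z0-9_]*|\d+|[^\sA-Za-z0-9_]")


def shingles(code: str, n: int = 5) -> Set[Tuple[str, ...]]:
    """Tokenize code and return the set of n-token windows."""
    tokens = TOKEN_RE.findall(code)
    return {tuple(tokens[i : i + n]) for i in range(len(tokens) - n + 1)}


def jaccard(a: Set, b: Set) -> float:
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def flag_similar(
    snippet: str, corpus: Dict[str, str], threshold: float = 0.6
) -> List[Tuple[str, float]]:
    """Return (source name, score) for corpus entries that closely resemble the snippet."""
    target = shingles(snippet)
    hits = []
    for name, reference in corpus.items():
        score = jaccard(target, shingles(reference))
        if score >= threshold:
            hits.append((name, round(score, 2)))
    return sorted(hits, key=lambda hit: hit[1], reverse=True)
```

Because whitespace is discarded during tokenization, a reformatted copy of a flagged function still scores as a near-exact match.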

10. Train developers to think critically

The biggest risk isn’t the AI itself but uncritical acceptance of its output. Provide regular training on:

- Reviewing and testing AI-generated code

- Understanding AI limitations

- Encouraging ethical, security-first development

Pro tips: embedding governance into your DevOps

A checklist is a great start, but real security comes from culture. As AI becomes a co-pilot in development, teams need more than just tools: they need guardrails that integrate seamlessly into the way they already work. Think of it as shifting left and thinking ahead. Here’s how to bake AI security into your DevOps pipeline and development culture without slowing things down.

- Adopt AI coding guidelines. Just as you have style guides or naming conventions, establish clear internal rules for when and how AI suggestions can be used.

- Use secure-by-default templates. Provide pre-vetted AI prompts or code templates for common tasks like form validation, auth handling, etc.

- Track AI use in version control. Tag AI-generated contributions in Git commits for easier auditing later.

- Segment AI usage in environments. Allow experimentation in dev and staging, but apply tighter controls in production codebases.

- Create a “license firewall”. Block code suggestions that contain certain license signatures unless verified by legal teams.
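Tagging AI-assisted commits is easiest to enforce with a Git commit-msg hook, which Git runs with the path to the commit message file as its first argument. The sketch below requires an “AI-Assisted: yes” or “AI-Assisted: no” line; that trailer name is a hypothetical team convention, not something Git defines.

```python
#!/usr/bin/env python3
"""Hypothetical commit-msg hook: require an "AI-Assisted: yes|no" line."""
import re
import sys
from typing import Optional

# The trailer name is a team convention, not a Git built-in.
TRAILER_RE = re.compile(r"^AI-Assisted:\s*(yes|no)\s*$", re.IGNORECASE | re.MULTILINE)


def check_message(message: str) -> Optional[str]:
    """Return an error string if the tag is missing, else None."""
    # Ignore comment lines, which Git strips from the final message by default.
    body = "\n".join(line for line in message.splitlines() if not line.startswith("#"))
    # Simplification: accepts the line anywhere, whereas Git's own trailer
    # parsing only looks at the final paragraph.
    if TRAILER_RE.search(body):
        return None
    return 'commit message needs an "AI-Assisted: yes" or "AI-Assisted: no" line'


def main(message_path: str) -> int:
    with open(message_path, encoding="utf-8") as fh:
        error = check_message(fh.read())
    if error:
        print(error, file=sys.stderr)
        return 1
    return 0


# Git always passes the message file path; the guard lets the module be imported safely.
if __name__ == "__main__" and len(sys.argv) > 1:
    sys.exit(main(sys.argv[1]))
```

Saved as .git/hooks/commit-msg and made executable, it rejects untagged commits locally. Hooks in .git/hooks aren’t shared through the repository, so a matching CI check keeps everyone honest. Auditing later can be as simple as git log --grep="AI-Assisted: yes".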

What the future looks like

The future of coding is not man vs. machine: it’s man plus machine. When used responsibly, AI code generation boosts productivity, bridges skill gaps, and makes dev work more enjoyable.

We’re already seeing responsible AI adoption models:

- GitHub Copilot now includes an AI code scanning feature to warn developers of insecure patterns.

- Enterprises are embedding AI policy modules in their IDEs to enforce compliance in real time.

- Open-source communities are establishing AI-attribution tags to track AI-generated contributions.

The key is to make AI-generated code auditable, explainable, and secure by default. In short, treat AI like an enthusiastic intern: full of energy, but everything they touch needs to be double-checked.

How Mitrix can help

At Mitrix, we offer AI/ML and generative AI development services to help businesses move faster, work smarter, and deliver more value. We help businesses go beyond proof-of-concept AI, focusing on solutions that aren’t just viable but valuable, trusted, and adopted.

We don’t just build models. Our team builds intelligent systems that work where it matters most: in your day-to-day operations, with your real users, under real constraints.

Our approach to AI development includes:

- Persistent context and memory design

- RAG systems that blend LLMs with real-time data

- Session-aware UX and interaction design

- Monitoring tools for drift, feedback, and behavior over time

Whether you’re building a customer support agent, healthcare assistant, or analytics copilot, we help you avoid context collapse, so your AI performs predictably, safely, and effectively. Granted, AI isn’t magic. But with the right architecture and guardrails, it can feel like it is. Do you need help architecting AI systems that keep their head in the game? Let’s talk!

Wrapping up

AI-generated code can supercharge your development efforts, but only if you treat it with the same rigor you would apply to any mission-critical tool. The thing is, productivity without security is a trap. By adopting a proactive audit mindset, following structured guidelines, and training your developers to work alongside AI, you can enjoy the best of both worlds: smarter, faster code and a more secure product.

As AI tooling matures, teams that combine speed with scrutiny and automation with accountability will thrive. Security isn’t a speed bump; it’s part of the engine. The formula is simple: build with care, secure with intent, and you’ll stay ahead of the competition.