In 2025, businesses across industries understand that raw intelligence isn’t enough. What really counts is the ability to remember what matters, and that’s where context comes in. And when it breaks down, even the most powerful systems stumble.

This phenomenon has a name: context collapse. It’s that moment when your AI assistant forgets what you said five seconds ago, recommends a product you already bought, or delivers a confident but completely irrelevant response. In this article, you will discover:

- What context collapse is and why it undermines even the smartest AI systems

- The most common places where context collapse occurs, and why it’s more than a UX flaw

- The technical and organizational causes behind AI’s loss of context

- The real-world consequences of context collapse, from user frustration to reputational damage

- Proven strategies to prevent context collapse in AI-powered features

- What makes a context-aware AI system, and how it differs from typical LLM-based solutions

- How Mitrix helps companies build AI systems that remember, adapt, and actually deliver

What is context collapse?

In simple terms, context collapse happens when an AI system loses track of the relevant information it needs to generate a coherent response.

If an analogy helps: imagine giving someone directions to your house, only for them to forget the first half of the route before you’ve finished explaining the second. That’s context collapse, but instead of an awkward guest, you’re dealing with a chatbot, a virtual assistant, or a machine learning model in a mission-critical system.

This breakdown can happen for many reasons:

- Limited memory or context window

- No linkage between previous user inputs

- Inability to pull or validate real-time information

- Multiple users or shifting goals in a single session

When context is lost, AI loses grip on the conversation or task at hand. The result? Irrelevant replies, hallucinated outputs, or actions that feel robotic rather than intelligent.

Where context collapse happens most

Context collapse is a recurring failure that erodes the value of AI systems. You’ll find its fingerprints across industries, tools, and user experiences. Sometimes it’s subtle: a missed cue or an off-target reply. Other times it’s obvious, like a system that seems to start from zero every time you interact with it. Let’s look at where context collapse most commonly strikes, shall we?

Conversational AI

Chatbots forget earlier messages or repeat previously answered questions. For instance, a support bot might suggest “Try resetting the device” even after you’ve said you already did.

Healthcare

A clinical decision-support system gives recommendations based on population data instead of patient-specific history. The model didn’t factor in allergies, conditions, or past treatments, because that context was missing.

E-commerce

Recommendation engines suggest items the user has already purchased. Why? Because they lost transactional history or failed to align it with real-time intent.
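As a minimal sketch of the missing step, assuming the engine can see the user’s purchase history at serving time, a final filter can drop already-owned items before they reach the screen. The function name and the idea of a “repurchasable” set (for consumables people buy again) are illustrative assumptions, not part of any specific recommendation library.

```python
from typing import Iterable


def filter_recommendations(
    candidates: Iterable[str],
    purchased: Iterable[str],
    repurchasable: Iterable[str] = (),
) -> list[str]:
    """Remove already-purchased items from a ranked candidate list.

    Hypothetical helper for illustration only. Items in `repurchasable`
    (e.g. consumables) are kept even if the user bought them before.
    """
    owned = set(purchased)
    rebuyable = set(repurchasable)
    # Keep the recommender's original ranking order.
    return [item for item in candidates if item not in owned or item in rebuyable]
```

For example, `filter_recommendations(["laptop", "mouse", "coffee beans"], ["laptop", "coffee beans"], ["coffee beans"])` keeps `"mouse"` and `"coffee beans"` but drops the laptop the user already owns.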

BI tools

Analytics copilots reference outdated KPIs, misinterpret current user goals, or confuse departments, simply because the conversation shifted and the system didn’t keep up.

In short: context collapse is everywhere. And it’s more than a UX issue; it’s a trust issue.

Why context collapses in AI systems

Let’s break down the core technical and strategic reasons behind context collapse.

1. Short context windows

Language models operate within fixed token limits, say, 4,000 or 32,000 tokens. Once a conversation exceeds that limit, older content has to be truncated or summarized to make room. That might work for short answers, but not for multi-turn interactions or dynamic workflows.

Even long-context models can be misused if prompt engineering doesn’t carefully carry over important inputs.
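To make the truncation problem concrete, here is a minimal sketch of a sliding-window strategy that always keeps “pinned” messages (the system prompt, or facts the user has stated) and fills the remaining budget with the most recent turns. It assumes one token per whitespace-separated word, which is only a stand-in; a real system would count tokens with the target model’s own tokenizer. `Message`, `pinned`, and `fit_to_window` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str              # "system", "user", or "assistant"
    content: str
    pinned: bool = False   # pinned messages are never truncated


def count_tokens(text: str) -> int:
    # Crude assumption: one token per whitespace-separated word.
    return len(text.split())


def fit_to_window(messages: list[Message], budget: int) -> list[Message]:
    """Keep every pinned message, then as many of the most recent
    unpinned messages as fit in the remaining token budget."""
    pinned = [m for m in messages if m.pinned]
    used = sum(count_tokens(m.content) for m in pinned)
    kept_recent: list[Message] = []
    # Walk backwards so the newest turns are kept first; stop at the first
    # turn that doesn't fit so the kept history stays contiguous.
    for m in reversed([m for m in messages if not m.pinned]):
        cost = count_tokens(m.content)
        if used + cost > budget:
            break
        kept_recent.append(m)
        used += cost
    kept_ids = {id(m) for m in pinned + kept_recent}
    # Return the survivors in their original conversation order.
    return [m for m in messages if id(m) in kept_ids]
```

Pinning a user statement such as “I already reset the device” is one way to carry important inputs past the truncation point; summarizing the dropped turns is a common alternative.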

2. No grounding in external systems

AI systems often lack access to external sources of truth like databases, CRMs, or real-time APIs. This means they’re guessing context based on language alone. Unsurprisingly, they often get it wrong.

3. Multi-intent or multi-user confusion

In shared environments (e.g., a chatbot serving both customers and staff), models can’t always distinguish between roles or detect a change in intent. One user’s input bleeds into another user’s expected output, creating chaos.
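One common mitigation, sketched here with a hypothetical in-memory `SessionStore` class, is to scope conversation state by both user and role, so the model only ever sees the history that belongs to the current speaker. This is an illustrative assumption rather than a prescribed design; a production system would persist the state and take user and role from authentication rather than trusting raw strings.

```python
from collections import defaultdict


class SessionStore:
    """Keeps a separate history per (user_id, role) pair so one user's
    turns never leak into another user's prompt."""

    def __init__(self) -> None:
        self._histories: defaultdict[tuple[str, str], list[str]] = defaultdict(list)

    def append(self, user_id: str, role: str, text: str) -> None:
        self._histories[(user_id, role)].append(text)

    def history(self, user_id: str, role: str) -> list[str]:
        # .get() does not create an entry for unknown keys; return a copy
        # so callers can't mutate the stored history by accident.
        return list(self._histories.get((user_id, role), []))
```

A customer named Alice and a staff member named Bob then build prompts from `store.history("alice", "customer")` and `store.history("bob", "staff")` respectively, and neither sees the other’s turns.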

4. Ambiguous or missing signals

Context isn’t always explicit. It may live in tone, timing, prior interactions, or domain knowledge. If the AI doesn’t capture these subtle cues, it can’t “read the room.”

5. No memory or feedback loop

Many AI systems are stateless: they don’t remember you from one session to the next, and they don’t improve based on your feedback. That’s a recipe for repetitive, frustrating interactions.

The consequences of context collapse

Let’s face it: when AI systems collapse context, they stop being useful. It doesn’t matter how advanced the model is, how much training data it’s consumed, or how impressive the demos were. If it can’t remember what matters, it breaks the user experience.

Here’s what that looks like in real life:

- User frustration. “Why isn’t this thing listening to me?”

- Loss of trust. Especially dangerous in sensitive domains like healthcare, legal, or finance

- Wasted time. Users have to re-explain themselves, re-check results, or abandon the system entirely

- Shelfware risk. Systems that consistently fail to understand context are left unused

- Reputation damage. Customers and stakeholders question the company’s competence

And here’s the kicker: even high-performing models can fall into this trap. It’s not a matter of IQ; it’s a matter of memory.

How to prevent context collapse

Context collapse isn’t inevitable. Smart design choices and the right architecture can make a big difference.

1. Use long-context or memory-augmented models

Modern LLMs like GPT-4o, Claude 3.5, or Gemini 1.5 offer much longer context windows, 128,000 tokens or more. But even these need to be used carefully. For high-stakes use cases, combine them with external memory, like vector databases or user profiles.

Persistent memory enables AI to recall important details across sessions: names, preferences, constraints, goals.
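The user-profile side of persistent memory can be sketched as a small per-user fact store that is serialized at the end of a session and reloaded at the start of the next one. This is a minimal sketch under stated assumptions: `UserMemory` and its methods are hypothetical, where the JSON string is stored (file, key-value store, database) is left open, and the semantic recall a vector database would add is not shown.

```python
import json
from typing import Optional


class UserMemory:
    """Hypothetical per-user fact store that survives between sessions via JSON."""

    def __init__(self, facts: Optional[dict[str, dict[str, str]]] = None) -> None:
        self.facts: dict[str, dict[str, str]] = facts or {}

    def remember(self, user_id: str, key: str, value: str) -> None:
        # Later values overwrite earlier ones, e.g. an updated preference.
        self.facts.setdefault(user_id, {})[key] = value

    def recall(self, user_id: str) -> dict[str, str]:
        return dict(self.facts.get(user_id, {}))

    def as_prompt_context(self, user_id: str) -> str:
        # Render remembered facts as bullet lines to prepend to a model prompt.
        return "\n".join(f"- {k}: {v}" for k, v in sorted(self.recall(user_id).items()))

    def to_json(self) -> str:
        return json.dumps(self.facts)

    @classmethod
    def from_json(cls, data: str) -> "UserMemory":
        return cls(json.loads(data))
```

In a new session, `UserMemory.from_json(saved).as_prompt_context(user_id)` yields lines such as `- allergy: penicillin` that can be placed at the top of the prompt, so the model starts with what it already learned about the user.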

2. Integrate grounded retrieval systems

Pull in relevant facts from structured sources, like product databases, support articles, or medical guidelines, using retrieval-augmented generation (RAG). This keeps the AI grounded in current, verifiable facts rather than only in what it absorbed during pretraining.
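The core RAG loop (retrieve the most relevant sources, then put them in the prompt) can be sketched in a few lines. To stay self-contained, this sketch swaps in bag-of-words cosine similarity where a real system would use learned embeddings and a vector database; `retrieve` and `build_grounded_prompt` are hypothetical names, and the instruction wording in the prompt is only an example.

```python
import math
import re
from collections import Counter


def _vectorize(text: str) -> Counter:
    # Lowercased word counts: a stand-in for a learned embedding.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = _vectorize(query)
    ranked = sorted(documents, key=lambda d: _cosine(q, _vectorize(d)), reverse=True)
    return ranked[:k]


def build_grounded_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Place retrieved sources in the prompt so the answer is tied to them."""
    sources = retrieve(query, documents, k)
    context = "\n".join(f"[{i + 1}] {doc}" for i, doc in enumerate(sources))
    return (
        "Answer using only the sources below. If they don't cover the question, say so.\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )
```

The design choice that matters is the instruction to answer only from the supplied sources: it gives the model an explicit way to say “I don’t know” instead of filling gaps from pretraining.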

3. Design for intent shifts

Build user flows that detect and handle changes in topic or goal. Use intent detection or conversation segmentation models to split sessions intelligently.
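Conversation segmentation can be illustrated with a deliberately simple keyword-based intent detector: a new segment starts whenever the detected intent changes, and turns with no clear signal (like “thanks”) stay with the current topic. The keyword lists and function names are hypothetical; a real system would typically use a trained intent classifier or embedding similarity in place of `detect_intent`.

```python
import re
from typing import Optional

# Hypothetical keyword lists; a production system would use a trained classifier.
INTENT_KEYWORDS: dict[str, set[str]] = {
    "billing": {"invoice", "refund", "charge", "payment"},
    "technical": {"error", "crash", "reset", "install"},
    "shipping": {"delivery", "tracking", "shipped", "package"},
}


def detect_intent(text: str, previous: Optional[str]) -> Optional[str]:
    words = set(re.findall(r"[a-z]+", text.lower()))
    scores = {intent: len(words & kws) for intent, kws in INTENT_KEYWORDS.items()}
    best = max(scores, key=lambda intent: scores[intent])
    # No keyword hit: assume the user is continuing the current topic.
    return best if scores[best] > 0 else previous


def segment(turns: list[str]) -> list[tuple[Optional[str], list[str]]]:
    """Split a conversation into runs of turns that share one intent."""
    segments: list[tuple[Optional[str], list[str]]] = []
    current: Optional[str] = None
    for turn in turns:
        intent = detect_intent(turn, current)
        if not segments or intent != current:
            segments.append((intent, [turn]))
            current = intent
        else:
            segments[-1][1].append(turn)
    return segments
```

Each segment can then get its own context or summary, so billing details stop leaking into a technical troubleshooting thread once the user changes topic.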

4. Log, monitor, and iterate

Capture metadata and feedback signals to track context success or failure. Was the AI off-topic? Did the user rephrase the same question three times? Use these signals to refine model prompts, logic, or architecture.
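The “rephrased the same question three times” signal is easy to capture automatically. This minimal sketch compares consecutive user messages by word-set (Jaccard) similarity and flags the turn at which a run of near-duplicates reaches a chosen length. The threshold of 0.5 and the run length of 3 are illustrative assumptions to tune against real logs, and `flag_rephrasing` is a hypothetical name.

```python
import re


def _words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: str, b: str) -> float:
    """Word-set overlap between two messages, from 0.0 to 1.0."""
    wa, wb = _words(a), _words(b)
    union = wa | wb
    return len(wa & wb) / len(union) if union else 0.0


def flag_rephrasing(user_turns: list[str], threshold: float = 0.5, repeats: int = 3) -> list[int]:
    """Return indices of turns where the user has asked essentially
    the same thing `repeats` times in a row."""
    flagged: list[int] = []
    run = 1
    for i in range(1, len(user_turns)):
        if jaccard(user_turns[i], user_turns[i - 1]) >= threshold:
            run += 1
        else:
            run = 1  # a new topic resets the streak
        if run >= repeats:
            flagged.append(i)
    return flagged
```

Flagged indices can be logged alongside the prompt and model response, giving a concrete list of conversations where context was likely lost.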

5. Add explainability and clarification

When context is ambiguous, don’t fake confidence; just ask. Users are far more forgiving of clarification than hallucination.
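A simple way to implement “just ask” is a confidence gate: answer only when the best interpretation is both confident enough and clearly ahead of the runner-up, and otherwise return a clarifying question. This sketch assumes each candidate already carries a score in [0, 1] from some upstream retriever or classifier; `Candidate`, `respond`, and both thresholds are hypothetical and would need calibration.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    interpretation: str  # what we think the user means
    answer: str          # the response for that reading
    score: float         # assumed confidence in [0, 1] from an upstream model


def respond(candidates: list[Candidate], min_score: float = 0.6, min_margin: float = 0.15) -> str:
    if not candidates:
        return "Could you tell me a bit more about what you need?"
    ranked = sorted(candidates, key=lambda c: c.score, reverse=True)
    best = ranked[0]
    if best.score < min_score:
        # Nothing is convincing: ask instead of guessing.
        return "I'm not sure I understood. Could you rephrase or add a detail?"
    if len(ranked) > 1 and best.score - ranked[1].score < min_margin:
        # Two plausible readings: let the user pick.
        return f"Did you mean {best.interpretation} or {ranked[1].interpretation}?"
    return best.answer
```

The margin check matters as much as the absolute threshold: a model that is 70% sure of one reading and 65% sure of another is really facing a coin flip, and a short question costs the user far less than a confident wrong answer.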

6. Define ownership and guardrails

Who ensures the AI is behaving contextually? Data scientists? Product managers? Assign clear ownership and create a feedback loop between AI builders and real users. Context is a moving target, so it needs continual tuning.

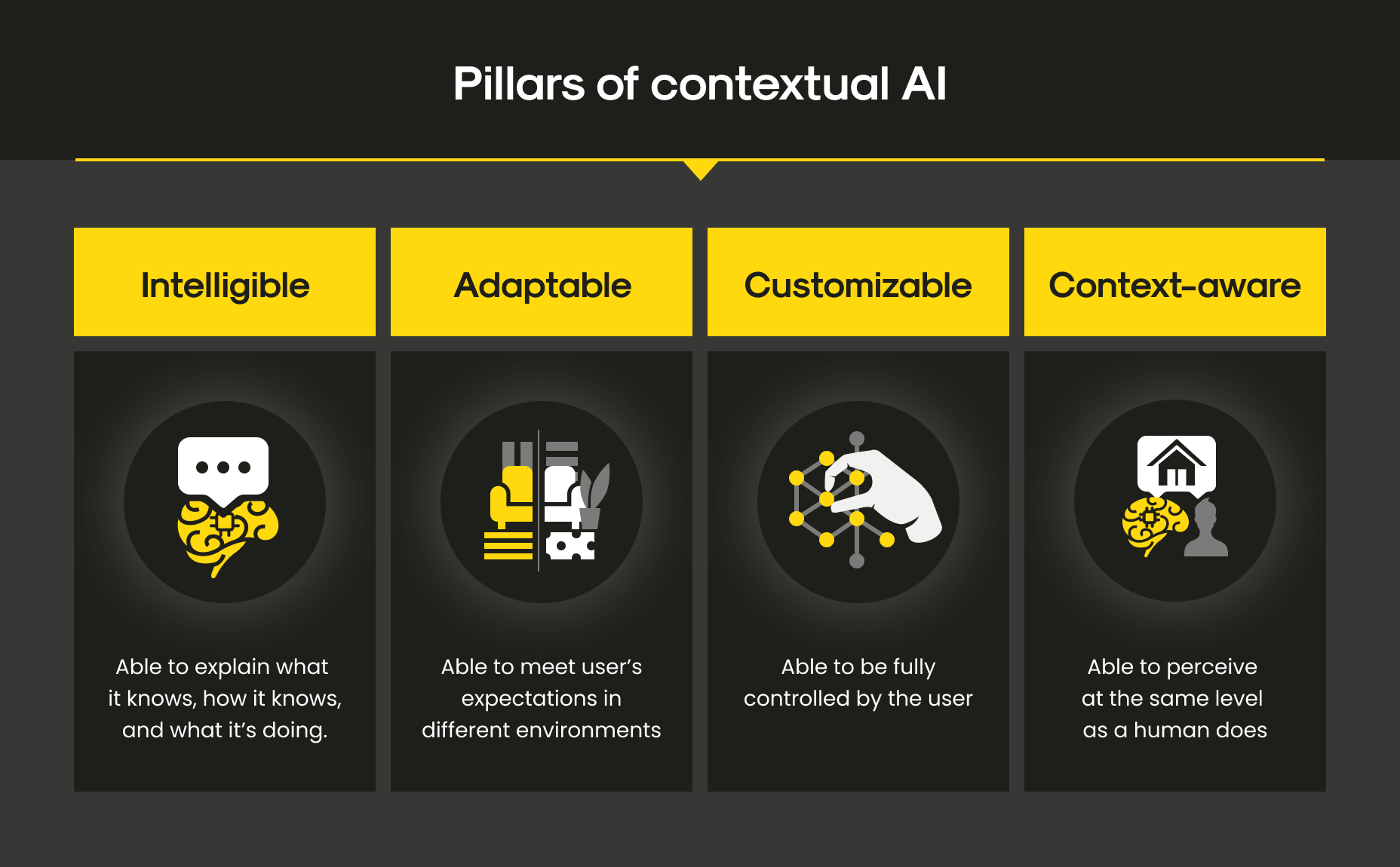

A better future: context-aware AI systems

Today, context-aware AI is no longer a buzzword. It’s the difference between novelty and necessity, between tools that impress in a demo and systems that actually deliver in the wild. In a world flooded with models that can generate, summarize, and predict, what truly sets the next generation apart is not what they know, but what they remember, understand, and adapt to.

To cut a long story short, context is the backbone of meaningful AI interaction. Without it, even the smartest model becomes a blunt instrument, repeating patterns without understanding and offering answers without insight.

Here’s what the next generation of AI systems will prioritize:

- Semantic memory layers that retain relevant past interactions

- Multi-agent architectures where specialized AI agents manage roles, context, and intent separately

- Continuous learning pipelines where models improve over time based on feedback, not just retraining

And most importantly: AI will become more aligned with people, meaning their workflows, constraints, and goals, not just their inputs and outputs.

How Mitrix can help

At Mitrix, we offer AI/ML and generative AI development services to help businesses move faster, work smarter, and deliver more value. We help them go beyond proof-of-concept AI, building solutions that aren’t just viable but valuable, trusted, and adopted.

We don’t just build models. Our team builds intelligent systems that work where it matters most: in your day-to-day operations, with your real users, under real constraints.

Our approach to AI development includes:

- Persistent context and memory design

- RAG systems that blend LLMs with real-time data

- Session-aware UX and interaction design

- Monitoring tools for drift, feedback, and behavior over time

Whether you’re building a customer support agent, healthcare assistant, or analytics copilot, we help you avoid context collapse, so your AI performs predictably, safely, and effectively. Granted, AI isn’t magic. But with the right architecture and guardrails, it can feel like it is. Need help architecting AI systems that keep their head in the game? Let’s talk!

Wrapping up

Context collapse is the Achilles’ heel of modern AI. What matters isn’t how smart your model is; it’s whether it stays relevant. If your AI system forgets what matters, users will forget your AI system.

To fix this, shift your focus from short-term output to long-term continuity. Design with context in mind. Invest in grounding, memory, and alignment with user goals. And never assume that accuracy alone equals usefulness. Because in the end, intelligence without context isn’t intelligence at all.